Courrier des statistiques N7 - 2022

Assessing students’ competences: a unique measurement process

Standardised assessments of students’ competences have now become an integral part of the public debate on education. Their results are used both to inform educational policies and to construct statistical indicators that feed into the monitoring tools used by educational stakeholders.

Building these instruments follows a rather singular approach, in that the object of measurement – the competence – is itself a construction, which does not pre-exist the measurement operation itself. This results in specific procedures and modelling, which are part of psychometrics, a field that is relatively unknown in France, even though it has a large part of its origins there.

This article aims to provide an overview of the methods used to measure students’ competences, emphasising their specific features and pointing out some enriching perspectives.

- Measuring skills: when the instrument creates its object

- Students’ competency levels: old question, recent answers

- Today, there is a vast system of standardised assessments...

- ... adapted to different uses

- ... and anchored in a robust methodological heritage

- What “construct” is being targeted?

- The design of the units of measurement or items

- The sampling of students

- Administration of the assessments

- Student involvement

- Marking the answers

- Some psychometric concepts around the notion of a latent variable

- Moving from the measurement units to the measurement scale

- A need for modelling to link observations to the latent variable

- Box 1. Probabilistic models to separate the skill level from the difficulty of the item

- How useful is this for Official Statistics?

- In perspective: assessing cross-curricular competences...

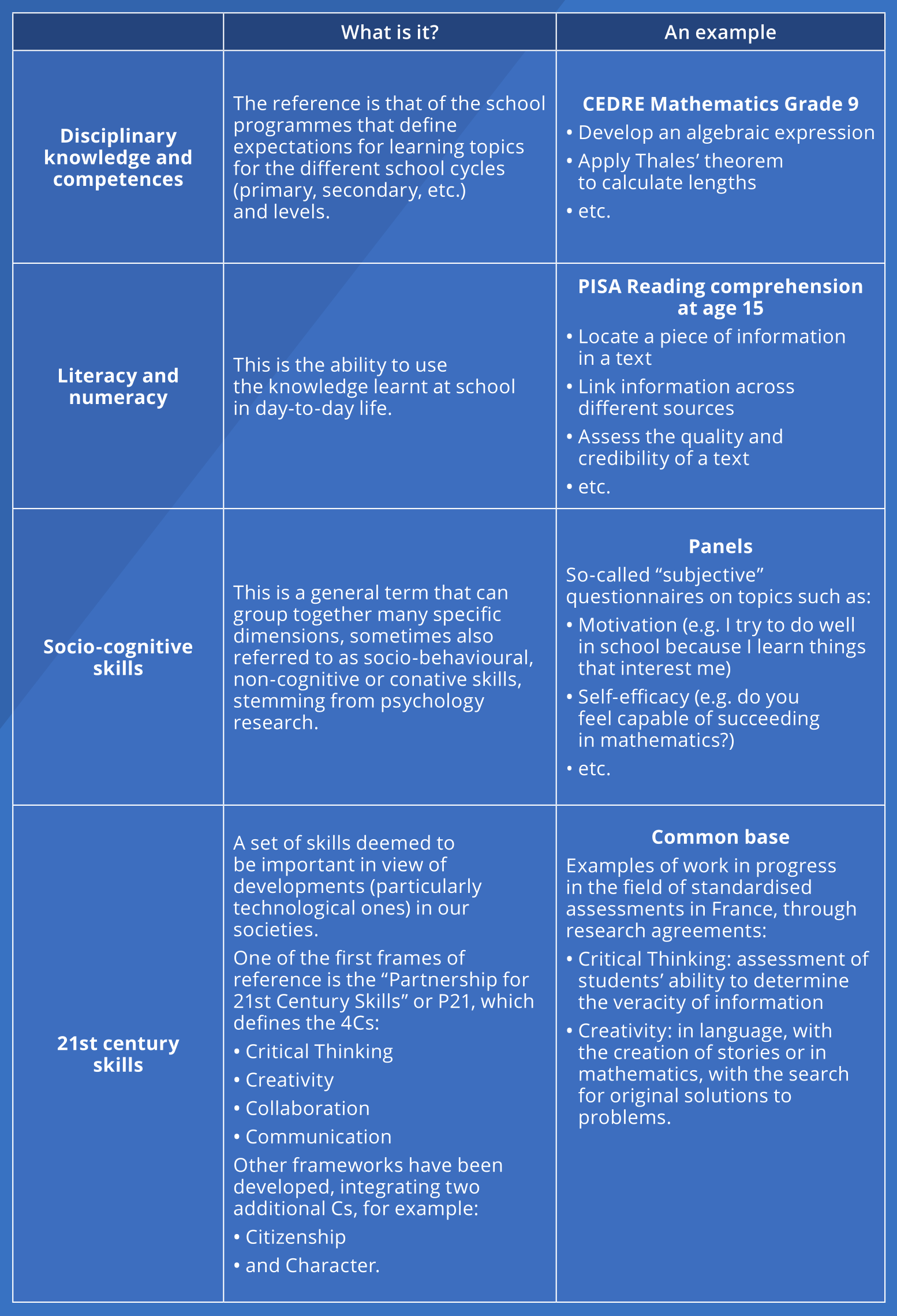

- Box 2. Standardised assessments cover a very wide variety of skill types

- ... and incorporating the digital revolution

- Box 3. Analysing students’ digital tracks

Measuring skills: when the instrument creates its object

What is the competency level of the students? How does it change? How do French students compare to their peers in other countries? Beyond school, to what extent are their skills suitable for employment? These questions receive a great deal of attention, which is only natural. They generate comments and debates, both in the media and among professionals and specialists, which are not always free of prejudice. They therefore call for objective responses based on statistics and are thus a challenge for Official Statistics.

However, what is a skill level and how can it be measured?

Multiple aspects of the measurement process are relatively traditional in the field of statistical surveys. However, the very nature of the variable measured sets these assessments apart, as skills are not observed directly: only their manifestations are observable. These manifestations are, for example, the results of a standardised test: the assumed existence of the target skill is then revealed by success on the test or, more precisely, by the aggregation of the results on each test item.

In a way, it could be argued that it is the measurement operation itself that defines the object of the measurement in concrete terms. Moreover, in the field of psychometrics, which is concerned with the measurement of psychological dimensions in general, the term “construct” is used to refer to the object being measured: logical intelligence, reading, working memory, etc. Hence the famous quip attributed to Alfred Binet, the inventor of the discipline in France at the beginning of the 20th century: when asked “What is intelligence?”, he is said to have replied “It is what my test measures.”

Of course, most statistics can be considered constructs, based on conventions. However, those relating to the assessment of skills can be set apart by how tangible the variable concerned is.

For example, academic success can be understood through “baccalaureate pass rate”, which can be measured directly as it is materialised by the award of a diploma, giving rise to an administrative act that can be accounted for (Evain, 2020). In turn, “dropping out of school” is a concept that must be based on a precise definition, chosen from a set of possible definitions: this conventional choice is an act of construction. Once the definition has been established, the calculation is most often based on the observation of existing administrative variables, such as non-re-enrolment in an educational institution.

By comparison, the measurement of students’ competences appears to be a fairly particular construction approach. The underlying idea of psychometrics is to postulate that a test measures performances, which are the concrete manifestation of an ability that is not directly observable. Thus, the object of the measurements is a latent variable. This approach is not unique to the field of cognition. This type of variable is also found in economics with the notion of propensity, in political science with the notion of opinion or in medicine with the notion of quality of life (Falissard, 2008).

This uniqueness is not merely conceptual; it has specific implications when it comes to objectively answering questions of public debates, such as students’ competency levels, using statistical indicators.

Students’ competency levels: old question, recent answers

Just over thirty years ago, the debate on the hypothetical decline in academic attainment raged, fuelled by questions about the need to transform the education system (can a mass system be successful?) or about the link between education and the economy (are students’ skills a lever in international economic competition? See Goldberg and Harvey (1983) for the United States). In France, Thélot (1992) and Baudelot and Establet (1989) then stressed the glaring lack of direct and objective measurements of students’ knowledge.

While some people were tempted to use exam statistics, these did not make it possible to identify changes in students’ competency levels. Indeed, each year the contents of the examination change and no rigorous comparison of their difficulty is carried out. For example, comparing two baccalaureate pass rates is not a valid way of measuring a change over time: if the pass rate rises, is this because the required level is lower or because the students’ competency levels are better?

Until the 1990s, the only data available to measure trends were those from the “psycho‑technical” tests conducted during the “three days” organised by the Ministry of Defence. However, these tests did not concern all students.

The use of standardised tests then appeared to be the correct solution. This type of measurement system has its origins in France, in the work of Alfred Binet and his collaborators at the start of the 20th century: however, psychometrics is a discipline that remains very poorly understood in France today. Paradoxically, student assessments are very much present in the French education system, by means of frequent ongoing checks conducted by teachers. However, educational assessment studies, which have been carried out for almost a century, including in particular the Carnegie Commission’s work on the baccalaureate in 1936, show that teachers’ appraisals of their students are partly affected by subjectivity and may depend on factors other than the students’ skill levels (Piéron, 1963). The grading of students is thus likely to vary significantly depending on the characteristics of teachers, school contexts and the students themselves.

In contrast, in other countries, psychometrics is a field that has developed significantly, particularly in the United States, through themes such as scholastic meritocracy (ensuring fair treatment of students) and intelligence, a subject that has also led to certain ideological excesses (Gould, 1997).

Thus, despite strong recurring social demand, the issue of student skill levels and their evolution has long suffered from a lack of conceptual and methodological framing. The use of standardised assessment systems is relatively recent in the French statistical survey landscape.

Today, there is a vast system of standardised assessments...

On the basis of this observation, in the 1990s, the Directorate of Evaluation and Forecasting (direction de l’Évaluation et de la Prospective – DEP) of the Ministry of National Education conducted several studies aimed at measuring the evolution of students’ competences. The clear objective of this work was to respond to those arguing that the school system was failing, often nostalgic for a bygone school model. However, these first comparative surveys displayed some methodological weaknesses.

Yet France had long-standing experience in student assessment campaigns, particularly through the national diagnostic assessments completed by all grade 3 and grade 6 students at the start of each school year between 1989 and 2007. These assessments, however, did not allow for robust statistical comparisons over time. First, their primary objective, like that of all exams, was not to account for the evolution of students’ competency levels over time, but to serve as tools for identifying individual difficulties for teachers. Second, knowledge in the field of measurement in education, and in psychometrics more broadly, was not very widespread; this observation still holds, although the experience of DEPP in this field has improved considerably over the past 20 or so years.

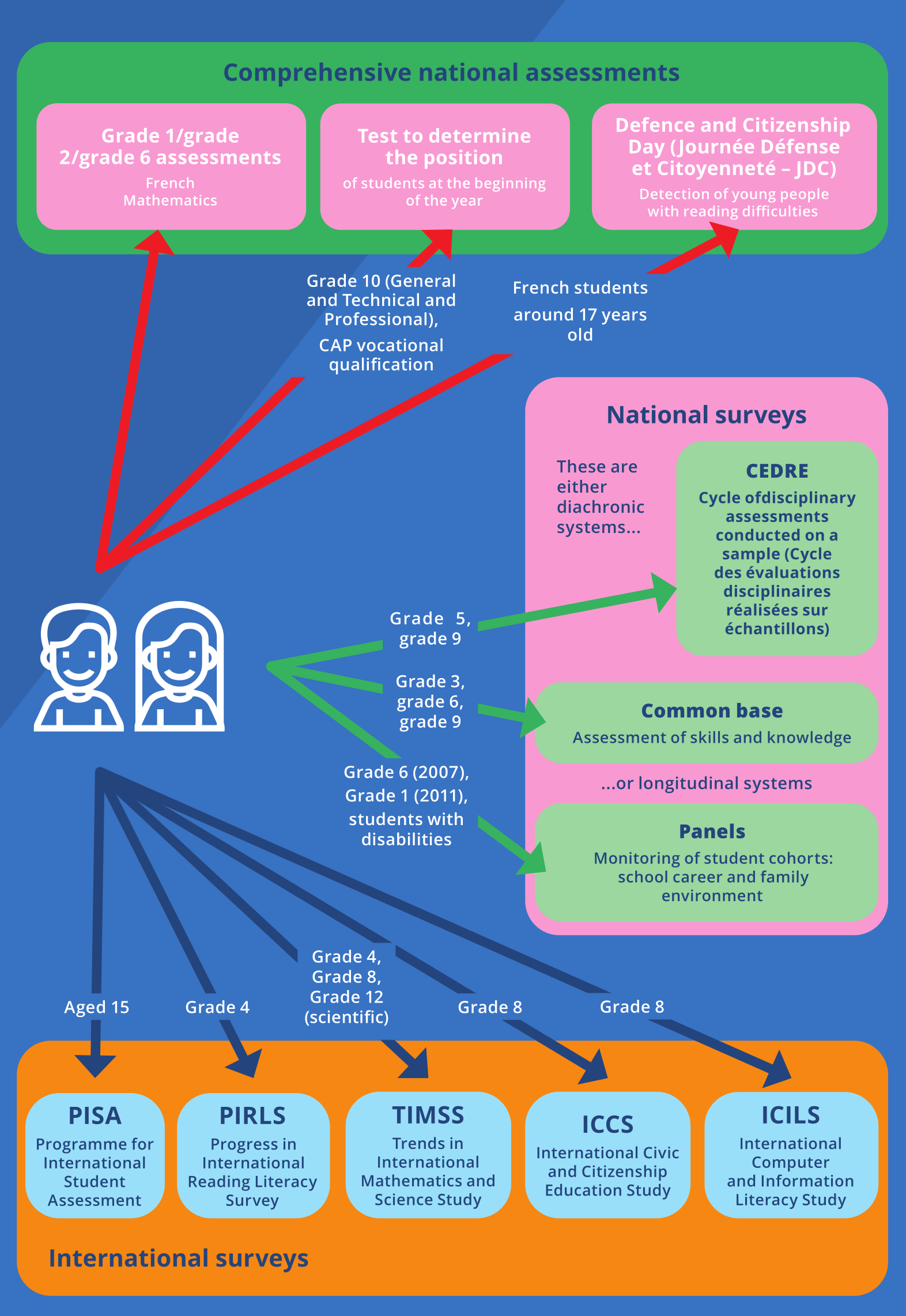

Gradually, other assessment systems designed to allow comparisons over time have developed in France (Figure 1). Several relatively recent phenomena explain this growth. Firstly, the desire to create monitoring indicators for managing the system has become increasingly pervasive, particularly under the impetus of the Organic Law on Finance Laws, which requires the construction of annual results indicators, such as the percentage of students who acquire the expected skills at different school levels.

Figure 1. A vast panorama of standardised assessments for French students

At the same time, international surveys, such as PISA (OECD, 2020), PIRLS or TIMSS (Rocher and Hastedt, 2020) have contributed greatly to making large-scale assessments an essential part of the public debate on schooling and of educational policy decisions. These days, very few papers or discussions about education do not refer to international assessments.

Finally, since 2017, new comprehensive assessments have been put in place: these now apply to all students in grade 1, grade 2, grade 6 and grade 10, as well as those studying for the CAP in secondary school. The introduction of these assessments, which cover more than three million students at the start of each school year, has obviously contributed to the growth and visibility of such systems.

Thus, from the point of view of the producers of indicators in the field of students’ competences, it has become essential to adopt a suitable methodological corpus making it possible to establish reliable measurements across time and space. However, the indicators produced feed different types of uses, making the optimal configuration of a skills assessment system more complex.

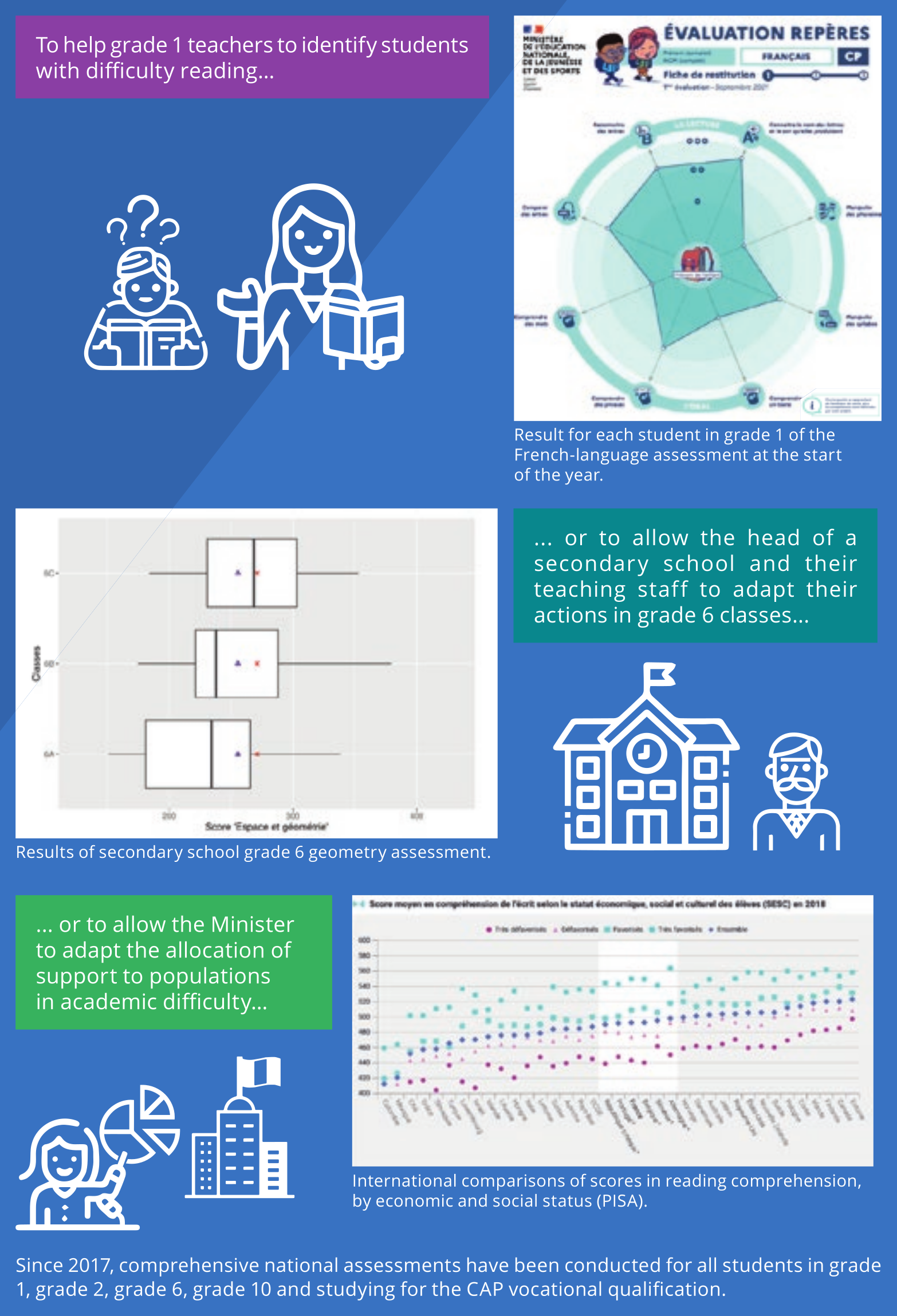

... adapted to different uses

Indeed, the uses of the results of standardised assessments of students’ skills have gone through various phases in their history, leading to a succession of different systems over forty years (Trosseille and Rocher, 2015). In particular, debates focus on the configuration of comprehensive national assessments, which concern all students at one or more school levels. The precise clarification of the objectives assigned to them is essential to the effectiveness of their implementation. By combining individual assessment (diagnosis of difficulty) and collective reporting (construction of statistical indicators), they meet different needs. This tension was already pointed out by Alfred Binet (Rozencwajg, 2011), with the distinction between a “clinical” approach and a statistical approach.

Today, generally speaking, three different purposes are identified:

- providing teachers with benchmarks of their students’ abilities, thus complementing their findings and enabling them to enrich their teaching practices. For example, at the beginning of grade 6, students take a reading fluency test (i.e. a test of reading speed), which is identical for all and calibrated in advance, making it possible to identify students likely to be at a disadvantage during their schooling;

- providing “local decision-makers” with indicators that allow them to better understand the results of schools and perform real regulation. For example, based on the results obtained from the national assessments, a local education officer can position their local education authority at the different levels of the students’ schooling (grade 1, grade 2, grade 6, grade 10), identify points of weakness and set up educational action systems;

- establishing indicators making it possible to measure the performance of the education system at national level, to assess any changes over time and to make international comparisons. For example, the cycle of disciplinary assessments conducted on a sample (cycle des évaluations disciplinaires réalisées sur échantillon – CEDRE) positions students relative to the expectations from school curricula, in a granular manner, which helps to inform reflection about possible adjustments to those curricula.

Comprehensive national assessments today, which are intentionally placed at the beginning of a school year, can both assist immediate educational action based on individual results, while also feeding into statistical tools for monitoring and guidance, particularly in respect of the results from the previous school level. At each level, stakeholders receive results that meet their specific needs (Figure 2).

Figure 2. Comprehensive national assessments provide indicators that are useful for various educational stakeholders

... and anchored in a robust methodological heritage

Regardless of the level at which they are used, the aim of assessment programmes is to establish an objective, scientific measurement of students’ abilities, which is as independent as possible of the conditions of observation, administration and marking. In this sense, these assessments are “standardised”.

These operations are at the intersection of two methodological traditions: that of psychometrics, for aspects relating to measuring psychological dimensions, in this case cognitive abilities; and that of statistical surveys, for aspects relating to the data collection procedures.

While the latter tradition is widely shared in the field of official statistics, the tradition of psychometrics is much less known. However, the statistical models proposed in this field are very old (see the factor analyses of Spearman in 1905) and still give rise to abundant literature at international level. The dissemination of this methodological corpus within the framework of national systems undoubtedly owes a great deal to the influence of the major international assessments (Rocher, 2015b). Participating countries – and active contributors – have benefited from acculturation to these methods, enabling them to perform a technology transfer in their national assessment systems.

These two traditions – statistical surveys and psychometrics – are brought together and combined during the implementation of an assessment operation, which follows a specific process. Within the framework of the CEDRE (see above), this process is even the subject of external certification (see the AFNOR website) based on a reference framework of service commitments made at each stage, thereby conferring upon the programme qualities of reproducibility and transparency.

The process takes place in six major stages, from the definition of the “construct” that is being measured to the production of checked and adjusted results.

What “construct” is being targeted?

The “construct”, i.e. the concept targeted by the measurement operation, forms the basis of the process. The construct is defined precisely in a conceptual framework. This conceptual framework may be based on a training plan or may refer to a cognitive theory. For example, the CEDRE measures students’ competences in each subject; it is thus based on what is deemed to have been taught in school across all school curricula. A radically different illustration can be given by identifying learning difficulties, such as dyslexia. The tests will then be developed to detect a shortcoming in relation to very specific aspects.

It is essential that this framework be as precise as possible, given the multiple definitions that can be given to a single object. For example, what do competences in mathematics entail? International assessments give us two very different visions. First, the IEA’s TIMSS assessment focuses on the way in which the teaching of mathematics is structured, to create an assessment based on fairly widely shared domains in the various curricula (numbers, geometry, problem solving, etc.). Thus, the assessment will often focus on “intra-mathematical” aspects. In turn, the PISA international survey focuses on the concept of “literacy”, i.e. individuals’ ability to apply their knowledge in real-life situations. It is then a matter of being able to move from the real world to the world of mathematics, and vice versa. This choice of focus is therefore highly structuring for what follows, i.e. for the development of the instrument and for the interpretation of the results.

The conceptual framework also describes the structure of the object, or even the “universe of items”, i.e. the set of all items (an item being the smallest unit of measurement) that are supposed to measure the target dimension. It is this structuring that ultimately defines the object measured.

The design of the units of measurement or items

Once this framework is laid down, a group of designers – composed of teachers, inspectors and possibly researchers – is responsible for building a broad set of items. As the product of collective work, the items are debated until a consensus is reached.

The items are then subjected to experimentation, i.e. they are administered to one or more classes to estimate their difficulty and their minimum duration and to identify any reactions from the students.

The items that successfully make it through the experimentation stage are then tested by a sample of students representative of the target level (between 500 and 2,000 students per item). This experimental phase takes place one year before the main phase of the assessment in order to respect the timing of the assessment in the academic calendar.

The sampling of students

For large national or international surveys (Figure 1), the samples are composed of several thousand students (between 4,000 and 30,000 depending on the programmes and cycles). Typical survey issues arise, such as the definition of coverage, the sampling frames, the sample drawing methods, etc. These aspects are documented in detail in the technical reports (Bret et al., 2015; OECD, 2020), especially for large international surveys, which require the observance of many standards in this field: in particular, this involves maximising the rate of coverage of the target population and limiting exclusions (for example, territorial exclusions or those due to practical constraints).

Generally speaking, sampling is carried out in two stages, first drawing educational institutions (or classes directly) and then students. Finally, given that multiple samples can be drawn from the same databases, coordination of the sample drawing is handled carefully (Garcia et al., 2015).
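As an illustration of drawing institutions first and students second, here is a minimal sketch. It assumes simple random sampling without replacement at both stages and a made-up sampling frame. The function name `two_stage_sample` and all identifiers are hypothetical, and real surveys typically use more elaborate designs (stratification, unequal probabilities, weights) that are not shown here.

```python
import random

def two_stage_sample(schools, n_schools, n_students, seed=0):
    """Draw schools first, then students within each drawn school.

    `schools` maps a school identifier to its list of student identifiers.
    Both stages use simple random sampling without replacement, which is an
    assumption of this sketch, not a description of any actual survey design.
    """
    rng = random.Random(seed)
    drawn_schools = rng.sample(sorted(schools), n_schools)
    sample = {}
    for school in drawn_schools:
        students = schools[school]
        # Take every student when the school is smaller than the quota.
        k = min(n_students, len(students))
        sample[school] = rng.sample(students, k)
    return sample

# Hypothetical sampling frame: 20 schools with 30 to 49 students each.
frame = {
    f"school_{s:02d}": [f"s{s:02d}_{i:03d}" for i in range(30 + s)]
    for s in range(20)
}
sample = two_stage_sample(frame, n_schools=5, n_students=10)
```

Fixing the seed makes the draw reproducible, which is convenient when several samples drawn from the same frame have to be documented or coordinated.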

Administration of the assessments

In the context of sample-based surveys, the assessments are usually administered by personnel from outside the educational institution (for example: the tablet-based assessment of primary school students’ skills or the computer-based international TIMSS and PISA assessments in secondary schools and colleges).

For comprehensive national assessments in grade 1 and grade 2, the teachers administer the assessments. The instructions are extremely precise, but it is obvious, given that 45,000 teachers are involved, that a certain degree of variability in the administration conditions, which is difficult to quantify, affects the measurement error. For assessments in grade 6 and grade 10, however, the conditions are more favourable, thanks to the computer-based administration procedures, which ensure better standardisation.

Student involvement

As with any survey, it is important to consider the dispositions of the respondents. Standardised assessments remain low stakes for the students involved, even though they relate to increasing political challenges.

In the French education system, exam grades are of major importance. Therefore, when faced with an assessment that does not lead to a grade, it is worth considering the degree to which students are motivated. Based on CEDRE surveys, an experimental study showed that students were more invested when told in advance that the result would lead to a grade.

Marking the answers

The answers given by the students are then coded, either automatically (for example, in the case of multiple-choice questions) or by human markers (for example, in the case of complex written output). In the case of human marking, a process of multiple marking with adjudication is used. The aim is to neutralise the many marking biases that may appear and that have been documented for more than a century by docimology, the science of testing (Piéron, 1963).
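The text does not detail how adjudication is organised. The sketch below assumes one simple arrangement: two independent markers code each answer, and a third marker decides only when they disagree. The function names and the example codes are hypothetical.

```python
def adjudicate(mark_a, mark_b, third_marker):
    """Return the final code for one answer under double marking.

    If the two independent markers agree, their common code is kept.
    Otherwise a third marker (a callable, hypothetical here) decides.
    """
    if mark_a == mark_b:
        return mark_a
    return third_marker()

def agreement_rate(marks_a, marks_b):
    """Share of answers on which the two markers gave the same code."""
    same = sum(a == b for a, b in zip(marks_a, marks_b))
    return same / len(marks_a)

# Made-up codes given by two markers to five answers.
marks_a = [1, 0, 2, 1, 0]
marks_b = [1, 0, 1, 1, 0]

# The third marker is simulated by a constant decision for the example.
final = [adjudicate(a, b, third_marker=lambda: 2) for a, b in zip(marks_a, marks_b)]
rate = agreement_rate(marks_a, marks_b)
```

Tracking the agreement rate alongside the final codes gives a simple indication of how much the marking depends on who does it.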

Finally, psychometric analysis enables the identification of items that function poorly, for example, items that are not correlated with the other items that are supposed to measure the same dimension. This process follows a very empirical, case-by-case approach, which involves establishing the coherent set of items most in line with the conceptual framework.
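One common way of spotting such items is to correlate each item with the score on the remaining items (an item-rest correlation). The sketch below is only an illustration on made-up 0/1 responses. It is not presented as the procedure used in any particular programme, and the helper names are hypothetical.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equally long numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def item_rest_correlations(responses):
    """Correlate each item with the total score on the other items.

    `responses` is a list of rows, one per student, of 0/1 item scores.
    """
    n_items = len(responses[0])
    result = []
    for j in range(n_items):
        item = [row[j] for row in responses]
        rest = [sum(row) - row[j] for row in responses]
        result.append(pearson(item, rest))
    return result

# Made-up data: 6 students, 4 items; the last item behaves differently.
responses = [
    [1, 1, 1, 0],
    [1, 1, 0, 1],
    [1, 0, 0, 0],
    [0, 0, 0, 1],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]
correlations = item_rest_correlations(responses)
weakest_item = min(range(len(correlations)), key=lambda j: correlations[j])
```

Removing the item itself from the total avoids inflating the correlation, which matters most when the test contains few items.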

As an illustration, the CEDRE Sciences operation conducted an experiment in 2017 involving around 400 items, retaining 262 of them for the final assessment in 2018, 43 of which were identical to those used in the 2007 survey and 31 of which were identical to those used in the 2013 survey, so as to ensure comparisons over time (Bret et al., 2015).

Some psychometric concepts around the notion of a latent variable

The elements that have just been presented are potentially also present in other areas covered by conventional statistical surveys. As we indicated in the introduction, the specific nature of the competences assessment programmes is more particularly related to the object being measured, the materiality of which is only revealed through the measuring instrument. It is thus agreed that the instrument makes it possible to observe performances, which are concrete manifestations of skill, a variable which is not directly accessible to us: this notion of a latent variable is central in psychometrics.

In order to provide an educational illustration of the broad notions of psychometrics, a classic example focuses on the size of individuals, i.e. their height (Figure 3). The situation is as follows: suppose we have no way of directly measuring the size of individuals in a given sample. However, we are able to offer a questionnaire, made up of questions with binary answers (yes/no) that do not directly reflect size. We thus artificially place ourselves in the situation of measuring a latent variable which we seek to grasp using a questionnaire, a measurement system that is apparently comparable to a standardised assessment.

Figure 3. An illustration of the major concepts of psychometrics

This questionnaire allows for a concrete illustration of important concepts of psychometrics:

- validity: does the test actually measure what it is supposed to measure? In this case, the actual size of the individuals is strongly correlated with a score calculated on the basis of the 24 items. The score obtained therefore does represent the target latent variable;

- the dimensionality of a set of items: the calculation of a score assumes that the items measure the same dimension and that the test is one-dimensional. However, it is clear that the items presented here do not measure solely the size dimension: each one touches on a multitude of dimensions. The idea is that a common preponderant factor links these items, a factor related to size. Different techniques exist to determine whether or not a test can be considered one-dimensional.

Moving from the measurement units to the measurement scale

When it comes to building the measurement scale, other concepts are used and can also be illustrated through our questionnaire on size:

- differential item functioning: as an illustration, in response to the statement “When there are two of us using an umbrella, I am often the one who holds it”, 89% of men answer yes, compared with 52% of women, which is a difference of 37 points, while on average across all items, the difference between men and women is only 20 points. The question is deemed to be gender “biased”: the answer given depends on a group characteristic and not only on size. The study of differential item functioning is fundamental for comparisons over time or international comparisons, in order to know whether factors other than skill level contribute to success;

- the discriminating power of the items or the item-test correlation makes it possible to verify whether or not an item actually measures the dimension it is supposed to measure. For example, the item “In bed, I often have cold feet”, taken from a similar questionnaire in the Netherlands, is not correlated with the other items in the French sample. Thus, this item does not measure the size dimension in France but instead a different non-correlated dimension, such as sensitivity to the cold, etc.;

- the measurement scale: the questionnaire does not make it possible to determine the size of individuals, but simply to classify them according to a variable correlated with size, in this case a score obtained for the 24 items. It is thus possible to apply linear transformations to this score, which do not alter the ratios between the score intervals separating individuals. Typically, the scores can be standardised with a mean of 0 and a standard deviation of 1, but most often they are transformed to higher values (a mean of 250 and a standard deviation of 50 in CEDRE or a mean of 500 and a standard deviation of 100 in PISA) in order to avoid negative values.
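Two of these notions lend themselves to a short numerical sketch. The first function rescales made-up raw scores to a chosen mean and standard deviation, here the 250 and 50 mentioned for CEDRE. The second reproduces the umbrella arithmetic by comparing the gap on one item with the average gap. Both function names are hypothetical, and the gap comparison is only a descriptive screen, not a formal test of differential item functioning.

```python
from statistics import mean, pstdev

def rescale(scores, target_mean, target_sd):
    """Linearly transform scores to a given mean and standard deviation."""
    m, s = mean(scores), pstdev(scores)
    return [target_mean + target_sd * (x - m) / s for x in scores]

def gap_excess(rate_group_a, rate_group_b, average_gap):
    """Gap on one item, in percentage points, beyond the average gap."""
    return (rate_group_a - rate_group_b) - average_gap

# Made-up raw scores out of 24 items.
raw = [8, 11, 12, 14, 15, 18, 20]
scaled = rescale(raw, target_mean=250, target_sd=50)

# Umbrella item from the text: 89% versus 52%, average gap of 20 points.
excess = gap_excess(89, 52, 20)
```

Because the transformation is linear, the ranking of individuals and the ratios between score intervals are unchanged; only the unit and the origin of the scale move.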

A need for modelling to link observations to the latent variable

Viewing the results of an assessment as the outcome of a process of measuring a latent variable is not self-evident. Indeed, calculating scores in an assessment may seem trivial: counting the number of correct answers appears to be an appropriate indicator of skill level, and it is quite possible to consider only the number of points, giving this statistic no more meaning than that of a score observed on a test.

However, this approach is highly unsatisfactory from a theoretical point of view and quickly comes up against practical limits, as it cannot easily distinguish between what relates to the difficulty of the test and what relates to the students’ skill levels. In particular, to ensure comparability between different populations or between different tests, modelling at the level of the items themselves, rather than at the level of the aggregate score, has proved necessary. Item response models (or IRMs), created in the 1960s, are the norm in the field of large-scale standardised assessments (Box 1).

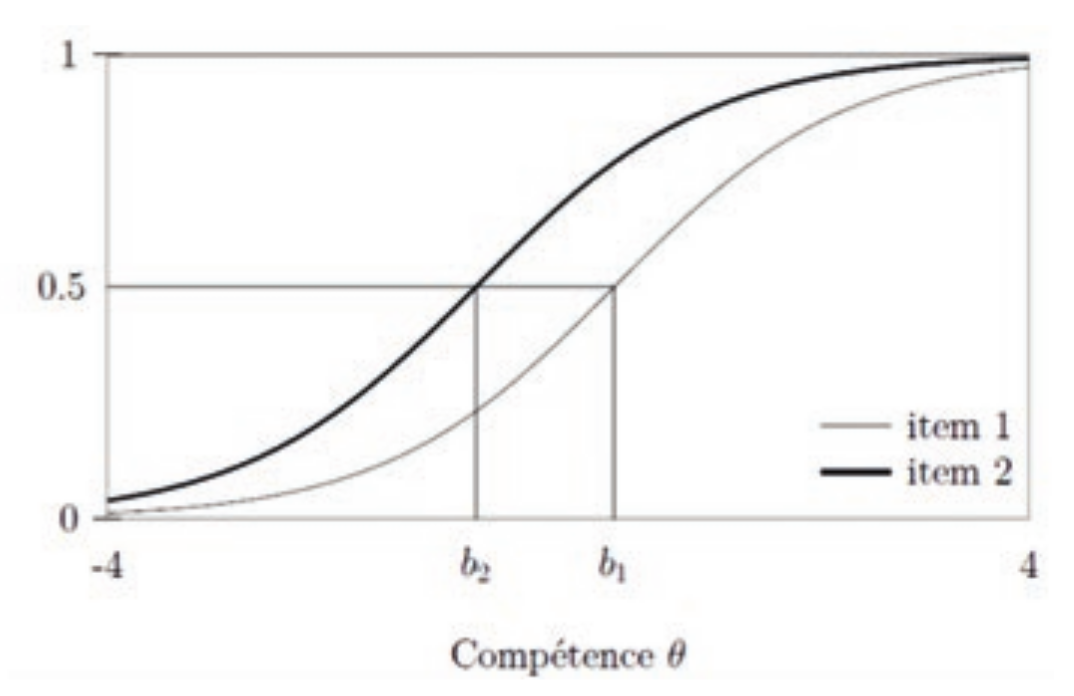

Box 1. Probabilistic models to separate the skill level from the difficulty of the item

For the same measurement object, the questions (items) that make up the measurement instrument can differ. Therefore, working on an aggregated score quickly comes up against limits and it is preferable to base the analysis on the most basic element, i.e. the item.

The item response models (IRMs), which were created in the 1960s, are a class of probabilistic models. They model the probability that a student will give a certain answer to an item, as a function of parameters concerning the student and the item.

In the simplest model, proposed by Danish mathematician Georg Rasch in 1960, the probability Pij of the student i successfully completing item j is a sigmoid function of the competency level θi of the student i and of the level of difficulty bj of the item j. Since the sigmoid function is an increasing function (see Figure), it appears that the probability of success increases when the student’s competency level increases and that it decreases when the level of difficulty of the item increases, which clearly reflects the expected relationships between success, difficulty and skill level.
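For reference, the Rasch model is usually written as follows in its standard form, with the sigmoid taken to be the logistic function of the difference between competency and difficulty. The notation $X_{ij}$ for the observed answer (1 for success, 0 for failure) is introduced here for illustration.

```latex
P_{ij} = P(X_{ij} = 1 \mid \theta_i, b_j)
       = \frac{e^{\theta_i - b_j}}{1 + e^{\theta_i - b_j}}
       = \frac{1}{1 + e^{-(\theta_i - b_j)}}
```

Only the difference $\theta_i - b_j$ matters: when $\theta_i = b_j$ the exponent is zero and $P_{ij} = 1/2$.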

The advantage of this type of modelling, which explains its success, is the separation of two key concepts, namely the difficulty of the item and the student’s competency level.

Thus, IRMs have a practical advantage for the creation of tests: if the model is well specified for a given sample, the parameters of the items can be considered fixed and applicable to other samples, from which it will be possible to infer the parameters relating to the students, in this case their skill level.
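To make this concrete, the sketch below estimates one student's skill level by maximum likelihood under the Rasch model, with item difficulties held fixed. It uses Newton-Raphson iterations. The difficulty values are made up, the function names are hypothetical, and operational programmes generally rely on more elaborate estimators than this one.

```python
from math import exp

def rasch_p(theta, b):
    """Probability of success under the Rasch model (logistic form)."""
    return 1.0 / (1.0 + exp(-(theta - b)))

def estimate_ability(answers, difficulties, n_iter=50, tol=1e-10):
    """Maximum-likelihood estimate of theta with item difficulties held fixed.

    Only defined when the student has at least one success and one failure.
    """
    if sum(answers) in (0, len(answers)):
        raise ValueError("no finite estimate for all-correct or all-wrong patterns")
    theta = 0.0
    for _ in range(n_iter):
        probs = [rasch_p(theta, b) for b in difficulties]
        # First derivative of the log-likelihood: observed minus expected score.
        gradient = sum(x - p for x, p in zip(answers, probs))
        # Minus the second derivative (the test information at theta).
        information = sum(p * (1 - p) for p in probs)
        step = gradient / information
        theta += step
        if abs(step) < tol:
            break
    return theta

# Hypothetical item difficulties, assumed already calibrated on another sample.
difficulties = [-1.5, -0.5, 0.0, 0.5, 1.5]
theta_3 = estimate_ability([1, 1, 1, 0, 0], difficulties)
theta_4 = estimate_ability([1, 1, 1, 1, 0], difficulties)
```

At the estimate, the expected number of successes equals the observed raw score, which is a convenient check on the result.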

Another advantage is that the students’ competency level and the difficulty of the items are placed on the same scale. This property makes it possible to interpret the level of difficulty of the items by relating it to the competency continuum. Thus, students at a skill level equal to bj will have a 50% chance of successfully completing the item, which is visually reflected by the representation of the characteristic curves of the items according to this model.

Reading note: the probability of successfully completing the item (vertical axis) depends on the competency level (horizontal axis). By definition, the difficulty parameter of an item corresponds to the skill level at which a student has a 50% chance of successfully completing the item. Thus, item 1 shown via the thin line is easier than item 2 shown via the thick line: the probability of successfully completing it is higher, regardless of competency level.
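The behaviour of such characteristic curves can be checked numerically. The sketch below tabulates the Rasch success probability for two hypothetical items, one easy (difficulty -1) and one hard (difficulty +1). These values are assumptions chosen for illustration and are not read off the figure.

```python
from math import exp

def success_probability(theta, b):
    """Rasch item characteristic curve evaluated at competency theta."""
    return 1.0 / (1.0 + exp(-(theta - b)))

# Hypothetical difficulties for an easy and a hard item.
b_easy, b_hard = -1.0, 1.0
abilities = [-3, -2, -1, 0, 1, 2, 3]

curve_easy = [success_probability(t, b_easy) for t in abilities]
curve_hard = [success_probability(t, b_hard) for t in abilities]

for t, pe, ph in zip(abilities, curve_easy, curve_hard):
    print(f"theta={t:+d}  easy item: {pe:.2f}  hard item: {ph:.2f}")
```

Each curve crosses 0.5 exactly where the competency level equals the item's difficulty, and at every competency level the easier item has the higher probability of success.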

These models make it possible to link the answers to the items and the target latent variable in a probabilistic manner. They are very useful when it comes to comparing the skill levels of different groups of students. This issue relates to the notion of metric adjustment (equating). It is a case of positioning students from different cohorts on the same skill scale, based on their results observed in partially different assessments. There are many existing techniques that are commonly used in standardised assessment programmes (Kolen and Brennan, 2004). Typically, comparisons are established on the basis of common items, re-used identically from one measurement time to another. The item response models then provide an appropriate framework, in so far as they make a distinction between the parameters of the items, which are considered fixed, and the parameters of the students, which are considered variable.
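Among the many equating techniques, one of the simplest for the Rasch model is mean-mean linking on common items: the two calibrations are assumed to differ only by a shift, estimated from the average difficulty of the shared items. The sketch below uses made-up difficulty values and hypothetical names. It is not presented as the method used in any particular programme.

```python
from statistics import mean

def linking_shift(b_reference, b_new, common_items):
    """Shift to add to new-scale values to express them on the reference scale.

    Uses mean-mean linking: the common items are assumed to have the same
    average difficulty on both scales once the scales are aligned.
    """
    ref = mean(b_reference[i] for i in common_items)
    new = mean(b_new[i] for i in common_items)
    return ref - new

# Made-up difficulties from two separate calibrations (two survey waves).
b_wave_1 = {"A": -1.0, "B": 0.0, "C": 1.0, "D": 0.4}
b_wave_2 = {"B": 0.3, "C": 1.3, "D": 0.7, "E": -0.2}

common = sorted(set(b_wave_1) & set(b_wave_2))
shift = linking_shift(b_wave_1, b_wave_2, common)

# Student skill levels estimated in wave 2, moved onto the wave 1 scale.
thetas_wave_2 = [-0.5, 0.3, 1.1]
thetas_on_wave_1_scale = [t + shift for t in thetas_wave_2]
```

In this example the common items look 0.3 harder in the second calibration, so every wave 2 value is lowered by 0.3 before cohorts are compared.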

How useful is this for Official Statistics?

This statistical and psychometric apparatus enables the development of robust indicators of student ability levels and, above all, it enables comparisons across space and time. For example, the change in students’ skill levels over time can be addressed statistically through the identical re-use of so-called anchoring items. Re-using all the items of a previous survey unchanged is not necessarily relevant, in view of changes to school curricula, practices, cultural environment, etc. Some items need to be removed and others need to be added. Consequently, students from two different cohorts take partially different tests. How, then, can the comparability of the results be ensured? Simply counting the number of correct answers is no longer relevant, and the modelling presented here must be used. Using this approach, CEDRE surveys have enabled comparisons of students’ mathematics skill levels for over ten years; these comparisons show a worrying decline in results, in both primary and secondary school (Ninnin and Pastor, 2020; Ninnin and Salles, 2020).

For their part, international assessments use these methodologies to ensure the comparability of item difficulties from one country to another. Indeed, a strong assumption of these surveys is that the translation process does not change the difficulty of the item, and strict translation control procedures are in place. However, analyses show that the hierarchy of difficulty parameters for the questions asked is largely preserved between countries that share the same language, but that it can be distorted between two countries that do not. Item response models make it possible to identify these potential sources of comparability bias. A specific example is provided by the TIMSS surveys, in which dozens of countries participate and which have recently shown the concerning position of France in mathematics, in a manner complementary to and consistent with CEDRE (Colmant and Le Cam, 2020; Le Cam and Salles, 2020).
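One simple, purely descriptive way to look at whether the hierarchy of item difficulties is preserved between two countries is a rank correlation of their difficulty parameters. The sketch below is an assumption about how such a check could be carried out, not the analysis performed in the surveys cited; the difficulty values are hypothetical and the formula used is only valid when there are no tied values.

```python
def ranks(values):
    """Rank values from 1 (smallest) upward; assumes there are no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result


def spearman(x, y):
    """Spearman rank correlation (closed-form formula, valid without ties)."""
    n = len(x)
    d2 = sum((rx - ry) ** 2 for rx, ry in zip(ranks(x), ranks(y)))
    return 1 - 6 * d2 / (n * (n**2 - 1))


# Hypothetical difficulty estimates for the same five items in three countries.
country_a = [-1.0, -0.3, 0.2, 0.9, 1.6]
country_b = [-0.9, -0.4, 0.3, 0.8, 1.7]  # same language as A: same ordering
country_c = [-0.2, -1.1, 0.9, 0.1, 1.5]  # different language: ordering disturbed

rho_ab = spearman(country_a, country_b)
rho_ac = spearman(country_a, country_c)
```

A correlation close to 1 indicates that the items are ordered in the same way in both countries; a lower value points to items whose relative difficulty may have been affected, for instance by translation, and which deserve closer inspection.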

In perspective: assessing cross-curricular competences...

Assessment programmes are facing new possibilities, linked with demand for more complex skills assessment and the rise of digital technologies.

Thus, in addition to the methodological difficulties discussed above, there are new challenges posed by growing demand (partly from the economic world) for the measurement of dimensions that are far more complex than traditional academic skills. People sometimes speak of cross-curricular competences, of soft skills, 21st century skills, socio-cognitive skills, etc. (Box 2).

Assessing these dimensions is a real challenge. Indeed, the definition of these skills (framework) is not always very strong or agreed upon. Furthermore, they are not always taught explicitly, which calls into question the significance of the assessment results. Finally, their structure is complex: they usually involve cognitive dimensions, as well as attitudes, dispositions, etc. For example, assessing critical thinking may involve multiple intertwined dimensions, such as comprehension, metacognitive components, curiosity, etc. Even if each component is potentially assessable, their juxtaposition prevents identification of a degree of critical thinking. More “holistic” systems must be imagined.

... and incorporating the digital revolution

The digital revolution is driving profound transformations, including in the field of assessing students’ competences. In 2015, standardised assessment programmes began their shift to a digital format. Today, at secondary education level, assessments are all carried out on a computer and involve almost two million students each year. In primary education, the situation is more complicated, due to poorly suited equipment.

The measurement process is not distorted in respect of its principles, but technological change brings new challenges. Thus:

- switching from paper/pencil to digital poses questions regarding comparability and possible series breaks;

- students’ ability to use these new tools, and even their familiarity with these new environments, is not well known;

- using digital technologies brings privacy or security issues.

Conversely, digital technologies offer very interesting features in the area of assessment (multimedia, accessibility, etc.), more sophisticated techniques (such as the possibility of introducing adaptive processes), interactive situations for more engaging, game-based experiences, etc.

Finally, in the field of statistical analysis, these systems allow much more data to be collected, by recording the students’ actions (the students’ “tracks” or “process data”) (Box 3). These approaches are already enriching analyses and they will be very useful both for detailed individual feedback and for more precise statistics on students’ competency levels.

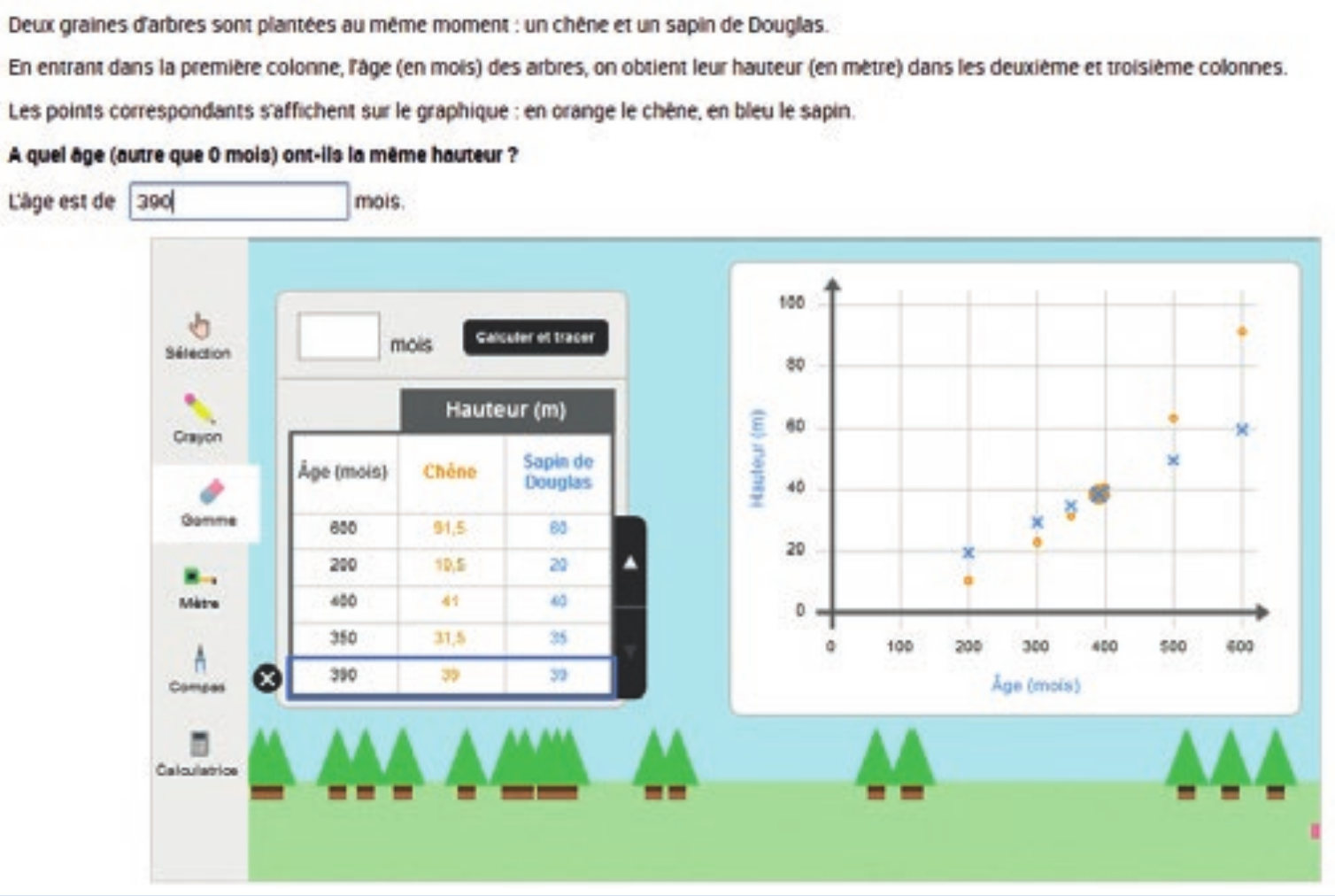

Box 3. Analysing students’ digital tracks

In this interactive item, students must complete a series of tests to determine the intersection point between two functions, by entering values in a table, which are plotted automatically on a graph. Students can use different digital tools (pencil, eraser, etc.). Based on the tracks left by their various actions, an analysis based on data science techniques makes it possible to identify the relevant cognitive profiles (Salles et al., 2020). It is important to note that this study is not data driven* – an approach that is often doomed to fail in this field – but has relied on a theoretical educational framework that guided the analysis process.

Sources: Assessment of the common base of skills at the end of year 10.

*“Data-driven management” would involve contextualising or customising the tool to the student according to their characteristics.

Published on: 19/02/2024

The debates generated by media coverage of the results of the OECD PISA (Programme for International Student Assessment) surveys are the most obvious example of this.

Alfred Binet (1857–1911) was a French educator and psychologist. He is known for his crucial contribution to psychometrics.

“The sociology of quantification can be presented as perpetually stretched out between two conceptions of statistical operations, a “metrological realist” conception (the object exists before its measurement) and a “conventionalist” conception (the object is created by the conventions of quantification: for example, poverty rate, unemployment, intellectual quotient or public opinion).” (Desrosières, 2008).

The DEP was more recently changed to the Directorate of Evaluation, Forecasting and Performance Monitoring (direction de l’évaluation, de la prospective et de la performance – DEPP), which is the Ministerial Statistical Office.

The Organic Law on Finance Laws (loi organique relative aux lois de finances – LOLF) established the framework for French financial laws. Enacted in 2001, it has applied to the entire administration since 2006 and aims to modernise the way in which the State is managed. It has promoted the development of tools to assess results, across all areas of administrative action.

Programme for International Student Assessment, conducted by the Organization for Economic Co-operation and Development (OECD).

Progress in International Reading Literacy by the IEA (International Association for the Evaluation of Educational Achievement) based in the Netherlands.

Trends in International Mathematics and Science Study (TIMSS) is an international survey of academic abilities in mathematics and science conducted by the IEA.

The certificat d’aptitude professionnelle is a French diploma in secondary education and vocational studies.

Local education officers, academic directors of the services of the National Education System (directeurs académiques des services de l’Éducation nationale – DASEN, formerly Academy Inspectors) or National Education System inspectors (inspecteurs de l’Éducation nationale – IEN).

For work in French on test theory, see (Laveault and Grégoire, 2002).

This is called “usability” in computer ergonomics, for example.

Further reading

BAUDELOT, Christian and ESTABLET, Roger, 1989. Le niveau monte. Éditions du Seuil. ISBN 2-02-010385-0.

BRET, Anaïs, GARCIA, Émilie, ROCHER, Thierry, ROUSSEL, Léa and VOURC’H, Ronan, 2015. Rapport technique de CEDRE, Cycle des Évaluations Disciplinaires Réalisées sur Échantillons. Sciences expérimentales 2013, Collège. [online]. February 2015. MENESR-DEPP. [Accessed 18 November 2021].

COLMANT, Marc and LE CAM, Marion, 2020. TIMSS 2019 – Évaluation internationale des élèves de CM1 en mathématiques et en sciences : les résultats de la France toujours en retrait. [online]. December 2020. MENESR-DEPP. Note d’information n°20.46. [Accessed 18 November 2021].

DESROSIÈRES, Alain, 2008. Gouverner par les nombres, L’argument statistique II. [online]. Presses des Mines. [Accessed 18 November 2021].

EVAIN, Franck, 2020. Indicateurs de valeur ajoutée des lycées : du pilotage interne à la diffusion grand public. In: Courrier des statistiques. [online]. 31 December 2020. Insee. N°N5, pp. 74-94. [Accessed 18 November 2021].

FALISSARD, Bruno, 2008. Mesurer la subjectivité en santé – Perspective méthodologique et statistique. 2nd edition. Éditions Elsevier-Masson, Issy-les-Moulineaux. ISBN 978-2-294-70317-1.

GARCIA, Émilie, LE CAM, Marion, ROCHER, Thierry et al., 2015. Méthodes de sondage utilisées dans les programmes d’évaluation des élèves. In: Éducation & Formations. [online]. May 2015. MENESR-DEPP. N°86-87, pp. 101-117. [Accessed 18 November 2021].

GOLDBERG, Milton and HARVEY, James, 1983. A Nation at Risk: The Report of the National Commission on Excellence in Education. In: The Phi Delta Kappan. [online]. Vol. 65, n°1, pp. 14-18. [Accessed 18 November 2021].

GOULD, Stephen Jay, 1997. La mal-mesure de l’homme. Éditions Odile Jacob. ISBN 978-2-7381-0508-0.

KOLEN, Michael J. and BRENNAN, Robert L., 2004. Test Equating, Linking, and Scaling: Methods and practices. 3rd edition. Éditions Springer-Verlag, New York. ISBN 978-1-4939-0317-7.

LAVEAULT, Dany and GRÉGOIRE, Jacques, 2002. Introduction aux théories des tests en psychologie et en sciences de l’éducation. 3rd edition, January 2014. Éditions De Boeck, Bruxelles. ISBN 978-2-804170752.

LE CAM, Marion and SALLES, Franck, 2020. TIMSS 2019 – Mathématiques au niveau de la classe de quatrième : des résultats inquiétants en France. [online]. December 2020. MENESR-DEPP. Note d’information n°20.47. [Accessed 18 November 2021].

NINNIN, Louis-Marie and PASTOR, Jean-Marc, 2020. Cedre 2008-2014-2019. Mathématiques en fin d’école : des résultats en baisse. [online]. September 2020. MENESR-DEPP. Note d’information n°20.33. [Accessed 18 November 2021].

NINNIN, Louis-Marie and SALLES, Franck, 2020. Cedre 2008-2014-2019. Mathématiques en fin de collège : des résultats en baisse. [online]. September 2020. MENESR-DEPP. Note d’information n°20.34. [Accessed 18 November 2021].

OECD, 2020. PISA 2018. Technical Report. [online]. [Accessed 18 November 2021].

PIÉRON, Henry, 1963. Examens et docimologie. 1st January 1963. Presses universitaires de France.

ROCHER, Thierry and HASTEDT, Dirk, 2020. International large-scale assessments in education: a brief guide. [online]. September 2020. IEA Compass: Briefs in Education, Amsterdam, n°10. [Accessed 18 November 2021].

ROCHER, Thierry, 2015a. Mesure des compétences : méthodes psychométriques utilisées dans le cadre des évaluations des élèves. In: Éducation et Formations. [online]. May 2015. MENESR-DEPP. N°86-87, pp. 37-60. [Accessed 18 November 2021].

ROCHER, Thierry, 2015b. PISA, une belle enquête : lire attentivement la notice. In: Administration et Éducation. [online]. Association Française des Acteurs de l’Éducation. N°145, pp. 25-30. [Accessed 18 November 2021].

ROZENCWAJG, Paulette, 2011. La mesure du fonctionnement cognitif chez Binet. In: Bulletin de psychologie. [online]. 2011/3, N°513, pp. 251-260. [Accessed 18 November 2021].

SALLES, Franck, DOS SANTOS, Reinaldo and KESKPAIK, Saskia, 2020. When didactics meet data science: process data analysis in large-scale mathematics assessment in France. [online]. 29 May 2020. IEA-ETS Research Institute Journal, Large-scale Assessments in Education, 8:7. [Accessed 18 November 2021].

THÉLOT, Claude, 1992. Que sait-on des connaissances des élèves ? October 1992. Les Dossiers d’Éducation et formations. N°17.

TROSSEILLE, Bruno and ROCHER, Thierry, 2015. Les évaluations standardisées des élèves. Perspective historique. In: Éducation et Formations. [online]. May 2015. MENESR-DEPP. N°86-87, pp. 15-35. [Accessed 18 November 2021].