Courrier des statistiques N7 - 2022

SSPCloud: a creative factory to support experimentations in the field of official statistics

The SSPCloud for the official statistical system is an environment for experimenting with new data science methods. It is a set of computer resources for creating prototypes, testing statistical processing and adopting new work practices. Inspired by the Fab Lab model, it provides the (im)material conditions to encourage statisticians’ creativity and help them make the most of new data sources. It is based on cloud computing technologies, which reinforce the autonomy – and the responsibility – of users in the orchestration of their processing.

Built around a community open to all public statisticians, SSPCloud is intended to be a learning workshop where statistical practice is reinvented collectively. Collaboration is facilitated by the adoption of open source solutions, which guarantee that work can be reused. SSPCloud offers a fertile mix of the two professional worlds of statistics and computer science, in particular to make progress in implementing processes that meet the reproducibility standards applied to data processing and research work.

- An overhaul of the production system for Official Statistics...

- ... made possible by new IT capabilities

- The relationship between statisticians and IT is evolving, with more powers... and responsibilities

- Think outside the box to better seize upon exploratory opportunities

- From the shadow to the light: emergence of a new infrastructure for the Official Statistical Service

- Box 1. “It’s more fun to be a pirate than to join the navy” (S. Jobs)

- A creative factory, open for self-service

- Technological choices in favour of scalability

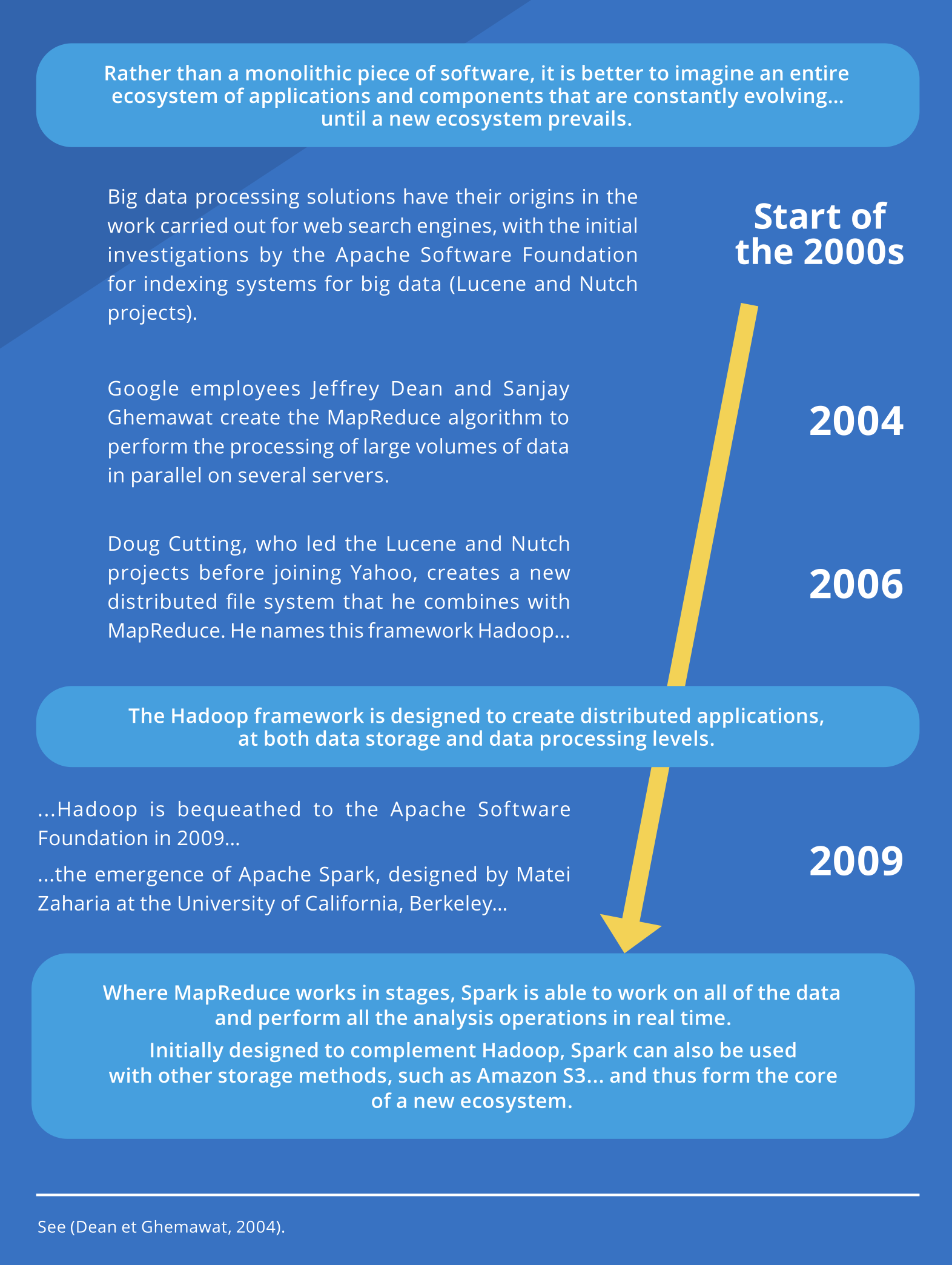

- Box 2. How the benchmark ecosystem may be dethroned in a few years

- An environment and resources accessible from anywhere

- Containerisation to manage execution conditions

- Box 3. Containerisation techniques increase the independence of data scientists

- Supporting statisticians’ IT practices

- Making everlasting what is no longer persistent

- Being autonomous in the orchestration of processing operations

- Making progress in the implementation of reproducible statistics

- Opening up and sharing of knowledge and know-how

- Benefit from sharing by choosing open source as a reference

- Build technical partnerships to inspire and be inspired

An overhaul of the production system for Official Statistics...

Following the conference entitled The European path towards Trusted Smart Statistics, dedicated to the emergence of a datafied society, the European Statistical System adopted the Bucharest Memorandum on 12 October 2018, setting out the principles of a major overhaul of the production system of Official Statistics in the European Union countries. More specifically, these principles aim to provide the European Statistical System with the capacities needed to take account of new data sources, computational paradigms and analytical tools in several dimensions, including the legal framework, technical skills and the IT solutions for implementation (Eurostat, 2018).

The notion of Trusted Smart Statistics thus encompasses a wide range of developments related to the proliferation of information sources about society in the broad sense, for which there are plenty of illustrations:

- understanding tension on the labour market by studying online job vacancies;

- accurately mapping the day-to-day, hour-by-hour movements of the population using mobile phone data;

- measuring energy vulnerability based on data from smart electricity and gas meters, etc.

These are examples of areas in which Official Statistics is called upon to invest in order to take advantage of information whose form and nature are very different from those of survey data (UNECE, 2013a; 2013b).

These investments also go hand in hand with innovations in statistical processes, so as to exploit the great potential of these new sources but also to cope with their complexity or imperfections, which require substantial processing to ensure statistical conformity. At the forefront of these innovations are so-called machine learning methods and their promising uses in coding and classification, data editing and imputation. In its concluding report, the Machine Learning Project set up by the United Nations Economic Commission for Europe (UNECE) highlighted the investments made to date in the Official Statistics community: “Many national and international statistical organisations are exploring how machine learning can be used to increase the relevance and quality of official statistics in an environment of growing demands for trusted information, rapidly developing and accessible technologies, and numerous competitors.” (UNECE, 2021).

... made possible by new IT capabilities

Big data sources, which are at the heart of Trusted Smart Statistics, have characteristics that make them particularly complex to process: their volume, their velocity (the speed at which they are created or renewed) and their variety (structured but also unstructured data, such as images). Information is thus considered big data when its characteristics are such that it cannot easily be collected, stored, processed or analysed with traditional IT equipment.

It is therefore necessary to establish a new type of IT infrastructure that makes it possible to anticipate, and even to inspire, innovative uses of Official Statistics driven by technological opportunities.

An IT infrastructure is made up of multiple strata, each of which is a key variable in the provision of the expected services: data storage capacities using different methods (file, object, database, etc.), processing power (RAM, CPU, GPU), processing services (software that makes it possible to execute languages such as R, Python, etc.), and the technical orchestration architectures that link these different strata together. For example, distributed computing is a method of data processing based on dividing a single problem into a multitude of smaller problems and solving them in parallel, either within a single data processing centre (by means of multithreading) or by spreading them across multiple interconnected data processing centres (together called a cluster). Implementing distributed computing effectively requires a set of software components, known as a framework, to manage access to the data, distribute them among processing nodes, perform all the necessary analysis operations and then deliver the results.
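The divide-and-solve principle can be illustrated with a minimal sketch. It assumes a toy problem (computing a mean) and uses Python’s standard `concurrent.futures` thread pool as a stand-in for a real distributed framework: the data are split into chunks, each chunk is processed in parallel, and the partial results are combined.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n_chunks):
    """Divide one problem (a list of values) into roughly equal sub-problems."""
    if not data:
        raise ValueError("cannot split an empty dataset")
    size = -(-len(data) // n_chunks)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_stats(chunk):
    """Solve one sub-problem: the count and sum of a chunk."""
    return len(chunk), sum(chunk)


def parallel_mean(data, n_workers=4):
    """Distribute the chunks over worker threads, then combine the partial results."""
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = list(pool.map(partial_stats, chunks))
    total_count = sum(count for count, _ in results)
    total_sum = sum(s for _, s in results)
    return total_sum / total_count


print(parallel_mean(list(range(1, 101))))  # 50.5
```

Each worker returns a (count, sum) pair rather than its own mean, because means of chunks of unequal size cannot simply be averaged. Distributed frameworks rely on the same idea of partial results that can be combined in any order.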

The relationship between statisticians and IT is evolving, with more powers... and responsibilities

These infrastructures are intended for data processing professionals who need to develop technical building blocks for their own purposes and assemble them into an integrated process (or pipeline). Among its multiple meanings, the term “data scientist” reflects the increased involvement of statisticians in the IT development and orchestration of their data processing operations, beyond merely the design or validation phases. The new data science infrastructures take this expanded role of their users into account, giving them more autonomy than conventional infrastructures.

These wider user rights within the IT infrastructure also reflect the growing importance of prototyping in the work of official statisticians, who switch back and forth between the design of a statistical processing operation and its implementation. Statistical processing operations have always been evolving processes that need to adapt to the data. However, this is all the more true for data which, unlike survey files, do not natively have the expected qualities in terms of concept stability and measurement accuracy. For example, mobile phone data may include changes in location information caused by triangulation methods based on the position of mobile phone antennas, whose layout changes over the years. Interpreting satellite or aerial images to establish statistical indicators, such as the urban footprint, relies on photographs whose quality depends on the season and weather conditions. Likewise, online job vacancies can be analysed to infer the skills required, but from free-text fields with heterogeneous content, since different recruiters describe the same job in different ways.

Moreover, this type of data calls for evolving statistical processing operations based on learning algorithms rather than deterministic rules. The algorithm’s behaviour is designed to evolve as it “learns” from the data it is given, and orchestrating it requires appropriately engineered services and technical building blocks within the IT infrastructure. Statisticians must therefore not only design the processing algorithm but also play a role in its implementation, working in IT fields hitherto covered by system administrators.
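To make the contrast with a fixed rule concrete, here is a toy learning-based coder for job titles: a minimal multinomial naive Bayes classifier written from scratch. It is an illustration only, not the method used at INSEE, and the class codes and training titles are invented. Each call to `learn` updates the counts, so the classifier’s behaviour changes as new data arrive.

```python
import math
from collections import Counter, defaultdict


class IncrementalTitleCoder:
    """Toy multinomial naive Bayes for coding free-text job titles (hypothetical codes)."""

    def __init__(self):
        self.class_counts = Counter()            # titles seen per code
        self.word_counts = defaultdict(Counter)  # word frequencies per code
        self.vocab = set()

    @staticmethod
    def tokens(title):
        return title.lower().split()

    def learn(self, title, code):
        """Update the model with one labelled title: no retraining from scratch."""
        words = self.tokens(title)
        self.class_counts[code] += 1
        self.word_counts[code].update(words)
        self.vocab.update(words)

    def predict(self, title):
        """Return the code with the highest posterior log-probability."""
        n_titles = sum(self.class_counts.values())
        v = len(self.vocab)
        best_code, best_score = None, -math.inf
        for code, n in self.class_counts.items():
            total = sum(self.word_counts[code].values())
            score = math.log(n / n_titles)
            for w in self.tokens(title):
                # Laplace smoothing: an unseen word does not rule a code out
                score += math.log((self.word_counts[code][w] + 1) / (total + v))
            if score > best_score:
                best_code, best_score = code, score
        return best_code


coder = IncrementalTitleCoder()
coder.learn("data scientist", "STAT")
coder.learn("survey statistician", "STAT")
coder.learn("truck driver", "TRANSPORT")
coder.learn("bus driver", "TRANSPORT")
print(coder.predict("senior data statistician"))  # STAT
print(coder.predict("delivery driver"))           # TRANSPORT
```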

Think outside the box to better seize upon exploratory opportunities

In addition to structural IT projects, the highly evolving nature of new data sources also calls for more flexible approaches carried out over short time frames. Based on trial and error, guesswork and intuition, these approaches favour seizing opportunities and exploring available possibilities over building the optimal (but time-consuming) solution for a pre-defined objective. Such an exploratory paradigm relies on experimentation: quickly developing a minimal prototype to establish an empirical POC (proof of concept), without necessarily dealing with every facet of a subject.

However, experiments may struggle to access the resources they need, since risk-averse organisations quite naturally restrict access to costly resources to projects whose preliminary studies provide all the guarantees sought by decision-making bodies. The rise of “laboratories” within large public and private organisations is one response that counterbalances the predominant role of structural, long-term projects by providing creative spaces in which prototyping and experimentation can flourish. The main characteristic of a laboratory, or “lab”, is that it offers “another way of inventing” compared with the prevailing R&D process. To this end, it lies, at least in part, outside the organisation’s usual operating rules and therefore outside its conventional decision-making procedures.

From the shadow to the light: emergence of a new infrastructure for the Official Statistical Service

Designed to meet the needs of data scientists, SSPCloud (Figure 1) is a new infrastructure set up by INSEE to offer, on the IT side, a “lab” environment conducive to applied experiments on new data sources and new processing methods. These include, in particular, distributed computing for big data, such as mobile phone data used to calculate present populations at fine geographical scales (Suarez Castillo et al., 2020), and learning methods, such as systems for automatically coding job titles into classifications.

Figure 1. To take a tour of SSPCloud

First created “outside the organisation” (Box 1), SSPCloud’s origins can be traced back to 2017, when an INSEE team took part in the New Techniques and Technologies for Statistics (NTTS) hackathon. This acted as a trigger: the INSEE team managed to offer big data processing that incorporated results on the fly, in the form of data visualisation, but encountered significant technical limitations on the available infrastructures. Pushing back these technical limitations as far as possible by delivering new IT data services would become the common theme linking the work undertaken by a group of engineers from the Information System Directorate. A few months later, the availability of IT equipment that was still performant but had been withdrawn from its initial use led to the creation of the first functional prototype of a data science lab platform, which then evolved to become SSPCloud.

The system continued to evolve and expand, receiving multiple forms of internal and external institutional support, and was opened up to the entire Official Statistics system in October 2020. Highlighting this commitment to openness while referring to its underlying technological paradigm, the name “SSPCloud” was chosen for this new service shared between INSEE and the Ministerial Statistical Offices.

Box 1. “It’s more fun to be a pirate than to join the navy” (S. Jobs)

IT and innovation in the shadows

These words by Steve Jobs help provide an understanding of one of the motivations for the emergence of “secret” innovation within an organisation. In examining the motivations behind innovation in companies and administrations, Donald A. Schon introduced the concept of bootlegging (Schon, 1963). Bootlegging is defined as research in which motivated individuals secretly organise an innovation process, without official permission from management, but for the benefit of the company. In the area of information systems, the same phenomenon has been observed with Shadow IT – a term referring to information systems that are created and implemented within organisations without approval from the managers of the information system.

Recognising the creative potential of employees and the need to make room for it, large organisations now aim to support “hidden” innovation by providing technical resources that can be used freely, without having to account for their use (Robinson and Stern, 1997). Bringing this “innovation in the shadows” out into the light makes it possible to take advantage of the creative spirit of personnel in a more horizontal manner.

A creative factory, open for self-service

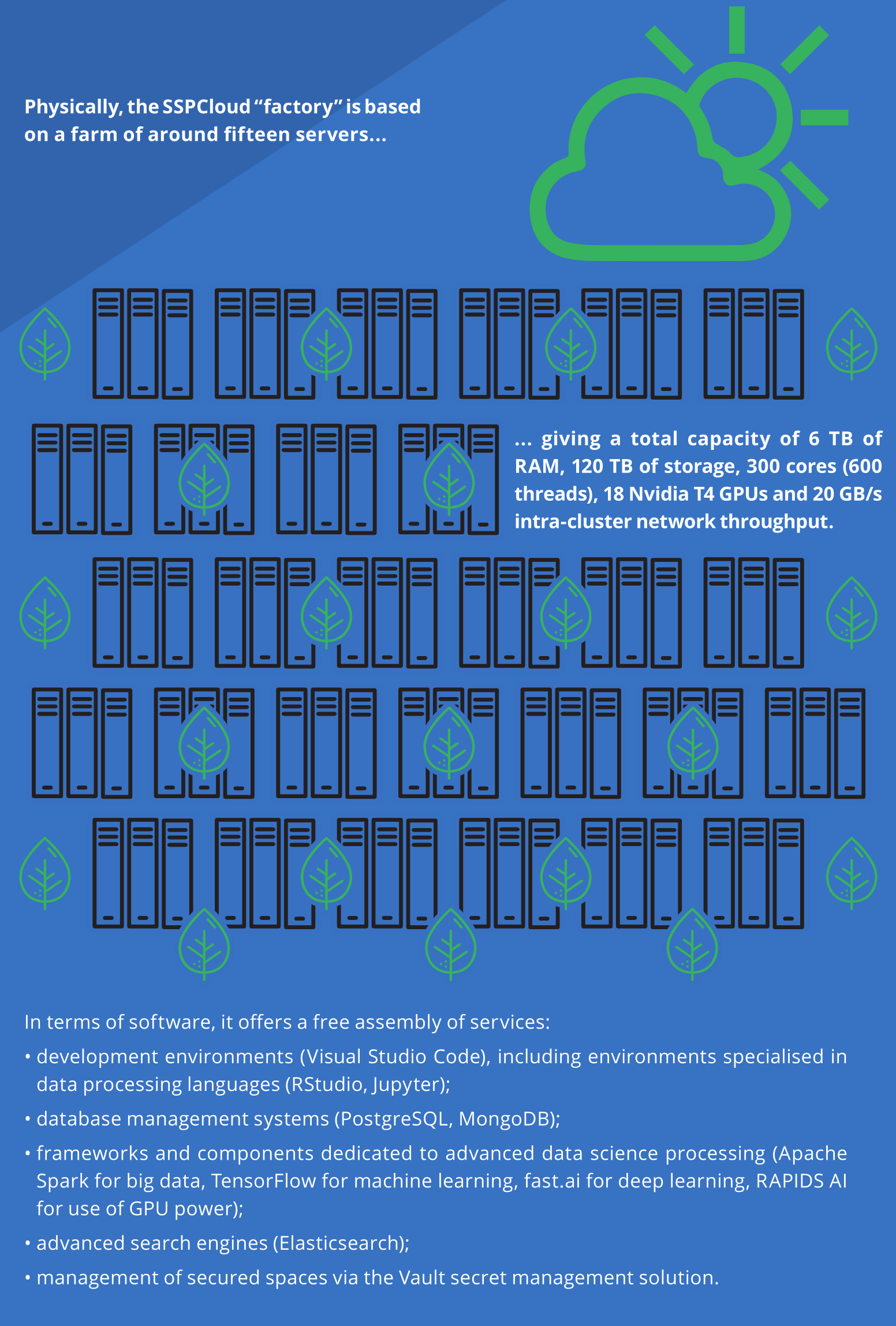

SSPCloud is presented as a “creative factory”, or FabLab, for Official Statistics. As it is open for self-service, it provides direct access to all the hardware (such as GPUs) and software (such as frameworks and technical building blocks) that may be useful for data science (Figure 2). In SSPCloud, official statisticians have the technical components they need to prototype and test a data processing chain end to end.

Figure 2. SSPCloud as seen from the inside: in the mechanics of the cloud

Access to these resources is immediate: there is no need, for example, to request the provision of a storage space or a sandbox environment, as the whole SSPCloud architecture allows users to consume the items they need on a self-service basis.

Actions carried out on SSPCloud are not subject to regulation or monitoring: users are able to freely choose what they use without being constrained by a “deny list based reference framework” that would restrict the range of possibilities.

To remain a creative factory, SSPCloud is also constantly evolving, with new software being installed and continuously updated. Users can help expand the service catalogue and introduce new technical building blocks from which their peers can benefit. SSPCloud thus places statisticians at the heart of the design and development of their future statistical processes.

Technological choices in favour of scalability

In its technological choices, SSPCloud relies on an architecture shaped by several influences in contemporary IT.

The ability to scale processing operations (hence the term scalability) is a key expectation of data scientists, not only to enable them to store and manipulate big data, but also to ensure they have computational resources adapted to machine learning methods.

Addressing this issue requires distributing data processing across multiple data processing centres, for example in a server farm, an approach at the heart of cloud computing. The service provider, also known as a cloud provider, supplies physical resources (CPUs, memory, drives and GPUs) on demand from remote servers shared between multiple clients; pooling large sets of servers in this way allows the service to be scaled up or down as needed.

To be implemented, this approach also requires appropriate orchestration of the data processing operations, which was the particular aim of the Hadoop framework. The main idea is to co-locate processing and data: if the source file is distributed across multiple servers (horizontal approach), each section of the file is processed directly on the machine hosting it, so as to avoid network transfers between servers.
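The co-location principle can be sketched in plain Python, assuming a hypothetical three-node cluster in which each node hosts one section of a file of receipt-like lines. Counting is done section by section, and only the small per-node summaries are combined: this is the map-and-reduce pattern popularised by Hadoop. The sketch runs in a single process and ignores what real frameworks also handle (scheduling, fault tolerance, data transfer).

```python
from collections import Counter

# Hypothetical cluster: each node hosts one section (partition) of the source file.
cluster = {
    "node-1": ["bread milk", "milk eggs"],
    "node-2": ["bread bread", "eggs"],
    "node-3": ["milk"],
}


def local_count(lines):
    """Map step: in a real cluster this runs on the node storing the lines,
    so only a small Counter, not the raw data, travels over the network."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


def merge_counts(partials):
    """Reduce step: combine the per-node summaries."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


def run_job(cluster):
    partials = [local_count(lines) for lines in cluster.values()]
    return merge_counts(partials)


print(dict(run_job(cluster)))  # {'bread': 3, 'milk': 3, 'eggs': 2}
```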

SSPCloud has adopted a solution that builds on this work, the Apache Spark framework, which was designed to accelerate processing on Hadoop systems (Box 2). As a use case based on these principles, in the first half of 2021 an INSEE team built an alternative to the architecture already used to process sales receipt data for the consumer price index. Processing operations that previously took several hours were accelerated by up to a factor of 10.

An environment and resources accessible from anywhere

In a cloud infrastructure, the user’s computer becomes a simple access point for running applications or processing operations on a central infrastructure. SSPCloud was designed on this model, enabling official statisticians to connect from any workstation with Internet access. As it is independent of INSEE’s own infrastructures and those of the Ministerial Statistical Offices, SSPCloud does not require users to be connected to their administration’s local network, which also makes it possible to grant access to people from other organisations (for example, members of European statistical institutes, academics, etc.). The SSPCloud infrastructure thus provides a so-called ubiquitous service, accessible anywhere from any device with a web browser (computer, tablet, mobile phone, etc.).

The choice of data storage technology was also driven by SSPCloud’s objectives of scalability and ubiquity. SSPCloud is based on S3 (Simple Storage Service), so-called “object” storage, in which an object consists of a file, an identifier and metadata, none of which is limited in size. Each data repository (here called a bucket) is directly addressable (one URL per repository) and accessible through an API. Designed to offer adaptive scalability and optimised for intensive computation, S3 storage also has useful properties for data access: for example, it features a selection API that makes it possible to retrieve only a subset of a file in a request, even if the file is compressed or encrypted. Most importantly, it is a natural complement to architectures based on containerised environments, for which it provides a persistence layer and easy connectivity without compromising security, and even with stronger security than a traditional storage system.
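The object model can be illustrated with a toy, in-memory stand-in for an S3-compatible service. Everything in it is hypothetical (class names, endpoint, bucket, key); against a real service, one would use an S3 client library such as boto3 instead. The sketch shows an object as key, data and metadata, path-style addressing with one URL per bucket and per object, and a `select` method that returns only the matching CSV rows, mimicking the idea of server-side selection.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    """An object: an identifier (key), the data itself and free-form metadata."""
    key: str
    data: bytes
    metadata: dict = field(default_factory=dict)


class ToyObjectStore:
    """Hypothetical in-memory stand-in for an S3-compatible service."""

    def __init__(self, endpoint):
        self.endpoint = endpoint.rstrip("/")
        self.buckets = {}

    def url(self, bucket, key):
        # Path-style addressing: <endpoint>/<bucket>/<key>
        return f"{self.endpoint}/{bucket}/{key}"

    def put(self, bucket, key, data, **metadata):
        self.buckets.setdefault(bucket, {})[key] = StoredObject(key, data, metadata)
        return self.url(bucket, key)

    def select(self, bucket, key, column, value):
        """Return only the CSV rows where `column` equals `value`,
        mimicking a selection done before the data reach the client."""
        obj = self.buckets[bucket][key]
        reader = csv.DictReader(io.StringIO(obj.data.decode("utf-8")))
        return [row for row in reader if row[column] == value]


store = ToyObjectStore("https://s3.example.org")
url = store.put("my-bucket", "receipts/2021.csv",
                b"shop,amount\nA,10\nB,5\nA,7\n", source="hypothetical")
print(url)  # https://s3.example.org/my-bucket/receipts/2021.csv
print(store.select("my-bucket", "receipts/2021.csv", "shop", "A"))
```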

Containerisation to manage execution conditions

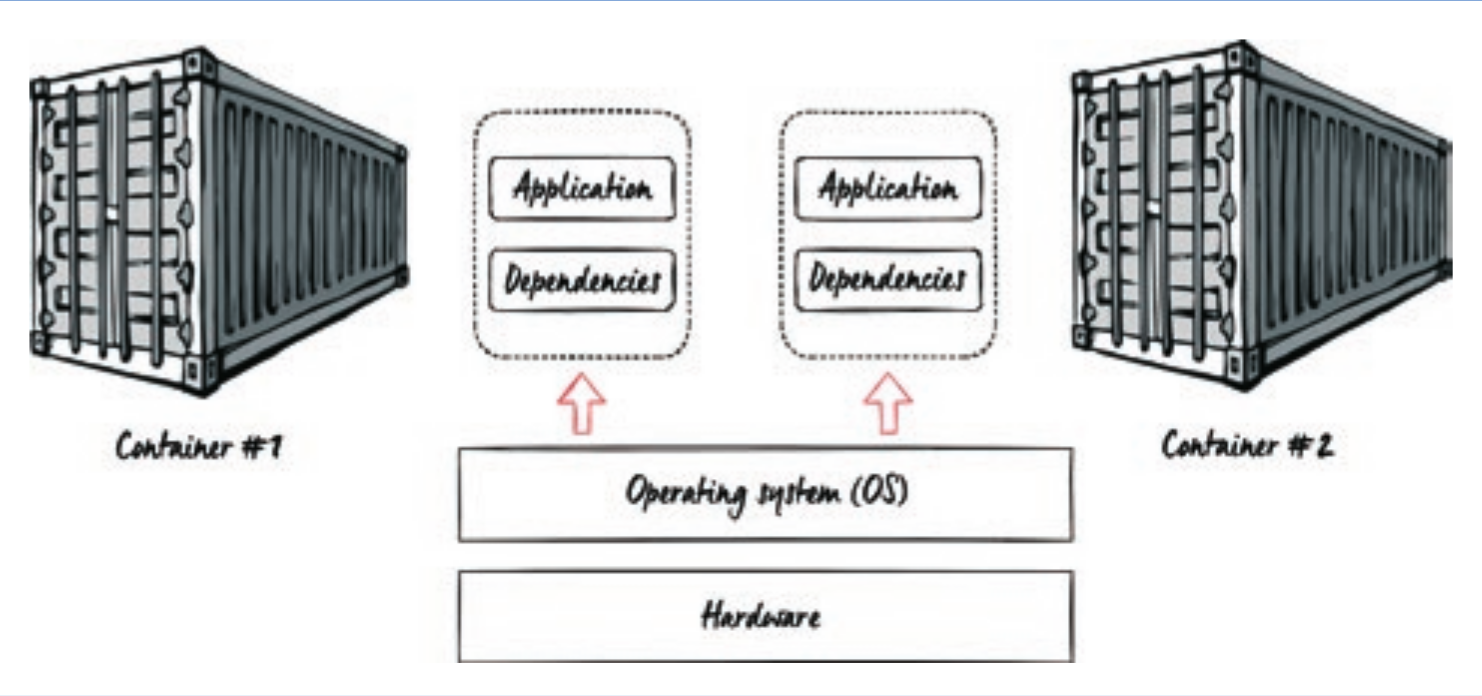

In IT environments, a container is a logical grouping of resources that makes it possible to encapsulate and run software, such as an application together with its libraries and other dependencies, as a single package. Containerisation (Box 3) provides a logical isolation system, addressing two major issues in data processing environments:

- it makes it possible to manage competition in access to physical resources (CPU and memory) between different users, by organising the allocation of capacities between containers;

- it ensures complete independence of the contents of each container, which makes it possible to construct a wide range of services and software without exposing users to compatibility issues between libraries.
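How competing requests for CPU and memory can be arbitrated is sketched below with a simple first-fit placement, assuming hypothetical node capacities and container requests. The Kubernetes scheduler filters and scores nodes with far richer rules; this only shows the principle that each container declares what it needs and is placed where capacity remains.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu: float    # free CPU cores
    memory: int   # free memory, in MiB


@dataclass
class Request:
    container: str
    cpu: float
    memory: int


def first_fit(requests, nodes):
    """Place each container on the first node with enough free CPU and memory.

    Returns {container: node name or None}; None means the request could not be
    placed and would have to wait until capacity is freed.
    """
    placement = {}
    for req in requests:
        placement[req.container] = None
        for node in nodes:
            if node.cpu >= req.cpu and node.memory >= req.memory:
                node.cpu -= req.cpu          # reserve the capacity on that node
                node.memory -= req.memory
                placement[req.container] = node.name
                break
    return placement


nodes = [Node("node-a", cpu=4, memory=8192), Node("node-b", cpu=2, memory=4096)]
requests = [
    Request("rstudio-alice", cpu=2, memory=4096),
    Request("jupyter-bob", cpu=2, memory=6144),
    Request("spark-worker", cpu=2, memory=2048),
]
print(first_fit(requests, nodes))
# {'rstudio-alice': 'node-a', 'jupyter-bob': None, 'spark-worker': 'node-a'}
```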

Box 3. Containerisation techniques increase the independence of data scientists

An information system consists of services (web server, database, etc.) that run continuously and tasks that are scheduled to run regularly or on an ad hoc basis. The code required to run services and tasks relies on an operating system, which organises access to physical resources (CPU, RAM, drives, etc.). In practice, specialists in IT infrastructure operation must intervene to prepare and update the systems on which the code runs. For example, in the conventional organisation of an information system, producing and maintaining code (a full application or a processing script) requires close interaction between the code developers and the teams operating the IT infrastructure.

Containerisation offers an alternative by creating “bubbles” specific to each service, while sharing the same underlying operating system. This isolation ensures that code can be moved from a development environment to the production environment while all its dependencies remain under control. Containerisation can also be applied uniformly across a set of servers.

To allow users to request the execution of code in complete autonomy, dedicated software then provides an orchestration function: Kubernetes, for example, optimises the allocation of physical resources to a set of containers and facilitates their interconnection. It manages the scalability of a processing operation, for example by duplicating a container to absorb the load where necessary. It also manages portability, moving a container to another resource group if necessary (other tools even allow containers to be moved from one computing cluster to another). These two characteristics make it possible to handle intensive calculations with big data technologies.
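Scaling by duplicating containers can be made concrete with the rule documented for the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance of 1 (0.1 by default). The function below is a simplified sketch of that rule only; the replica bounds and example values are hypothetical, and the real controller also handles stabilisation windows, missing metrics and pods that are not ready.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Number of container replicas needed to bring a metric back to its target.

    Simplified Horizontal Pod Autoscaler rule:
    ceil(current_replicas * current_metric / target_metric), skipped when the
    ratio is within `tolerance` of 1, then clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Two replicas at 90% CPU against a 50% target: scale out to 4.
print(desired_replicas(2, 90, 50))  # 4
# Four replicas at 20% CPU against a 50% target: scale in to 2.
print(desired_replicas(4, 20, 50))  # 2
```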

SSPCloud corresponds to a paradigm known as infrastructure as code.

The containerised environment is created only through the script specifications: data scientists can define their own working environment, the resources to be allocated to it, the software to be included (for example, R) and all the libraries useful for their processing operations (for example, R packages). The logic of SSPCloud is thus to propose that statisticians perform the design functions (writing out the processing operations), deployment functions (writing out the container that encapsulates the processing operation) and operation functions (writing out the container orchestration).
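As an illustration of this “infrastructure as code” logic, the sketch below describes a containerised R environment as a Kubernetes Pod manifest built in Python and serialised to JSON, a format that `kubectl apply -f` accepts. The field names (`apiVersion`, `kind`, `spec.containers`, `resources.requests` and `limits`) follow the Kubernetes Pod API; the pod name, image, resource figures, package list and the `main.R` script are hypothetical.

```python
import json


def r_environment_pod(name, image, cpu, memory, packages):
    """Describe a containerised R environment as a Kubernetes Pod manifest.

    The statistician chooses the image (software), the resources and the
    R packages; the environment is defined by this text, not by manual setup.
    """
    pkgs = ", ".join(f'"{p}"' for p in packages)
    install = f'install.packages(c({pkgs}), repos = "https://cloud.r-project.org")'
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "r-env",
                "image": image,
                # Install the libraries, then run a (hypothetical) script in the image
                "command": ["Rscript", "-e", install + "; source('main.R')"],
                "resources": {
                    "requests": {"cpu": cpu, "memory": memory},
                    "limits": {"cpu": cpu, "memory": memory},
                },
            }],
        },
    }


manifest = r_environment_pod(
    name="price-index-test",                        # hypothetical
    image="registry.example.org/stats/r-base:4.1",  # hypothetical image
    cpu="2", memory="4Gi", packages=["data.table", "arrow"])
print(json.dumps(manifest, indent=2))
```

Because the whole environment is plain text, it can be versioned, reviewed and shared like any other code.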

In the case of an environment dedicated to experimentation, the container mechanism makes it possible to manage a rapidly evolving range of services: unlike a monolithic central platform, which requires every user to adapt their code to each upgraded version of the base software, a container-based system allows each user to manage the components they use. An entire processing operation can thus be repeated under controlled technical conditions.

SSPCloud is based on containers, using the open source Kubernetes environment to deploy and manage containerised services. This technological choice, well suited to cloud environments, also naturally meets scalability needs: the resources assigned to a container are configurable, and complex processing operations can be distributed within a cluster across multiple containers running in parallel. Environments dedicated to big data are therefore increasingly organised around a combination of cloud computing and container orchestration. Big data frameworks such as Apache Spark or Dask work in close synergy with containers: they break big data processing operations down into a multitude of small tasks, and containers are a particularly efficient way to deploy these small unit operations while minimising the resources required.

Supporting statisticians’ IT practices

The set of technologies brought together within SSPCloud requires an in-depth review of statisticians’ professional practices in their use of the IT environment. At the end of the 2000s, with microcomputing at its peak, many of the technical resources used by INSEE engineers were local, or at least organised so as to require local access: statisticians kept both their code and their processing software on their own machines, while data were accessed through a file-sharing system. To a certain extent, that environment encouraged a manual, somewhat artisanal and insecure management of processing operations by statisticians.

When, in a move to streamline IT infrastructures, multi-tenant processing systems were favoured once again, these systems sought to preserve the user experience by providing access to a “remote desktop”. For example, the Unified Statistical Architecture (Architecture Unifiée Statistique – AUS), an internal INSEE data processing centre, offers a form of transition between local and centralised IT structures: it concentrates all resources on central servers but creates one virtual desktop per user, which leads users to maintain the same largely manual and artisanal practices in writing their scripts and running their processing operations.

The user experience within SSPCloud is radically different, which makes it imperative to adopt new practices. Users do not have a remote desktop and must learn to work with resources that, by design, are temporary and only engaged while they are actually in use. This framework encourages virtuous practices that better separate the code, the data and the process, whose persistence is managed with different technologies. Statisticians also need to learn how to orchestrate workflows within a framework that combines autonomy and automation. As a result, their practices move closer to those of IT developers.

Making everlasting what is no longer persistent

The first transformation of statisticians’ practices concerns their ability to organise the persistence of elements they use in their data processing. In SSPCloud, all of the services are referred to as “non-persistent”: they are designed to be disabled when they are no longer used. Everything that has been developed within this service then disappears – specifically, all resources designed within a container will be erased when the container is deleted – unlike what happens on a local machine where users keep track of files in their storage space, as is also the case with a remote desktop that is supported by a file directory.

SSPCloud users must ensure the durability of the resources they create, beginning with their computer programs. A version control tool such as Git, used with a collaborative forge, enables statisticians to manage their code outside the environment in which they are working and to use it from any terminal. Git also increases the traceability of processing operations and archives them as they are designed, thereby contributing to the continuous improvement of statistical processes. Other services can then rely on the source code repository to “build” the statistical pipeline, test its integrity and produce its modules. This is the primary function of a software forge, a must-have of software engineering that is also becoming a crucial element of statisticians’ working environment. SSPCloud is intended to work in conjunction with software forges: it contains a private instance of GitLab and also allows users to use the public GitHub and GitLab services.

Being autonomous in the orchestration of processing operations

The second transformation brought by SSPCloud concerns orchestrating processing operations. With SSPCloud, users are required to build the technical environment that suits their needs themselves, without calling on IT teams. To that end, they will need to specify the different parameters that make up their working environment in a programmatic manner.

This autonomy is made possible by cloud technologies and containerisation. Cloud technologies are agnostic with respect to the services they deploy, and containers can be created at any time by users, who define their content and allocated resources themselves. Because statisticians can deploy, in just a few seconds, databases, a computing cluster and a statistical processing environment that they have chosen or even designed themselves, they enjoy the greatest possible scope for creativity.

This approach also allows users to design the orchestration of a suite of elementary operations. Thus, statisticians can design a sequence of tasks such as, for example, retrieving data from the Internet every night, extracting relevant information from them, storing them in data tables and populating an interactive application that will be updated and deployed automatically.
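The nightly sequence described above can be sketched as a small dependency graph of tasks, executed in a valid order with Python’s standard `graphlib` module (Python 3.9+). The task names and bodies are hypothetical placeholders; in practice, a scheduler or workflow engine would trigger the run every night and the tasks would actually download, store and deploy.

```python
from graphlib import TopologicalSorter


# Hypothetical tasks: each receives the results of the tasks already run.
def retrieve(results):
    return ["2021-06-01,42", "2021-06-02,57"]  # stands in for a download


def extract(results):
    return [int(line.split(",")[1]) for line in results["retrieve"]]


def store(results):
    return {"daily_values": results["extract"]}  # stands in for a data table


def refresh_app(results):
    table = results["store"]["daily_values"]
    return f"app updated with {len(table)} rows, max={max(table)}"


TASKS = {"retrieve": retrieve, "extract": extract,
         "store": store, "refresh_app": refresh_app}
# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES = {"extract": {"retrieve"}, "store": {"extract"},
                "refresh_app": {"store"}}


def run_pipeline(tasks, dependencies):
    """Run every task once, in an order that respects the dependencies."""
    results = {}
    for name in TopologicalSorter(dependencies).static_order():
        results[name] = tasks[name](results)
    return results


print(run_pipeline(TASKS, DEPENDENCIES)["refresh_app"])
# app updated with 2 rows, max=57
```

Declaring dependencies rather than a hard-coded sequence means new tasks can be added without rewriting the order by hand.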

As an example, taking this logic to its conclusion enabled a team of INSEE statisticians to deploy a service for calculating distances by road that the SSPCloud community could use as a tool for spatial analyses. They transformed the data, independently deployed a server with an API for calculating road distances and, finally, developed and deployed an interactive application allowing users to calculate distances between different locations. Remarkably, no intervention by IT staff was required for any of these tasks.

Making progress in the implementation of reproducible statistics

The challenge of ensuring full control of the execution context applies as much to study activities as to statistical production activities. In academic work, for example, publications based on data are now required to meet a new criterion of scientific rigour: the possibility for a third party to reproduce exactly the published set of results.

Achieving this reproducibility therefore requires data processing solutions that can be both repeated and shared with third parties. Container technologies meet this expectation well by describing all the resources of a set of processing operations in a standardised manner and publishing that description on open registries (for example, container registries) alongside the source code of the processing operation (for example, on a public software forge such as GitHub or GitLab).
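A minimal sketch of what reproducing a result exactly can mean, assuming a hypothetical processing step that involves randomness (a bootstrap estimate of a mean): fixing the random seed and publishing a SHA-256 digest of the output lets a third party who reruns the same code on the same data check that they obtain identical results. Containers address the other half of the problem by pinning the software versions on which such a check silently depends.

```python
import hashlib
import json
import random


def bootstrap_mean(values, n_draws=1000, seed=2022):
    """Hypothetical processing step: bootstrap estimate of a mean, fixed seed."""
    rng = random.Random(seed)  # dedicated generator: no hidden global state
    draws = []
    for _ in range(n_draws):
        sample = [rng.choice(values) for _ in values]
        draws.append(sum(sample) / len(sample))
    return {"estimate": sum(draws) / n_draws, "n_draws": n_draws, "seed": seed}


def fingerprint(result):
    """SHA-256 digest of a canonical JSON serialisation of the result."""
    canonical = json.dumps(result, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


data = [12.0, 15.5, 9.8, 14.2, 11.1]
published = fingerprint(bootstrap_mean(data))
rerun = fingerprint(bootstrap_mean(data))
print(published == rerun)  # True: same code, data and seed give the same digest
```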

This requirement is gradually spreading throughout the world of Official Statistics, which is already keen on accountability for indicators’ production processes through methodological documentation and quality reports. Where official statisticians were required to provide passive documentation for each process stage, in order to account for compliance, data scientists are now providing active documentation, in which the actual description of the tasks to be carried out will be conducted in a way that automates their implementation. The ability to describe processing environments in a programmatic manner, with containerisation “contracts”, is at the heart of this approach.

Opening up and sharing of knowledge and know-how

In addition to being an IT infrastructure, SSPCloud was designed as a virtual “place” to facilitate meetings between stakeholders with different skills and experiences. Access to SSPCloud from the Internet means it can be accessed by the entire Official Statistics system – and, more broadly, by all stakeholders who want to be part of a knowledge-sharing movement.

As a symbolic date for this collaborative effort, the pre-launch of SSPCloud took place on 20 March 2020, at the very beginning of the first lockdown related to the pandemic crisis, a period in which many civil servants had no access to their administrative working environment. At that time, the test version of SSPCloud was intended for all civil servants, regardless of the government department to which they belonged, to provide them with a space for remote training in data science software.

Covering introductory topics (statistical and programming languages) as well as more advanced uses (machine learning, distributed computing), the SSPCloud educational space immediately found its audience, notably by providing tools for the Spyrales community, a group of civil servants engaged in learning R and Python. In particular, the container system makes it easy to offer interactive tutorials in the form of notebooks, combining software, exercises, data sets and sample programs in customised environments. SSPCloud also became the virtual venue for educational events organised remotely in 2020 and 2021, such as a serious game for training in R.

Benefit from sharing by choosing open source as a reference

Expanding the audience benefits innovation more generally, by facilitating cooperation between teams that otherwise operate in sealed-off information systems.

The rise of open source has provided a legal framework and a working method to support this desire to share. Statistics has been shaped by the rise of free software over the last 20 years, with R and now Python becoming the reference languages for statistics in place of proprietary software such as SAS®.

Beyond the use of open source languages and software, statisticians have more generally grown accustomed to sharing their programs: for example, the publication of an article proposing a new data processing method is now almost always accompanied by the release of an R or Python library implementing that method. Statistical knowledge is thus shared on two levels: scientific and technical.

SSPCloud has based its services exclusively on open source building blocks: statistical software, database management systems, development tools, etc. This ensures that work carried out on SSPCloud can be transferred to any other data science environment without proprietary barriers, whereas introducing commercial software inevitably limits opportunities for reuse.

Emblematically, the SSPCloud graphical interface is itself the fruit of a collaborative open source project developed by INSEE. This software project, entitled Onyxia (Figure 1), allows users to launch their services, manage their data repositories and set up their resources. It is designed to be deployed on other infrastructures using container technologies and can be reused by other IT departments that want to build services of the same nature.

Build technical partnerships to inspire and be inspired

Faithful to its purpose as a creative factory, SSPCloud is intended to be a touchstone to help statisticians discover new technologies, as well as to allow IT engineers to devise new architectures for data processing.

SSPCloud acts as a sandbox for both its end users and its designers, who keep watch for new technical opportunities. The SSPCloud team thus aims to build technological partnerships with third parties, both within the European Statistical System and with other stakeholders specialised in data manipulation and use (research centres, observatories). Because the whole project is open source, future partners can reuse it to create their own versions, while their feedback helps improve the overall design of SSPCloud. For example, Eurostat set up an experimental data processing environment based on the SSPCloud shared source code, reinforcing the community approach that is at the very heart of the SSPCloud project.

Published: 19/02/2024

For example, for a characterisation of big data, readers can consult publications from the National Institute of Standards and Technology (NIST) Big Data Public Working Group (NIST, 2017).

See the two models for the strategic alignment of an organisation (Anderson and Venkatraman, 1990): a "top-down" alignment of IT solutions based on area process needs, and a "bottom-up" alignment of area processes that seize upon transformation opportunities enabled by IT developments.

Central Processing Unit: usually refers to a computer's main processor.

Graphics Processing Unit.

“Data scientists [...] perform complex tasks in the processing of data. They are capable of processing a variety of data and of implementing optimised algorithms for classification and prediction in relation to digital, textual or image data. [...] Data scientists use programming tools and need to know how to optimise their calculations to make them run quickly by making the best use of IT capabilities (local server, cloud, CPU, GPU, etc.)” (DINUM and INSEE, 2021).

“Open labs are a place and an approach used by various stakeholders, with a view to freshening up innovative and creative procedures through the implementation of collaborative and iterative processes that are open and result in a physical or virtual materialisation” (Mérindol, Bouquin, Versailles et al., 2016).

The initiative gradually gained the Institute's support, with the creation of INSEE's statistical lab (SSP Lab) and IT lab (Innovation and Technical Instruction Division), whose staff worked to build this experimental ecosystem.

SSPCloud also received funding from the Bercy Ministerial Transformation Fund in 2019, and the support of three general interest entrepreneurs following a successful application to a call for projects from the Interministerial Digital Directorate in 2020.

In the collective imagination, laboratories are full of tubes, reagents of all kinds, sophisticated machines, sometimes disconcerting contraptions, unexpected reactions, etc. The term FabLab is a reminder of the role played by this experimental equipment in SSPCloud, and of the creations that may arise from its free use.

Provided that they comply with the general conditions of use. These relate to the type of data that can be processed on SSPCloud. For further information, see the General Conditions of Use for SSPCloud (https://www.sspcloud.fr/tos_fr.md).

The system should help people imagine what tomorrow's statistical production chains might look like. To borrow the image of a chemistry laboratory, the work must cover not only the discovery of a new molecule, but also the process for producing it on an industrial scale.

In computer hardware and software and in telecommunications, expandability or scalability refers to the ability of a product to adapt to a change in the order of magnitude of demand, in particular its ability to maintain its functionality and performance in the event of high demand.

The Hadoop framework was published by Doug Cutting and Yahoo in the form of an open source project in 2008, inspired by the work of Google (Dean and Ghemawat, 2004).

See Leclair (2019).

In 2006, Amazon Web Services launched Amazon S3 (Simple Storage Service), an online storage service based on a new technology. The open source software MinIO allows users to build and deploy an S3-compatible service independently of Amazon.

A URL (Uniform Resource Locator) is a string of characters that identifies a World Wide Web resource by its location.

An API (Application Programming Interface) is a standardised set of methods by which software provides services to other software. APIs come into play when an IT entity seeks to interact with a third-party system, and this interaction takes place in accordance with the access constraints defined by the third-party system.

Unlike HDFS storage, which relies on the co-location of data and processing units, S3 allows containers to be freely linked to data that are not stored on the same physical machines. This approach suits processing operations that require a large amount of computing power to be allocated dynamically, as in machine learning.

Kubernetes was created at Google, which developed it and released it as open source in 2014.

While a virtual machine (VM) system requires the installation of a complete operating system for each VM, containers are much lighter units that share a single operating system kernel.

Git is version control software that keeps track of all changes made to code by all contributors. It works in a decentralised manner: each contributor holds a copy of the project's code on their own computer and, where needed, on dedicated servers.
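To make "keeps track of all changes" more concrete: Git identifies each version of a file by a hash of its content, so any modification produces a new identifier that every contributor's copy can recompute and verify independently. The sketch below reproduces how Git computes the identifier of a file ("blob") object in a default repository, SHA-1 over a `blob <size>\0` header followed by the content; repositories using Git's newer SHA-256 object format produce different identifiers. The function name `git_blob_id` and the sample contents are illustrative.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Compute the identifier Git gives a file's content (a "blob" object).

    Git hashes a short header ("blob", the size in bytes, a NUL byte)
    followed by the raw content, using SHA-1 in default repositories.
    """
    header = b"blob " + str(len(content)).encode("ascii") + b"\0"
    return hashlib.sha1(header + content).hexdigest()


v1 = b"mean(income)\n"
v2 = b"median(income)\n"
# Any edit to the content yields a different identifier,
# which is what lets Git detect and record changes.
print(git_blob_id(v1))
print(git_blob_id(v2))
print(git_blob_id(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391 (Git's empty blob)
```

The result can be compared with the output of `git hash-object <file>` run on a file with the same content.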

A forge is a set of work tools that were originally designed for software development and are more generally useful for other types of projects, such as writing statistical code. Designed for collaborative work, a forge helps organise contributions from multiple stakeholders.

Through its catalogue of services, SSPCloud provides standard tools that meet most needs. However, users can also design and share their own tools, and are thus free to test a new open source software package or library.

See also Box 3.

The notion of infrastructure refers to the idea of a passageway (the highway) where users follow each other; the notion of place refers to a meeting place (the town square) where users meet.

The Spyrales community was formed in March 2020 to help civil servants train in the R and Python languages, by connecting people who want to learn with tutors and trainers, and by cataloguing educational resources.

Based on the principles of "serious gaming", FuncampR offers a playful approach to learning R: in a video game, trainees must solve puzzles whose answers are provided in R tutorials. FuncampR is an online training module deployed on SSPCloud using container technology.

The detection of major errors in the calculations of an article by renowned economists Carmen Reinhart and Kenneth Rogoff, published in 2010 and corrected by an erratum in 2013, prompted calls for higher academic standards of scientific rigour in economic and statistical publications.

This echoes Karl Popper's criteria of scientificity: Popper insisted that theories must be confronted with elements of verification, in the form of experiments. By extension, this means having verifiable elements to check the accuracy of the calculations that authors make to demonstrate their thesis.

Further reading

ANDERSON, John C. and VENKATRAMAN, N., 1990. Strategic alignment: a model for organizational transformation through information technology. [online]. November 1990. Center for Information Systems Research, Massachusetts Institute of Technology. CISR WP No. 217. [Accessed 16 December 2021].

DEAN, Jeffrey and GHEMAWAT, Sanjay, 2004. MapReduce: Simplified Data Processing on Large Clusters. [online]. OSDI'04, Sixth Symposium on Operating System Design and Implementation, pp. 137-150. [Accessed 16 December 2021].

DINUM and INSEE, 2021. Évaluation des besoins de l’État en compétences et expertises en matière de donnée. [online]. June 2021. Insee, Direction interministérielle du numérique. [Accessed 16 December 2021].

EUROSTAT, 2018. Bucharest Memorandum on Official Statistics in a Datafied Society (Trusted Smart Statistics). In: Eurostat website. [online]. 12 October 2018. 104th Conference of the Directors General of the National Statistical Institutes (DGINS). [Accessed 16 December 2021].

MÉRINDOL, Valérie, BOUQUIN, Nadège, VERSAILLES, David W. et al., 2016. Le Livre blanc des Open Labs. Quelles pratiques ? Quels changements en France ? [online]. March 2016. Futuris, PSB. [Accessed 16 December 2021].

NIST, 2017. NIST Big Data Program. [online]. Updated 11 January 2017. The National Institute of Standards and Technology (NIST). U.S. Department of Commerce. [Accessed 16 December 2021].

ROBINSON, Alan G. and STERN, Sam, 1997. Corporate creativity: How innovation and improvement actually happen. Berrett-Koehler Publishers.

SCHON, Donald A., 1963. Champions for Radical New Inventions. March-April 1963. Harvard Business Review.

SUAREZ CASTILLO, Milena, SEMECURBE, François, GALIANA, Lino, COUDIN, Élise and POULHES, Mathilde, 2020. Que peut faire l’Insee à partir des données de téléphonie mobile ? Mesure de population présente en temps de confinement et statistiques expérimentales. [online]. 15 April 2020. Insee blog. [Accessed 16 December 2021].

UNECE, 2013a. Utilisation des « données massives » dans les statistiques officielles. Note du secrétariat. [online]. 18 March 2013. High-Level Group for the Modernisation of Statistical Production and Services. Conference of European Statisticians, 10-12 June 2013, Geneva. [Accessed 16 December 2021].

UNECE, 2013b. Big data and modernization of statistical systems, Report of the Secretary-General. [online]. 20 December 2013. 45th Statistical Commission. [Accessed 16 December 2021].

UNECE, 2021. HLG-MOS Machine Learning Project. [online]. Updated 13 October 2021. [Accessed 16 December 2021].