Courrier des statistiques N4 - 2020

Microsimulation Models in a Statistical Office: Why, How and to What Extent?

Microsimulation models are key to the analysis of tax and social transfer policies. These may be static models, which focus on the effect of transfers at a given date, or dynamic models, which are essential for policies with long-term effects, such as pension reforms.

Although these models are in widespread use, they are not automatically developed in national statistical offices. Their development within INSEE can be explained by the fact that the institute produces not only raw data but also studies and projections. Analysing redistribution policies and forecasting their long-term impact is therefore firmly within its remit.

But microsimulation also offers interesting synergies with the production of statistics itself. We therefore propose various avenues of development to strengthen the role of these models among the tools used to assess public policies.

- Microsimulation: Two Main Categories of Model

- Why Use These Methods?

- Use of Dynamic Microsimulation Gradually Became Imperative

- Why in a National Institute of Statistics?

- Box 1. DESTINIE: Three Contributions to Analysis of the Pension System

- The “How”: Which Sources?

- Box 2. The Question of Consistency With Aggregate Projections

- ...And Which IT Tools?

- Microsimulation at INSEE: To What Extent?

Microsimulation: Two Main Categories of Model

INSEE began increasing its reliance on microsimulation more than twenty years ago, and the technique is now also widely used in parts of the Official Statistical Service and in certain other administrations similar to INSEE, mainly in the social field. At INSEE, two models coexist and have each gained a strong reputation in their respective fields: the INES model, applied to the study of tax and social transfers, and the DESTINIE model, used for pensions and other issues affected by population ageing (Bardaji et alii, 2003; Blanchet et alii, 2011). These models represent the two types of microsimulation: static microsimulation in the case of INES, and dynamic microsimulation in the case of DESTINIE.

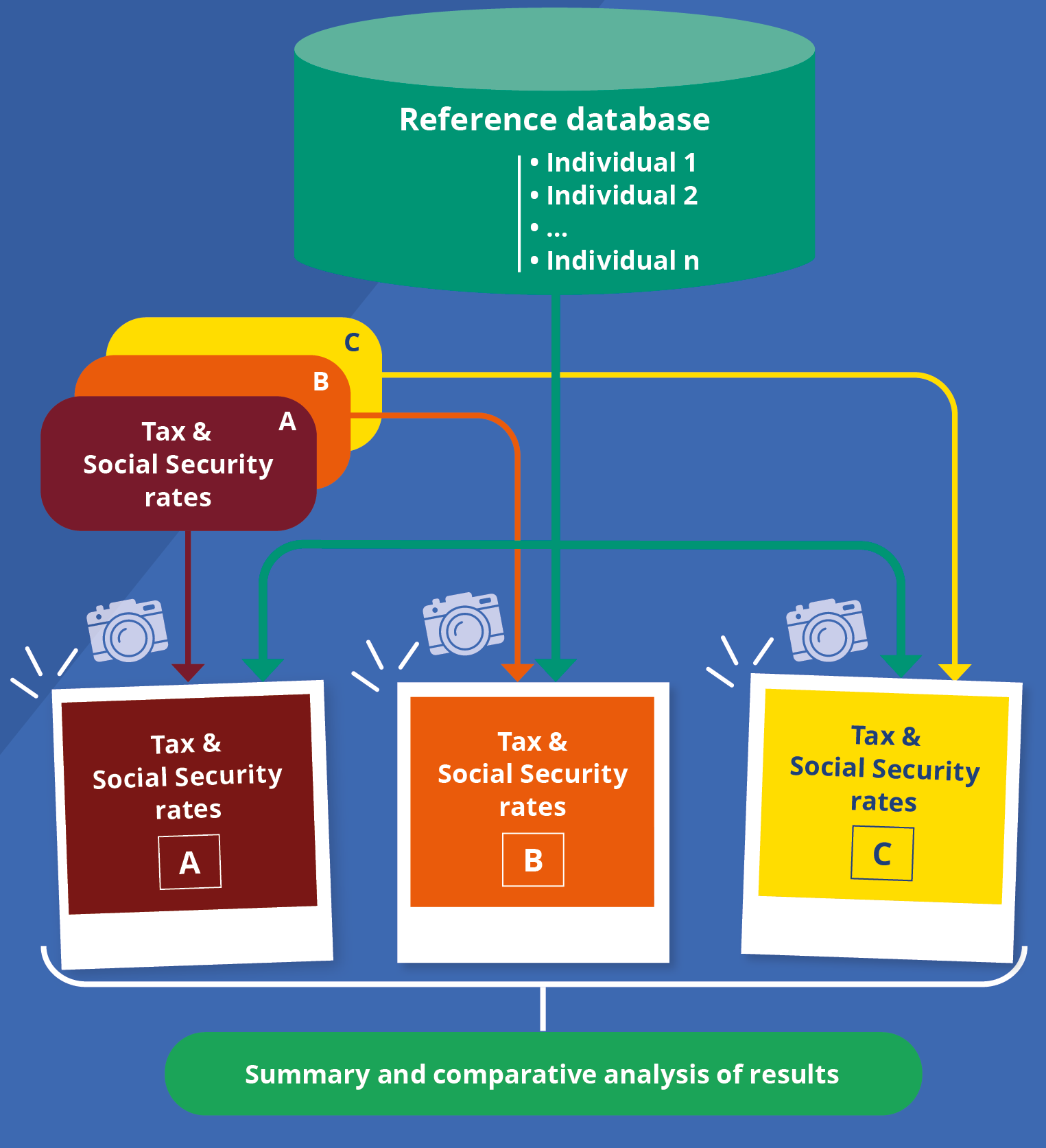

What do these two terms cover? Static microsimulation, applied to the tax system or social transfers, starts from a dataset of individual information at a given date and applies formulas to these individuals’ incomes to calculate taxes and social security contributions or transfers. In this way, we identify both the contributors to and the ultimate beneficiaries of these transfers, and assess how their profiles might change if the transfers were modified. In its most rudimentary form, the approach therefore consists solely of the accounting exercise of applying statutory scales, although this does not make these models any less difficult to build and handle. They can be enhanced by taking account of behavioural responses to changes in these scales, e.g. changes in labour supply (a point we return to in the conclusion), but the perspective nevertheless remains one of comparative statics: we compare snapshots of the state of the world with and without the policy changes (Figure 1).
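The accounting step described above can be illustrated with a minimal Python sketch. The two scales below are purely hypothetical (invented thresholds and marginal rates, not the actual French income tax scale); the sketch applies a baseline and a reform scale to the same individuals and compares the two snapshots person by person, which is all that comparative statics means here. The kinks at each threshold are the kind of non-linear effect that aggregate models struggle to capture.

```python
# Hypothetical scales: (lower threshold, marginal rate) pairs, illustrative only.
BASELINE = [(0, 0.0), (10_000, 0.11), (26_000, 0.30), (75_000, 0.41)]
REFORM = [(0, 0.0), (12_000, 0.11), (26_000, 0.30), (75_000, 0.45)]


def tax(income, scale):
    """Apply a piecewise-linear marginal-rate scale to one taxable income."""
    due = 0.0
    for i, (lower, rate) in enumerate(scale):
        # Each bracket ends where the next one starts; the top bracket is open-ended.
        upper = scale[i + 1][0] if i + 1 < len(scale) else float("inf")
        if income > lower:
            due += (min(income, upper) - lower) * rate
    return due


def compare(incomes, baseline, reform):
    """Comparative statics: per-person change in tax (reform minus baseline)."""
    changes = [tax(y, reform) - tax(y, baseline) for y in incomes]
    summary = {
        "gainers": sum(1 for d in changes if d < 0),
        "losers": sum(1 for d in changes if d > 0),
        "budget_effect": sum(changes),  # positive means extra revenue
    }
    return changes, summary


if __name__ == "__main__":
    sample = [8_000, 30_000, 100_000]  # hypothetical taxable incomes
    print(compare(sample, BASELINE, REFORM))
```

With this sample, the reform lowers tax by 220 for the 30,000 income, raises it by 780 for the 100,000 income and leaves the 8,000 income untouched. A real model such as INES would start from a representative data file with sampling weights, which this sketch ignores.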

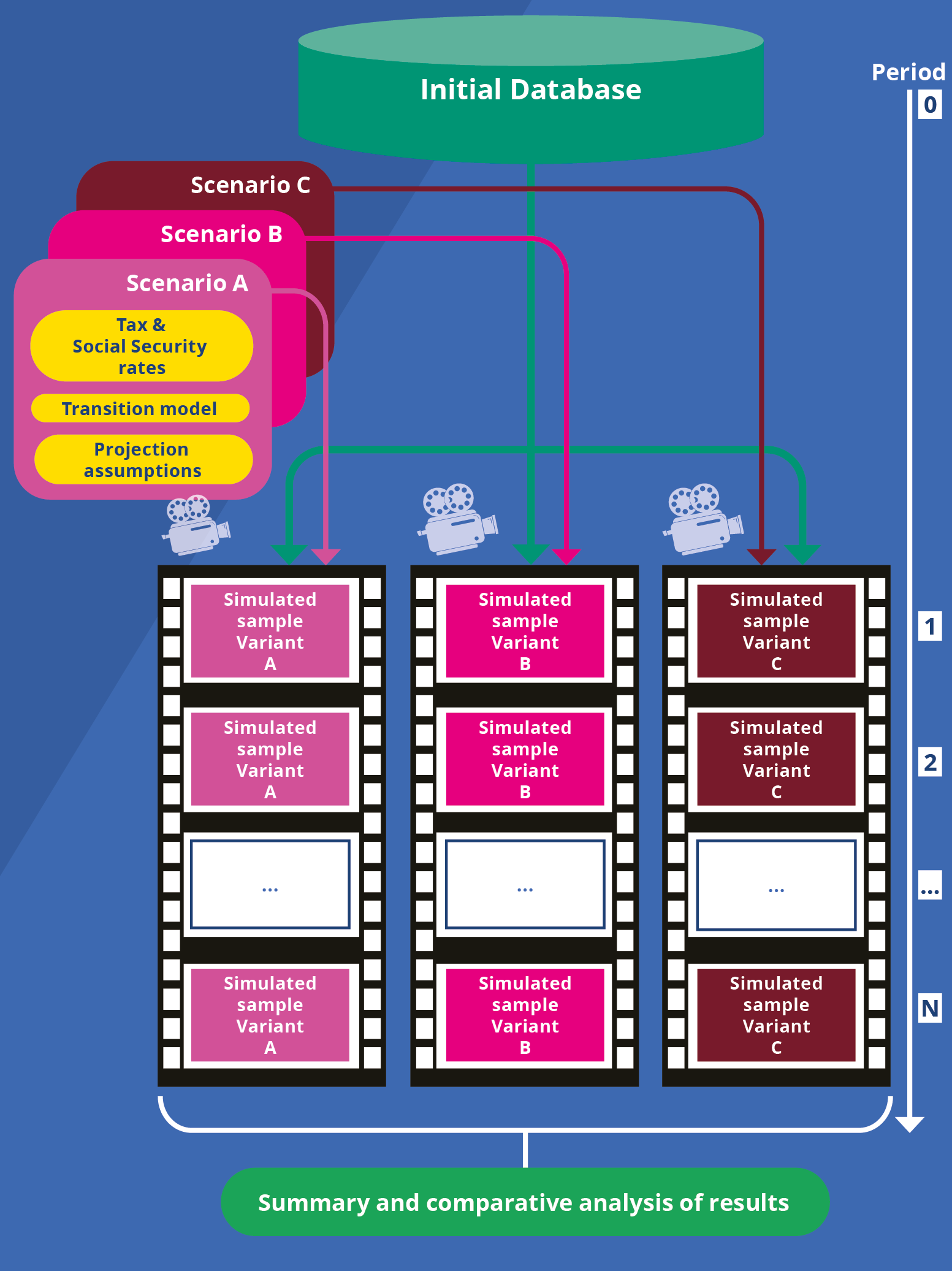

Dynamic microsimulation starts with the same kind of snapshot of a population, but the photo is just the first frame of a film in which individuals’ positions change, some individuals disappear through death and new ones appear, replenishing the simulated population as in real life (Figure 2). In this way, DESTINIE and other models of this type simulate the ageing of people who have already retired, the career progression of people in employment who in turn go on to retire, and the arrival of new contributors. They do this by making assumptions about demographic behaviour, wage mobility, changes in labour market status and retirement behaviour, under the legislative frameworks resulting from pension reforms already enacted or from new reform scenarios.
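The “film” logic can be sketched as a yearly loop in which each individual may give birth, die or simply age. This is a toy Python illustration, not DESTINIE’s code: the mortality and fertility functions are hypothetical stand-ins for estimated probabilities, and the labour market, wage and retirement modules are left out entirely. Each element of the returned list is one snapshot of the simulated population.

```python
import random


# Hypothetical annual probabilities, for illustration only.
def death_prob(age):
    """Stylised mortality rising exponentially with age (capped at 1)."""
    return min(1.0, 0.0005 * 1.1 ** age)


def birth_prob(person):
    """Stylised fertility: only women aged 20 to 40 can give birth."""
    return 0.08 if person["sex"] == "F" and 20 <= person["age"] <= 40 else 0.0


def simulate_year(population, rng):
    """Move the population forward one year: births, deaths, then ageing."""
    survivors, newborns = [], []
    for person in population:
        if rng.random() < birth_prob(person):
            newborns.append({"age": 0, "sex": rng.choice("FM")})
        if rng.random() >= death_prob(person["age"]):
            # Copy rather than mutate, so earlier frames stay intact.
            survivors.append({**person, "age": person["age"] + 1})
    return survivors + newborns


def simulate(population, years, seed=0):
    """Return the 'film': one population snapshot per simulated year."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    frames = [population]
    for _ in range(years):
        frames.append(simulate_year(frames[-1], rng))
    return frames


if __name__ == "__main__":
    initial = [{"age": i % 80, "sex": "F" if i % 2 else "M"} for i in range(2_000)]
    film = simulate(initial, years=30)
    print([len(frame) for frame in film[::10]])
```

Fixing the seed makes a run reproducible, which can help ensure that differences between scenarios reflect the assumptions rather than the random draws.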

Figure 1. Static Microsimulation Applied to Tax or Social Transfers

In static microsimulation, we compare "snapshots" of the state of the world with and without policy changes.

Figure 2. Dynamic Microsimulation Applied to Tax or Social Transfers

In dynamic microsimulation, we visualise change in the sample population over a given period. Each scenario in the model thus produces its own version of the "film", and these versions can then be compared or summarised.

Why Use These Methods?

Such techniques are used in numerous disciplines: demography, epidemiology, modelling of urban planning systems or transport networks, etc. In the social and economic field, the original idea is generally attributed to Guy H. Orcutt, better known to econometricians for the autocorrelation correction method named after him, who also designed, back in the 1950s, a global modelling project that was dynamic from the outset: it was meant to represent all change in the socio-economic system, that is, change in individuals and the households to which they belong, but also in the businesses that employ them and even in the public institutions that influence the behaviour of all these economic actors (Orcutt, 1957). The idea was to break away from the macroeconomic models that were starting to be developed at the time, given the limitations of reasoning in terms of broad categories of representative agents.

Orcutt’s goal was highly ambitious for its day. He tried to make it a reality throughout the rest of his career (Watts, 1991), but it was static models that were developed the most at first. Their usefulness in analysing tax and social transfer policies is indeed self-evident, and many people arrived at the idea on their own, quite independently of Orcutt’s proposals. In this field, the aggregate approach of macroeconomic models is admittedly not without interest: it allows the impact of fiscal or social policy changes to be investigated when their structure is not overly complex, with the advantage of capturing general equilibrium effects, such as the impact of transfers on the balance between supply and demand or on the labour market. But such models could never simulate the detailed effects of policies whose rules depend on a great many individual characteristics, very often in complex non-linear ways, for example because of threshold and ceiling effects; the income tax scale is the classic, familiar example. That is what microsimulation models allow us to do.

Their converse limitation is that they only offer partial equilibrium analyses, as what happens to each agent is deemed to have no effect on other agents and therefore on the rest of the economy. But they at least allow precise assessment of the so-called “first-round effects”, before interactions, feedback or behavioural reactions. It should be added that microsimulation is justified not only by the importance of measuring redistributive effects: it is just as necessary for accurately assessing the overall budgetary gains or costs of these policies.

The motives were the same for building dynamic models applied to pensions, and DESTINIE simply adopted a practice that was already widespread in other countries. Planning the financing of a pension system and the pensions it delivers is possible without going down to a very fine level of granularity, as long as the objective is only a first rough assessment of the consequences of population ageing. This was the approach followed until the early 1990s, when the Pensions White Paper was written, with models that grafted a simplified representation of the pension system onto traditional demographic projections, disaggregating the population by just two criteria, age and gender. This was sufficient to simulate the overall effects of ageing on the financial balance of pensions, or the effects on that balance of uniformly postponing the retirement age.

But the 1993 reform, which began addressing the questions raised by the White Paper, demanded a change of scale. Indeed, how can you simulate the impact of calculating pensions on the best 25 rather than the best 10 years without reasoning on an individual basis, given that the effects of this rule vary widely by type of career? And how do you simulate the impact of raising the contribution period required for a full-rate pension from 37.5 to 40 years without knowing the detailed distribution of contribution lengths within each generation? Multiplying typical-case studies would only have provided partial answers.
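Why the best-25 rule cannot be assessed without individual data can be shown with a small Python sketch. The two careers below are hypothetical, have the same lifetime earnings, and are assumed to be already expressed in revalued wages; the reference wage is simply the average of the best N years, ignoring every other parameter of the actual pension formula (replacement rate, contribution length, ceilings).

```python
def reference_wage(wages, best_n):
    """Average of the `best_n` highest annual wages in a career.

    Wages are assumed to be already revalued to a common base year.
    """
    best = sorted(wages, reverse=True)[:best_n]
    return sum(best) / len(best)


# Two hypothetical 40-year careers with identical lifetime earnings.
flat = [29_625] * 40
rising = [15_000 + 750 * year for year in range(40)]  # from 15,000 up to 44,250

if __name__ == "__main__":
    for n in (10, 25):
        print(n, reference_wage(flat, n), reference_wage(rising, n))
```

Moving from 10 to 25 years leaves the flat career’s reference wage at 29,625 but lowers the rising career’s from 40,875 to 35,250, a fall of about 14%: the same rule change has very different effects depending on the shape of the career, which a model disaggregated only by age and gender cannot see.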

Use of Dynamic Microsimulation Gradually Became Imperative

There were some misgivings about answering these questions with microsimulation. In particular, there may have been concern about the complexity, and therefore the lack of transparency, of this type of instrument. There may also have been unease at seeing projections rely on models whose results are affected by stochastic noise. Dynamic microsimulation involves changing the situations of a sample of individuals through random draws according to probabilities specified by the model, then relying on the law of large numbers to treat the resulting outcomes as an accurate picture of changes at more aggregate levels. These results are inevitably affected by simulation noise, which does not exist in deterministic aggregate models.

Against this last misgiving, it could be argued that this form of imprecision is much smaller than the other sources of uncertainty affecting any long-term forecast. Besides, there was something slightly paradoxical about dreading this element of randomness in the model when we are entirely used to the fact that observing the present is also subject to sampling variability: we accept that the current unemployment rate is only measured to within a few tenths of a percentage point, so why should the same margin of imprecision be intolerable in projections of pension expenditure to 2040? As for the lack of transparency, models built in this way are admittedly hard to get to grips with, so the criticism of opaqueness is not entirely unfounded. But this overlooks the fact that the apparent transparency of simpler models comes at the cost of more simplifying assumptions, whose consequences are just as opaque: the representative individual of macroeconomic models is an abstraction that, on closer inspection, is far more opaque than aggregating simulations of thousands of individuals whom the modeller has striven to make as similar as possible to individuals in the real world.
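The unemployment-rate analogy can be made concrete. In the toy Python sketch below (hypothetical event probability of 0.1), the spread of a simulated rate across replications is compared with the binomial formula sqrt(p(1 - p)/n), the same formula that governs the sampling error of a rate estimated from a simple random sample: simulation noise shrinks with the square root of the simulated sample size.

```python
import math
import random
import statistics


def simulated_rate(n, p, rng):
    """Share of n simulated individuals for whom an event with probability p occurs."""
    return sum(rng.random() < p for _ in range(n)) / n


def simulation_noise(n, p=0.1, replications=100, seed=42):
    """Empirical spread of the simulated rate across replications, returned
    together with the theoretical binomial standard deviation."""
    rng = random.Random(seed)
    rates = [simulated_rate(n, p, rng) for _ in range(replications)]
    return statistics.stdev(rates), math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    for n in (1_000, 10_000):
        empirical, theoretical = simulation_noise(n)
        print(f"n={n}: empirical sd={empirical:.4f}, theoretical sd={theoretical:.4f}")
```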

The practice was therefore eventually accepted and adopted. The answer to the question “Why use microsimulation?” is quite simply that there is no other way to model such complex systems.

But this does not answer the question “Why in an institute of statistics?” For there are other natural hosts for this type of exercise, whether in the research world or in the administrations in charge of the social welfare programmes concerned, which need it for their short-term management and long-term forecasting.

Why in a National Institute of Statistics?

In fact, microsimulation had also been developed in these other places before arriving at INSEE, and continued to be developed there afterwards:

- In regard to static microsimulation, there was already the twin experience of the SYSIFF model at the Department and Laboratory for Theoretical and Applied Economics – DELTA (Bourguignon et alii, 1988) and the MIR model at the French Department for Forecasting. SYSIFF may be regarded as the precursor to the fiscal simulator developed by Landais, Piketty and Saez, which became the TAXIPP model now used by the Institute of Public Policies (IPP) (Landais et alii, 2011). The tradition that began with MIR continued at the French Treasury department, which currently has the SAPHIR model, while the French family allowance office, CNAF, developed its own model, MYRIADE, and ran it for a long time before finally joining in with the development and use of INES;

- In regard to dynamic microsimulation, INSEE was the main pioneer in France, but the technique has since spread within the country: first at CNAV, the general scheme providing the basic first-pillar pension for private-sector wage earners, with the PRISME model (Poubelle et alii, 2006), then at DREES with the TRAJECTOiRE model and at the Treasury with the APHRODITE model, before other pension schemes followed suit (the PABLO model for SRE, the state civil service pension scheme; the CANOPÉE model, developed by Caisse des Dépôts et Consignations (CDC) for the CNRACL scheme; and a model currently being developed at AGIRC-ARRCO). Finally, the academic world has now taken it up as well, with the PENSIPP model from the IPP.

In most foreign countries, these are the two types of settings, academic and administrative, where these models have tended to be developed.

Why is France an exception? Strictly speaking, it is not the only one: microsimulation has also been developed at Statistics Canada and Statistics Norway. In the case of France and Norway, this feature can obviously be explained by the fact that the National Statistical Institute (NSI) of each country has the dual task of producing statistics and studies. Microsimulation can therefore be regarded as having been developed at INSEE as part of its study and diagnostic mission.

But the argument needs further explanation, since it is clear from all of the above that one of the main uses of microsimulation models is the ex ante simulation of reforms, for direct use in preparing economic policy. Yet this is an area from which official statistics is, in principle, barred: its role is to analyse and describe what is or has been, not to prescribe or help design what should be. Might this weaken the position of microsimulation models within a statistical institute?

There are several arguments suggesting the opposite. They spring to mind very easily in relation to static microsimulation. It is certainly not INSEE that is in charge of simulating future tax reforms. The institute even avoids getting involved at too early a stage in relation to the most recent measures. However, for its task of diagnosing change in French society, it needs to assess the impact of past measures.

Microsimulation is just as useful for ex post assessment of every kind, whether in the immediate aftermath or at a later stage, as it is for ex ante assessment. It is through microsimulation that we can most easily retrace in detail the contribution that past tax or social measures have made to the standard of living of the different population categories. It can do so accurately when comprehensive microeconomic data on income before transfers are available, and it also allows this to be done in advance, before these detailed data are fully available. This is how INES helped meet the demand for early indicators of income distribution and the poverty rate. Here, microsimulation appears as one more instrument for producing statistics. It can be seen as an extension of imputation, which is used to fill temporary or permanent gaps in direct observation. Or it can be seen as an extension to social statistics of the model-based approach to flash estimates of other economic data routinely used in the national accounts: in both cases, the term nowcasting denotes this practice of combining partial information and models to produce initial estimates of what is in the process of happening.

The argument may seem less convincing for dynamic microsimulation and pension projection, which clearly involve exploring the future, and a very distant future at that. However, exploring the future is also part of INSEE’s portfolio of activities, or at least certain aspects of it.

In some areas, this exploration of the future goes no further than the very short term, as in INSEE’s analysis of the economic situation published in the Note de Conjoncture (Conjoncture in France). The reason is that the institute considers that its resources do not allow it to do better than a six-month forecast for variables known to be highly volatile.

But demographic phenomena show far greater inertia and follow a very mechanical logic, which makes it entirely possible to produce long-term forecasts or, to be more precise, “projections”, i.e. simulations of how the size and structure of the population are likely to evolve under various mortality, fertility and migration scenarios.

When it comes to this kind of forecasting, INSEE is certainly not an exception: demographic projections for the European Union member states are most often produced by the NSIs. Moreover, INSEE has long supplemented its total population projections with projections of the working population.

What DESTINIE does is therefore a natural extension of these very standard exercises; it even feeds into the second of them by producing projections of labour force participation among older age groups. This had long been done in the same way as for other age brackets, by logistic extrapolation of time series of past activity rates. This method clearly became inappropriate once the age and career-length conditions for claiming a pension began to be tightened. A way had to be found of modelling how these changes had begun, and would continue, to affect retirement behaviour. We come back here to the reason cited in the introduction to explain why microsimulation has become essential to all work on pensions: the impact of highly complex benefit computation rules can only be assessed with models that take account of the details of individual situations. This does not mean that these models necessarily hold the truth about future behaviour, but they at least make maximum use of the information that is available or can reasonably be forecast.
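For comparison, the older method mentioned above, logistic extrapolation of past activity rates, can be sketched as a straight-line fit on the log-odds scale. This is an assumed, simplified version (ordinary least squares on logit-transformed rates for a single age group, with invented data in the usage example); its limitation is visible in the code itself: the projection depends only on past trends, with no input through which a change in pension rules could act.

```python
import math


def logit(x):
    return math.log(x / (1 - x))


def logistic_extrapolation(years, rates, target_year):
    """Fit logit(rate) = a + b * year by ordinary least squares and extrapolate.

    The fitted curve can only continue past trends; nothing in it can react
    to a change in pension rules.
    """
    z = [logit(r) for r in rates]
    mean_t = sum(years) / len(years)
    mean_z = sum(z) / len(z)
    slope = sum((t - mean_t) * (zi - mean_z) for t, zi in zip(years, z)) / sum(
        (t - mean_t) ** 2 for t in years
    )
    intercept = mean_z - slope * mean_t
    return 1 / (1 + math.exp(-(intercept + slope * target_year)))


if __name__ == "__main__":
    # Hypothetical activity rates of one age group, 2000-2009.
    years = list(range(2000, 2010))
    rates = [0.30, 0.31, 0.33, 0.34, 0.36, 0.37, 0.39, 0.41, 0.42, 0.44]
    print(round(logistic_extrapolation(years, rates, 2030), 3))
```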

In doing this, we obtain a tool that can answer many other questions while remaining within the scope of measurement, without straying into recommendation (Box 1). Using DESTINIE to forecast the change in the relative standard of living of retired households, and its dependence on assumptions about economic growth, does not amount to helping draw up future reforms; it sheds light on the effects of reforms already adopted. Using the individual trajectories that the model must reconstruct to assess the intragenerational redistributive properties of the current system is simply an extension to the life cycle of the instantaneous redistribution assessments carried out with the static INES model.

Box 1. DESTINIE: Three Contributions to Analysis of the Pension System

The pension system can redistribute through two channels: “non-contributory” rules, for which redistribution is the raison d’être (minimum pension, validation of periods of unemployment, family entitlements, etc.), or the redistributive effects of the “contributory” core of the system. For instance, the best-25-years rule of this core system is deemed redistributive, as it smooths out the impact of career setbacks on total pension entitlements. Here, DESTINIE helped reveal a more complex reality (Aubert et Bachelet, 2012). Taking the best 25 years into account benefits long or rising careers, which are not those leading to the smallest pensions. Hence the core of the system appears rather anti-redistributive, all the more so when differences in life expectancy are taken into account. It is the non-contributory rules that effectively soften the inequalities built up during working lives. This effect has been quantified (Dubois et Marino, 2015): for the 1960 to 1970 generations, going from the bottom to the top of the educational attainment scale, the “return” on social security contributions decreases from 1.5% to a little less than 1% for men, and from 2.5% to 2% for women, whose family benefits, survivor’s pension entitlements and longer life expectancy compensate for their disadvantage in terms of pay and career length.

Before the end of the 1990s, pensions, and the past wages used in calculating them, were revalued in line with the average wage. The move to price indexation had two effects: past wages now represent a lower percentage of final salary, which lowers the initial replacement rate; then, once the pension has been claimed, its level continues to lose ground relative to the average wage of the working population. DESTINIE showed to what extent this should help stabilise the pensions/GDP ratio (Marino, 2014): from about 11% at the start of the ’90s, it would have risen to 20% in 2040 in the absence of any reform. Price indexation brought this back down to around 16%. But it creates a new dependence on growth assumptions: if rapid growth returned, pensions would lose a lot of ground relative to wages. Conversely, stagnation in real wages and productivity would neutralise the move from wage indexation to price indexation. Can alternative indexation rules be found that would allow the relative level of pensions to be managed independently of the uncertainties of economic growth? That is an issue that DESTINIE has also investigated (Dubois et Koubi, 2017), as has, outside INSEE, the PENSIPP model (Blanchet, Bozio et Rabaté, 2016). With or without the introduction of a unified system, the pension system’s sensitivity to macroeconomic shocks will inevitably be a major issue in the post-COVID-19 period.
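The growth dependence described above follows from simple compounding. The hypothetical Python function below tracks a pension’s ratio to the average wage after it has been claimed, assuming constant real wage growth; it deliberately ignores the first effect mentioned (the lower initial replacement rate caused by revaluing past wages with prices).

```python
def relative_pension(years_since_claim, real_wage_growth, indexation="prices"):
    """Ratio of a pension to the average wage, relative to its value at claiming.

    Stylised assumptions: the average wage grows at `real_wage_growth` per year
    in real terms; price indexation keeps the pension constant in real terms,
    while wage indexation makes it follow the average wage. Inflation cancels out.
    """
    if indexation == "wages":
        return 1.0
    if indexation == "prices":
        return (1 + real_wage_growth) ** -years_since_claim
    raise ValueError("indexation must be 'prices' or 'wages'")


if __name__ == "__main__":
    for growth in (0.0, 0.01, 0.02):
        print(growth, round(relative_pension(20, growth), 3))
```

Over 20 years, a price-indexed pension falls to about 82% of its initial relative level with 1% real wage growth and about 67% with 2%, but keeps 100% when real wages stagnate, which is why stagnation would neutralise the switch to price indexation.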

The stabilisation of the pensions/GDP ratio anticipated before the COVID-19 shock will also result from the postponement of the retirement age (the number of years of contribution needed for a full-rate pension increased from 37.5 to 43 following the reforms of 1993, 2003 and 2014, and the minimum retirement age rose from 60 to 62 following the 2010 reform). This postponement has already led to an increase in the employment rate of 60-64 year-olds and, if the system remains unchanged, should raise the average age at which people claim their pension to about 64 in 2040. Another idea guided one aspect of the 2003 reform: the introduction of life expectancy indexation, with each quarter gained being split two thirds/one third between length of working life and length of retirement. DESTINIE confirmed that this measure alone was not enough to stabilise the ratio: it has no effect for individuals who reach the minimum retirement age with the required years of contribution, nor for those with short careers who are forced, in any event, to claim their pension at the maximum age. The trend towards stabilisation of the ratio is linked rather to the rise in the retirement age to 62 (Aubert et Rabaté, 2015). In the absence of any other reform, the ratio would initially remain stationary and would then resume growing once the career-length condition stabilises at 43 years, as planned for the 1970 generation.
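Why life expectancy indexation has no effect for some individuals can be seen in a stylised Python sketch. It assumes that people claim their pension as soon as they reach the full rate, bounded below by the minimum age of 62 and above by a hypothetical maximum age of 67 (the text only refers to “the maximum age”); the two-thirds/one-third split is the one described above, while the 3-quarter gain and the three profiles in the example are invented.

```python
from fractions import Fraction


def split_life_expectancy_gain(gain_quarters):
    """Split a life-expectancy gain (in quarters): 2/3 to the required
    contribution period, 1/3 to retirement, as in the 2003 rule."""
    gain = Fraction(gain_quarters)
    to_contribution = gain * Fraction(2, 3)
    return to_contribution, gain - to_contribution


def claim_age(start_age, required_years, gap_years=0.0, min_age=62.0, full_rate_age=67.0):
    """Stylised age at which a full-rate pension is claimed.

    The full rate is reached once the required contribution period is complete
    (career assumed continuous apart from `gap_years`), but never before
    `min_age` and at the latest at `full_rate_age` (hypothetical value).
    """
    age_when_complete = start_age + required_years + gap_years
    return min(max(age_when_complete, min_age), full_rate_age)


if __name__ == "__main__":
    extra_years = float(split_life_expectancy_gain(3)[0]) / 4  # 2 quarters = 0.5 year
    profiles = (("early starter", 18, 0), ("later starter", 21, 0), ("short career", 22, 10))
    for label, start, gaps in profiles:
        print(label, claim_age(start, 43, gaps), claim_age(start, 43 + extra_years, gaps))
```

Only the individual whose claim age lies strictly between the two bounds is affected: the early starter still claims at 62 and the short career still at 67, whatever the required contribution length.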

The “How”: Which Sources?

There are other links, similarities and synergies with the production of statistics. Here we turn to the question of “how”.

Depending on whether it is static or dynamic, a microsimulation model has two or three main components:

- one or more modules reproducing the legislation we are seeking to simulate;

- a database of individual data;

- and, in the case of dynamic microsimulation, one or more modules for forecasting individual situations, with their projection parameters.

Reproducing the legislation to be simulated does not, strictly speaking, come under the scope of statistics. At most, a microsimulation model may, where possible, rely directly on the IT tools for calculating entitlements, as used by the administrations in charge of applying this legislation.

The two other strands, however, are very much part of the task of producing statistics.

This is clearly true of building the underlying database. The development of INES, for instance, has been closely linked to that of the statistical system on tax and social incomes, which has itself fed into the MYRIADE and SAPHIR models at CNAF and the Treasury respectively. For dynamic microsimulation models, forecasting the future presupposes files containing sufficient information about the past.

The very first version of DESTINIE relied on the Household Wealth Survey, the only source at the time that gave retrospective information about the length of individuals’ past career, albeit in a somewhat crude form. DESTINIE’s requirements tipped the scales in favour of enhancing the source through gathering more detailed information on career history.

DESTINIE is still linked to the Household Wealth Survey, which allows it to make projections not just for individuals but also for the households to which they belong. To forecast individual entitlements, however, administrative panels have the advantage, with far more comprehensive files and far more accurate data. The TRAJECTOiRE model, for instance, is closely linked to the sample of contributors to pension schemes known as the EIC, set up and developed by DREES. A panel that only gives past contribution lengths is of limited use for drawing conclusions about pensions; it becomes fully relevant only when supplemented with probable future contribution lengths, from which the related entitlements can be calculated: that is what TRAJECTOiRE brings to the EIC. The model is, to some degree, the logical and necessary extension of the panel on which it is based.

As for the data used to set the projection parameters, the retrospective data contained in the underlying panel may form part of them: they show how individuals have behaved in the past, which is a basis for forecasting future behaviour. But the model will often also draw on other sources.

In DESTINIE, for example, the probability matrices used to forecast labour market transitions are derived from CEREQ’s Generation Survey and from the EIC, while those governing demographic behaviour are based on data from INSEE’s Permanent Demographic Sample (EDP), combined with data from the Family, Labour Force and Training and Occupational Skills (FQP) surveys. In their own way, aggregate projections may also feed into the model if they are used to calibrate part of its results, which at the same time offers a partial solution to the problem of stochastic variability (see above) arising from the model’s “free” operation (Box 2).
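The probability matrices mentioned above drive yearly transitions between labour market states. A minimal Python sketch, with an invented three-state matrix (DESTINIE’s actual states, matrices and conditioning variables are not reproduced here), shows how one individual’s path is drawn from the row of the current state.

```python
import random

# Hypothetical annual transition probabilities between labour market states
# (each row sums to 1). A real model would estimate such matrices from survey
# or panel data and would typically condition them on individual characteristics.
TRANSITIONS = {
    "employed": {"employed": 0.92, "unemployed": 0.05, "inactive": 0.03},
    "unemployed": {"employed": 0.40, "unemployed": 0.45, "inactive": 0.15},
    "inactive": {"employed": 0.10, "unemployed": 0.05, "inactive": 0.85},
}


def next_state(state, rng):
    """Draw next year's state from the current state's row of the matrix."""
    row = TRANSITIONS[state]
    return rng.choices(list(row), weights=list(row.values()), k=1)[0]


def simulate_path(start, years, seed=0):
    """Simulate one individual's sequence of yearly labour market states."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(years):
        path.append(next_state(path[-1], rng))
    return path


if __name__ == "__main__":
    print(simulate_path("unemployed", 10, seed=1))
```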

Box 2. The Question of Consistency With Aggregate Projections

Which calibration techniques should be used to consolidate the results of a microsimulation model and guarantee their consistency with the forecasts produced by traditional methods? Let’s take the example of births in a model applied to pensions:

- baseline demographic projections are established using the so-called “method of components”, in which the mother’s age is the only predictor of a birth: these projections determine the annual number of births as the sum, over all ages, of the number of women of each age weighted by the fertility rate at that age;

- in the microsimulation model, the mother’s age will also be a determining factor in the probability of a birth, but there will be additional explanatory factors: marital status, level of education and position in the labour market, the number of children she already has, and the time since the birth of her youngest child. Ultimately, there is no guarantee that the simulated number of births will be consistent with the one derived from usual projection techniques.
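The method of components reduces to a weighted sum. The Python sketch below uses invented numbers of women and fertility rates for just three ages; a real projection would cover all childbearing ages, with fertility, mortality and migration assumptions applied year by year.

```python
def births_by_components(women_by_age, fertility_rates):
    """Annual births = sum over ages of (women of that age) x (age-specific fertility rate)."""
    return sum(women_by_age.get(age, 0) * rate for age, rate in fertility_rates.items())


# Hypothetical figures for three single-year ages only.
women = {25: 1_000, 30: 1_200, 35: 900}
rates = {25: 0.10, 30: 0.12, 35: 0.06}

if __name__ == "__main__":
    print(births_by_components(women, rates))  # 100 + 144 + 54 = 298 births
```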

There are two schools of thought when faced with such a discrepancy: either accept the difference, on the grounds that it is normal for a different model to give different results, or seek to avoid apparent inconsistencies. The latter is possible by constraining individual relative probabilities of having a child so that, on average, they give the same number of events as the baseline projection. This technique is called “alignment”. It assumes that the microsimulation model is not necessarily better than the standard projection at giving this total number, which is more a matter of conjecture than of forecasting. In the case of births, the standard projection is based on conjectures about overall fertility. The microsimulation model merely distributes these births across the female population, consistently with the relative probabilities of having a child given the mothers’ characteristics.
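Alignment can be implemented in several ways. One common approach, sketched below in Python as an assumption rather than as DESTINIE’s own procedure, adds the same constant to every woman’s log-odds of giving birth and finds that constant by bisection so that the expected number of births equals the baseline target. Because only the level shifts, odds ratios between individuals (and hence their ranking) are preserved, which is the sense in which the model “only distributes” the births. The probabilities and target in the example are invented.

```python
import math


def _logit(p):
    return math.log(p / (1 - p))


def _expit(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1 / (1 + math.exp(-x))
    e = math.exp(x)
    return e / (1 + e)


def align_logit(probabilities, target, low=-50.0, high=50.0, iterations=100):
    """Add the same constant to every individual's log-odds so that the expected
    number of events equals `target`, searching for the shift by bisection.

    Requires 0 < p < 1 and 0 < target < len(probabilities); the search bounds
    are arbitrary assumptions.
    """
    if not 0 < target < len(probabilities):
        raise ValueError("target must lie strictly between 0 and the population size")
    logits = [_logit(p) for p in probabilities]
    for _ in range(iterations):
        shift = (low + high) / 2
        if sum(_expit(z + shift) for z in logits) < target:
            low = shift
        else:
            high = shift
    shift = (low + high) / 2
    return [_expit(z + shift) for z in logits]


# Hypothetical birth probabilities giving 0.75 expected births,
# aligned on a baseline projection of 1.5 births.
free = [0.05, 0.10, 0.20, 0.40]
aligned = align_logit(free, 1.5)

if __name__ == "__main__":
    print([round(p, 3) for p in aligned], round(sum(aligned), 6))
```

When the free probabilities are far from the target, the shift becomes large and every individual probability moves a lot, which is the risk of distortion discussed below.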

The choice between free and “aligned” simulation must be made case by case. In DESTINIE, for instance, alignment on macro projections is used for labour force participation (LFP) rates between ages 25 and 55. Conversely, it is DESTINIE that takes the lead in assessing LFP rates over 55 or under 25: here, the macro projections derive from the microsimulation model rather than the other way round.

When used, such calibrations address one of the misgivings about microsimulation: by forcing the number of events to match the macro targets, they neutralise sampling variability and therefore the noisiness of the results. However, the remedy must be applied with care. If the “free” model spontaneously gives a number of events that is a long way off the target, forcing this number may distort some of the model’s other, uncalibrated results, or even accentuate their instability.

A less risky way of reducing sampling noise, termed “noise reduction”, consists of forcing the simulated number of events to match exactly its expected value derived from the model’s individual probabilities, thereby neutralising the randomness that fully independent random draws would generate.
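One way to implement noise reduction, swapped in here as an illustration (the text does not say which technique DESTINIE uses), is systematic sampling: a single uniform draw is shared by the whole population, so each individual keeps their own probability p_i while the total number of events can only be the expected value rounded down or up. The Python sketch contrasts it with fully independent draws on hypothetical probabilities.

```python
import math
import random


def draw_events_systematic(probabilities, rng):
    """Draw 0/1 events with a single uniform offset (systematic sampling).

    Each individual still experiences the event with probability p_i, but the
    total number of events is always floor(S) or ceil(S), where S = sum(p_i)
    (up to floating-point rounding). Requires 0 <= p_i <= 1. In practice the
    list is usually shuffled first, since neighbours' draws are not independent.
    """
    u = rng.random()
    events, cumulative = [], 0.0
    for p in probabilities:
        before = math.floor(cumulative + u)
        cumulative += p
        events.append(math.floor(cumulative + u) - before)
    return events


def draw_events_independent(probabilities, rng):
    """Fully independent draws, for comparison: the total varies much more."""
    return [1 if rng.random() < p else 0 for p in probabilities]


if __name__ == "__main__":
    probs = [0.25] * 10  # expected number of events: 2.5
    rng = random.Random(0)
    print(sorted({sum(draw_events_systematic(probs, rng)) for _ in range(1_000)}))
    print(sorted({sum(draw_events_independent(probs, rng)) for _ in range(1_000)}))
```

With ten individuals at 0.25, the systematic draws always give 2 or 3 events, whereas the total from independent draws spreads over a much wider range.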

Lastly, the noisiness of the results can be reduced even more simply by running a large number of independent replications of the model and averaging them. The drawback of this third option is that it increases computation time: how much of a problem this is depends on the model’s speed of execution.
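Replication averaging is the simplest option to code. In the toy Python sketch below, each replication reruns the same hypothetical model with a different seed; the standard error of the average falls with the square root of the number of replications, while computation time grows linearly with it, which is the trade-off mentioned above.

```python
import random
import statistics


def one_run(n, p, seed):
    """One replication of a toy model: number of events among n individuals."""
    rng = random.Random(seed)
    return sum(rng.random() < p for _ in range(n))


def replicated_estimate(n, p, replications, base_seed=0):
    """Mean over independent replications (distinct seeds) and its standard error."""
    runs = [one_run(n, p, base_seed + r) for r in range(replications)]
    return statistics.mean(runs), statistics.stdev(runs) / replications ** 0.5


if __name__ == "__main__":
    for reps in (10, 100):
        mean, se = replicated_estimate(1_000, 0.1, reps)
        print(f"{reps} replications: mean={mean:.1f}, standard error={se:.2f}")
```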

...And Which IT Tools?

The question of “how?” also covers IT tools. For a long time, limited computing capacity prevented much progress in implementing Orcutt’s original programme. This is less and less the case today, of course, but the extent to which it still matters depends heavily on the software chosen to build the model.

In this field, we have not seen the spontaneous development of dedicated, optimised tools similar to those developed for macroeconomic modelling and statistical analysis. There is a simple reason for this: microsimulation does not call for highly advanced technical skills. Once the individual data files have been brought together and mastered, a good general-purpose language and reliable random number generators are all that is required to start. Beyond that, building the model is just a matter of thoroughness and patience. In this context, modellers naturally tend to opt for the software or programming language they know best, whatever that may be.

To take DESTINIE as an example once again, it was initially written in Turbo Pascal. An attempt to move it to C++ was then abandoned, after which it fell back on Perl and ran under that language for a few years, before switching to a mix of R and C++. The move to R is consistent with the place this language is establishing for itself throughout INSEE. As R is the leading language for the rest of the institute’s statistical work and studies, it seems quite natural to use it for microsimulation as well, sparing users the need to master two different languages. But R may not be the best choice in terms of computing time; this is where rewriting part of the code in C++ comes into play.

The speed-of-execution argument had also led the designers of MYRIADE to opt straight away for C++, although MYRIADE has since been abandoned, CNAF having switched to INES. INES is programmed in SAS, as are TRAJECTOiRE at DREES and PRISME at CNAV. But the question now arises as to whether INES should switch to R, as DESTINIE has done.

It is often regretted that this great variety of approaches leads many modellers to reinvent the wheel, or even to reinvent it several times over when they move from one language to another. Although each model is specific, there must surely be building blocks that can be pooled. The calibration and alignment procedures mentioned in Box 2 are an obvious example, but there have also been attempts to build more systematic programming frameworks that would let modellers concentrate on the content of their models while spending as little time as possible on purely IT matters. Statistics Canada, for instance, developed the MODGEN tool, written in C++. LIAM2 is a more recent development that is enjoying some success; it is championed by the microsimulation team at the Belgian Federal Planning Bureau (de Menten et alii, 2014). The choice of programming tools is therefore still evolving.

As in other statistical production processes, a balance must be struck between pooling and the principle of subsidiarity, which lets modellers choose the tools that seem most appropriate for the model they want to build.

Microsimulation at INSEE: To What Extent?

Having tackled the questions of “Why?” and “How?”, we are left with future prospects. In which new directions could these tools be developed? At the risk of verging on utopianism, we could return to Orcutt’s original programme, but also strengthen the links with other practices for assessing public policies, to which INSEE contributes by means other than microsimulation and with which synergies might be developed.

First, the Orcutt programme. Dynamic microsimulation applied solely to pensions covers only one of the many areas this programme could encompass, and probably not what Orcutt had in mind at the time. And in this specific area of pensions, DESTINIE has in fact lost some of the advantage it enjoyed as a pioneer: it is less accurate than the models based on the administrative data of DREES, CNAV and other schemes that have since adopted the same kind of approach. On the other hand, it still has the advantage of simulating households, not just individuals. This enables it to calculate standards of living and to study mechanisms that depend on the total income of households rather than on the incomes of their members taken separately. These features make it key to studying survivors’ pensions. It is also used for a subject that is separate from but closely related to pensions, namely dependency and how it is covered, for which the household dimension is very important.
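To illustrate why simulating households matters, here is a minimal sketch of the standard of living as INSEE measures it. It is household disposable income divided by the number of consumption units on the modified OECD scale: 1 for the first adult, 0.5 for each other person aged 14 or over, and 0.3 for each child under 14. How DESTINIE itself computes this is not described here; the `Person` record and the incomes are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Person:
    age: int
    income: float  # hypothetical annual disposable income attributed to this person


def consumption_units(ages):
    """Modified OECD scale: 1 for the first adult, 0.5 for each other person aged 14
    or over, 0.3 for each child under 14."""
    units = 0.0
    for rank, age in enumerate(sorted(ages, reverse=True)):
        if rank == 0:
            units += 1.0  # the oldest member is treated as the first adult
        elif age >= 14:
            units += 0.5
        else:
            units += 0.3
    return units


def standard_of_living(household):
    """Household disposable income per consumption unit, shared by all members."""
    total_income = sum(person.income for person in household)
    return total_income / consumption_units([person.age for person in household])


# A couple: 30,000 / 1.5 consumption units = 20,000 each.
couple = [Person(age=70, income=20_000.0), Person(age=68, income=10_000.0)]
# The surviving spouse living alone, before any survivor's pension: 10,000 / 1 unit.
survivor = [Person(age=68, income=10_000.0)]
print(standard_of_living(couple), standard_of_living(survivor))
```

In this example the survivor's standard of living halves, from 20,000 to 10,000. The spouse's income disappears while consumption needs fall only from 1.5 to 1 unit. This is the kind of effect that only a household-based model can capture when studying survivors' pensions.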

Without going as far as the “total” social modelling envisaged by Orcutt, one avenue for development may therefore be to make DESTINIE a key reference tool for long-term forward studies of living conditions and standards of living, considered from many different angles. This can be done by gradually building additional modules, since one of the method’s advantages is the possibility of grafting new modules onto the model, making good use of what its core simulates and supplementing it with the simulation of related variables. A savings and household wealth module focused on financial assets is therefore currently under construction; simulating property assets would be a natural addition. Other themes could be added on demand, e.g. simulating future exposure to energy poverty in a context of rising energy prices and environmental taxes. This subject is already addressed by the French General Commission on Sustainable Development (CGDD) within the static framework of the Prometheus model (Thao Khamsing et alii, 2016).

Finally, we turn to the position of microsimulation with regard to the assessment of public policies. This position is somewhat paradoxical. Microsimulation is, by its very nature, a central part of any ex ante or ex post assessment system, and yet it enjoys little recognition among economists working on public policy evaluation. For most economists in this field, evaluating a public policy means empirically measuring its impact on one or more variables of interest (the outcomes). Ideally this relies on a controlled experiment or, failing that, on quasi-experiments, in which sufficiently comparable population groups have been exposed differently to the policy, allowing them to play the roles of treated and control groups.

Experimental or quasi-experimental approaches are, of course, important parts of the assessment process, but reducing assessment to them makes no sense. Not all policies can be assessed in those terms, and favouring a single method means giving up on learning about many subjects. Even where this method is relevant, it only answers part of the question: knowing that a particular financial incentive changed the labour supply of a given proportion of people does not, on its own, tell you the measure’s cost-benefit ratio or its redistributive effects.

This argues for acknowledging that the approaches are complementary (Blanchet et alii, 2016). Static microsimulation models are regularly criticised for being, most often, purely accounting models without any behavioural assumptions. Dynamic microsimulation models do introduce behaviour, but often very mechanical or exogenous behaviour, for instance when they merely reproduce the transition probabilities between states observed in the past. There is therefore scope for including behavioural assumptions whose parameters would be set from the results of ex post empirical evaluations. But not only those, since we may also have to forecast behavioural responses for which no rigorous ex post evaluation will be available for a long time, if ever. The best example is the retirement age: we cannot wait for reforms to have produced their full effects on the age at which people claim their pensions before incorporating those effects into projection models.

There also remains the goal of building general equilibrium models that would include not only endogenous behaviour but also the interactions between these behavioural responses. In Orcutt’s plan, interactions and feedback effects were handled through recursion. At date t, agents react independently of one another to the current state of the world they observe around them, which produces, at the next date, a new state of the world to which they react in turn, and so on. For this to capture real-world interactions, we simply need to build models with sufficiently fine time steps, where computing power permits. Attempts have been made in France to apply this logic to the functioning of the labour market (Barlet et alii, 2010; Ballot et alii, 2016). They move microsimulation towards another category of models, which the economics literature prefers to call agent-based models, to clearly distinguish them from a form of microsimulation deemed too focused on accounting.
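A toy Python sketch of this recursive logic follows. Everything in it is an illustrative assumption rather than the design of the models cited. Agents are only employed or unemployed, a fixed number of vacancies per period (`vacancies`) is shared among job seekers, and jobs end at a constant `separation_rate`. Each step reads only the date-t state, and the agents' independent reactions build the date-t+1 state.

```python
import random


def step(employed, rng, separation_rate, vacancies):
    """Move from date t to date t+1.

    Every agent reacts to the same date-t state of the world; their reactions,
    taken together, make up the date-t+1 state.
    """
    job_seekers = employed.count(False)
    # Interaction (toy rule): a fixed number of vacancies is shared among current
    # job seekers, so each seeker's chance depends on how many others are looking.
    hiring_prob = min(1.0, vacancies / job_seekers) if job_seekers else 0.0
    new_state = []
    for is_employed in employed:
        if is_employed:
            new_state.append(rng.random() >= separation_rate)  # keeps the job
        else:
            new_state.append(rng.random() < hiring_prob)  # finds a job
    return new_state


def run(n_agents, n_steps, separation_rate=0.05, vacancies=5, seed=0):
    """Iterate the recursion and return the unemployment rate at each date."""
    rng = random.Random(seed)
    employed = [i % 2 == 0 for i in range(n_agents)]  # roughly half start unemployed
    path = [employed.count(False) / n_agents]
    for _ in range(n_steps):
        employed = step(employed, rng, separation_rate, vacancies)
        path.append(employed.count(False) / n_agents)
    return path


print(run(n_agents=1000, n_steps=12))
```

Shortening the time step would mean smaller per-step rates and more iterations, which is where computing power becomes the constraint.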

It is too early to say to what extent this type of approach might compete with traditional macro-econometric modelling, but macroeconomists themselves are increasingly aware of the need to move away from their overly aggregated representations. Going beyond the average is necessary not only for statistical findings; the same question arises for modelling tools as a whole.

Published: 15/09/2022

INES is short for “INSEE-DREES”, the two organisations that jointly developed the model (DREES is the Research, Studies, Evaluation and Statistics Directorate within the French Ministry for Solidarity and Health). See the article by Simon Fredon and Michael Sicsic in this same issue.

DESTINIE: this French acronym stands for “modèle Démographique Économique et Social de Trajectoires INdividuelles sImulÉes” [Social and Economic Demographic model of Simulated Individual Trajectories].

The April 1991 White Paper on Pensions presented all pension schemes, their development prospects and proposals for managing the effects of the ageing of the population.

i.e. the noise arising from the simulation’s repeated random draws, which, strictly speaking, goes beyond sampling variability.

See Legendre, 2019 for this whole history and more systematic bibliographic references for all the models cited in this article.

Joint research unit formed by the ENS graduate school, CNRS National Centre for Scientific Research and EHESS School of Advanced Studies in Social Sciences.

See the article by Pierre Cheloudko and Henri Martin in this same issue.

CNRACL: Caisse Nationale de Retraites des Agents des Collectivités Locales, or pension fund for local authority employees.

AGIRC (General Association of Supplementary Pension Institutions for Managers) and ARRCO (Association for the Supplementary Pension Scheme for Employees) manage supplementary pension schemes.

INSEE produces a Conjoncture in France (Note de Conjoncture) in March, June and December, and an Outlook (Point de Conjoncture) in October.

EIC is an anonymised sample of individuals whose entire careers are reconstituted, year after year, by bringing together data from different schemes. For more details, see the article by Pierre Cheloudko and Henri Martin in this same issue.

CEREQ, the French Centre for Research on Education, Training and Employment, conducts a survey that makes it possible to reconstitute and analyse young people’s career paths over the first three years of their working lives.

The CGDD reports to the French Ministry for Ecological and Inclusive Transition. It is home to the Statistical Data and Studies Service (SDES), i.e. the statistical department of the ministries in charge of the environment, energy, construction, housing and transport.

Further reading

AUBERT, Patrick and BACHELET, Marion, 2012. Disparités de montant de pension et redistribution dans le système de retraite français. In: L’Économie française – Comptes et dossiers, édition 2012. [online]. 4 July 2012. Collection Insee références, pp. 40-62. [Accessed 24 April 2020].

AUBERT, Patrick and RABATÉ, Simon, 2015. Durée passée en carrière et durée de vie en retraite : quel partage des gains d’espérance de vie ?. In: PIB par habitant en longue période – Assurance dépendance – Durée de la retraite et espérance de vie – Mesure de la concentration spatiale. [online]. 19 February 2015. Économie et Statistique, n°474-2014, pp. 69-95. [Accessed 24 April 2020].

BALLOT, Gérard, KANT, Jean-Daniel and GOUDET, Olivier, 2016. Un modèle multi-agents du marché du travail français, outil d’évaluation des politiques de l’emploi : l’exemple du contrat de génération. In: Revue économique. [online]. July 2016. Vol. 67, n°4, pp. 733-771. [Accessed 24 April 2020].

BARDAJI, José, SÉDILLOT, Béatrice and WALRAET, Emmanuelle, 2003. Un outil de prospective des retraites : le modèle de microsimulation Destinie. In: Micro-simulation : l’expérience française. [online]. La Documentation française – Économie & prévision, n°160-161, pp. 193-213. [Accessed 24 April 2020].

BARLET, Muriel, BLANCHET, Didier and LE BARBANCHON, Thomas, 2010. Microsimulation et modèles d’agents : une approche alternative pour l’évaluation des politiques d’emploi. In: Bas salaire et marché du travail. [online]. 20 August 2010. Économie et Statistique, n°429-430 – 2009, pp. 51-76. [Accessed 24 April 2020].

BLANCHET, Didier, BOZIO, Antoine and RABATÉ, Simon, 2016. Quelles options pour réduire la dépendance à la croissance du système de retraite français ?. In: Revue économique. [online]. Vol. 67, n°2016/4, pp. 879-911. [Accessed 24 April 2020].

BLANCHET, Didier, BUFFETEAU, Sophie, CRENNER, Emmanuelle and LE MINEZ, Sylvie, 2011. Le modèle de microsimulation Destinie 2 : principales caractéristiques et premiers résultats. In: Les systèmes de retraite et leurs réformes : évaluations et projections. [online]. 20 October 2011. Économie et Statistique, n°441-442 – 2011, pp. 101-121. [Accessed 24 April 2020].

BLANCHET, Didier, HAGNERÉ, Cyrille, LEGENDRE, François and THIBAULT, Florence, 2016. Évaluation des politiques publiques, ex post et ex ante : l’apport de la microsimulation. In: Revue économique. [online]. July 2016. Vol. 67, n°4, pp. 685-696. [Accessed 24 April 2020].

BOURGUIGNON, François, CHIAPPORI, Pierre-André and SASTRE-DESCALS, José, 1988. SYSIFF: a simulation of the French tax benefit system. In: ATKINSON, A. B. and SUTHERLAND, Holly, 1988. Tax benefit models. December 1988. STICERD Occasional Paper, n°10, p. 395. ISBN: 8-85328-103-3.

DE MENTEN, Gaëtan, DEKKERS, Gijs, BRYON, Geert, LIÉGEOIS, Philippe and O’DONOGHUE, Cathal, 2014. LIAM2: a New Open Source Development Tool for Discrete-Time Dynamic Microsimulation Models. [online]. 30 June 2014. Journal of Artificial Societies and Social Simulation (JASSS), n°17. [Accessed 24 April 2020].

DUBOIS, Yves and KOUBI, Malik, 2017. Règles d’indexation des pensions et sensibilité des dépenses de retraites à la croissance économique et aux chocs démographiques. [online]. 30 March 2017. Insee, Documents de travail, n°G2017-02. [Accessed 24 April 2020].

DUBOIS, Yves and MARINO, Anthony, 2015. Le taux de rendement interne du système de retraite français : quelle redistribution au sein d’une génération et quelle évolution entre générations ?. In: Microsimulation appliquée aux politiques fiscales et sociales. [online]. 17 December 2015. Économie et Statistique, n°481-482, pp. 77-95. [Accessed 24 April 2020].

LANDAIS, Camille, PIKETTY, Thomas and SAEZ, Emmanuel, 2011. Le modèle de micro-simulation TAXIPP – version 0.0. [online]. January 2011. Édition Institut des Politiques Publiques. [Accessed 24 April 2020].

LEGENDRE, François, 2019. L’émergence et la consolidation des méthodes de microsimulation en France. In: Numéro spécial – 50e anniversaire. [online]. Économie et Statistique, n°510-511-512 – 2019, pp. 201-217. [Accessed 24 April 2020].

MARINO, Anthony, 2014. Vingt ans de réformes des retraites : quelle contribution des règles d’indexation ?. [online]. 15 April 2014. Insee Analyses n°17. [Accessed 24 April 2020].

ORCUTT, Guy H., 1957. A new type of socio-economic system. In: The Review of Economics and Statistics. [online]. Vol. 39, n°2, pp. 116-123. [Accessed 24 April 2020].

POUBELLE, Vincent, ALBERT, Christophe, BEURNIER, Paul, COUHIN, Julie and GRAVE, Nathanaël, 2006. Prisme, le modèle de la Cnav. In: Retraite et société. [online]. June 2006. La Documentation française, n°48, pp. 202-215. [Accessed 24 April 2020].

THAO KHAMSING, Willy, CECI-RENAUD, Nila and GUILLOT, Lola, 2016. Simuler l’impact social de la fiscalité énergétique : le modèle Prometheus (PROgramme de Microsimulation des Énergies du Transport et de l’Habitat pour ÉvalUations Sociales) – Usages et méthodologie. In: Études et documents. [online]. February 2016. Service de l’Économie, de l’Évaluation et de l’Intégration du développement Durable (SEEIDD), n°138. [Accessed 24 April 2020].

WATTS, Harold W., 1991. Distinguished Fellow: An Appreciation of Guy Orcutt. In: The Journal of Economic Perspectives. [online]. Winter 1991. Vol. 5, n°1, pp. 171-179. [Accessed 24 April 2020].