Courrier des statistiques N5 - 2020

What is Data? Impact of External Data on Official Statistics

Official statisticians use an original raw material, namely data: survey data, but also administrative data. They also use other management data that are not the result of an observation process. Understanding this material means exploring its main dimensions, using the definition of data as a triple <concept, domain, value>.

All data are characterized by a set of conventions, about semantics, classifications, formats, etc. Moreover, data exist within a knowledge infrastructure, and they are stored according to non-neutral choices. Data also depend on the environment in which they were born, and on the productive processes that use them. We then see that data cannot be pure and perfect: data are not given, they are side effects of operational processes.

Using such material efficiently for the purposes of official statistics requires unravelling the implicit set of existing conventions and building a kind of observation system a posteriori, taking into account the ecosystem in which these data were embedded.

- Data in Statistics

- Data: An Attempt at Characterisation

- Box 1. A Few Insights into Information Theory

- Moving Towards a Three-Dimensional Definition...

- ...The Associated Concept...

- ...The Domain...

- ...The Value

- Datum, Data...

- ...In Databases, Data Warehouses, Data Lakes, Data Streams

- The Data Environment

- The Statistician Versus External Data

- By Way of a Conclusion

Just like cabinetmakers, blacksmiths and stone masons, statisticians work with raw, imperfect materials, full of knots and flaws. They use their own tools and methods to polish, assemble and shape them. These materials that they work with and contribute to creating are data. Data swarm and spring up everywhere, ceaselessly, and in all areas of life, seeming to present a fantastic opportunity for all artisans in the field. However, they are strange, abundant and incredibly heterogeneous materials: they give the appearance of being easily accessible, yet they elude us and resist attempts to give them an operational definition. Everyone has their own perception of data and the idea of restoring the neutrality of the observer is not to be taken lightly. The aim of this article is to equip you with the tools to better understand data and to see the impact that the proposed characterisations have on a statistician’s work.

Data in Statistics

A priori, it goes without saying that there is a link between statistics and data: “Statistics is the discipline that concerns the [study of phenomena through the] collection, organisation, analysis, interpretation, and presentation of data [in a way that makes them understandable to all].”

However, it may also be of interest to look further back in time. A close look at the classic literature on the role of the statistician (Volle, 1980), or at the many courses in mathematical statistics, reveals that the word “data”, while unquestionably present, plays less of a central role than one might imagine. There is often talk of results of experiments: the concept of experimentation, in this case random, plays a central role in another discipline, namely probability, which is already known to have strong links to the discipline of statistics. Another term that comes up frequently is observations. We think of statistical data as being the results of observations regarding defined variables and the subsequent treatments applied to them. This implies the use of an entire arsenal of means of observation, via a scientific experiment (in the field of epidemiology, for example), or via a survey, among other possibilities.

Admittedly, official statisticians have been venturing beyond this framework for decades, making use of administrative data (typically DADS). However, in some respects, the reporting process has many points in common with the survey process: by bending the concept a little, one could consider that the administrative reports form part of an observation process, even though they are produced for routine operations (Rivière, 2018).

Since the turn of the millennium and particularly over the last ten years or so, there has been a significant paradigm shift: it is no longer down to the statistician alone to build their own data acquisition process, as there are now multiple data sources in the world, whether they be fully open (open data), open subject to conditions (data accessible by researchers), via agreements, or even open in various forms in return for payment. They therefore potentially have access to a very rich array of materials. However, they have no knowledge of how those materials were drawn up and, in particular, there is no guarantee that they are the result of an observational approach.

However, acts such as conducting a survey or a scientific experiment, or organising a reporting process give a very specific and indeed biased view of what data can be. In each of these situations, it is almost like taking a snapshot of a moment in time by examining the current situation, either through questioning or by using sensors to probe the physical world. Observation is a specific method of collecting data, but it is not the only possible means of doing so. If the available data are the result of another approach, the statistician must be aware of this in order to avoid errors when converting the materials or when interpreting the results.

All of this forces us to rethink our understanding of the many facets of the word “data”.

Data: An Attempt at Characterisation

Characterising the concept of data is made all the more delicate by the fact that many of the works on the subject of data simply avoid the issue of its definition. Its etymology provides an original starting point: datum and its plural data come from the Latin dare, which means “to give”. In Howard Becker’s opinion, the choice of this word was an accident of history (Becker, 1952): rather than pointing to “what has been given” to scientists by nature, the focus should have been on what scientists chose to take, the selections that they made from all of the potential data. In order to capture the partial and selective nature inherent in data, it would have been better to choose captum rather than datum.

Let’s try the dictionaries. Here we find several, rather disparate explanations:

- “That which is given, known, determined in the statement of a problem”;

- “Element that serves as a basis for reasoning, a starting point for research”;

- “Results of observations of experiments performed deliberately or in connection with other tasks and subjected to statistical methods”;

- “Conventional representation of information that allows it to undergo automatic processing”.

The usual definitions therefore vary between the status, function, origin and representation of data.

From among the literature on databases, we can cite (Elmasri and Navathe, 2016), a reference work that briefly and incidentally defines the concept: “By data, we mean known facts that can be recorded and that have implicit meaning”. In this case, the medium and the importance of semantics are highlighted, and we also come across the idea of fact.

(Borgman, 2015) studies the subject in greater depth and arrives at the following definition: “[...] data are representations of observations, objects or other entities used as evidence of phenomena for the purposes of research or scholarship”. Here, we find the idea of representing reality (used to define information) and it is interesting to note that this is not simply limited to observations. The words “entity” and “object” appear, with entity subsequently being defined by the author as “something that actually exists”, physically or digitally. However, we are looking here at use in an academic context.

(Kitchin, 2014) dedicated an entire chapter to exploring the word “data”. He explains, for example, the “matter” from which data are made: they are abstract, discrete, aggregable and have a meaning that is independent of their format, medium or context. He also draws a key distinction between fact and data: if a fact is incorrect, it is no longer a fact; however, if data are incorrect, they are still data. Therefore, data are what exists prior to the interpretation that transforms them into facts, evidence and information (Box 1).

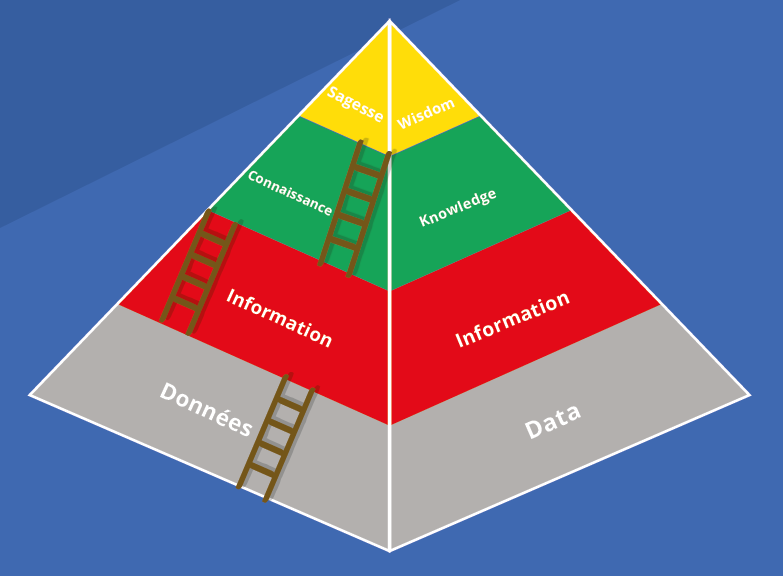

More generally, it is possible to build a pyramid: data > information > knowledge > wisdom (Figure 1), where each layer precedes the next and is deduced from the previous one by means of a “distillation process” (abstract, organise, analyse, interpret, etc.), which adds meaning and organisation and reveals connections. Two quotations help to visualise this: “Information is to data what wine is to the vine” (Weinberger, 2012) and “To inform is to give form” (Escarpit, 1991).

Figure 1. Knowledge Value Chain

Box 1. A Few Insights into Information Theory

Information theory first appeared in 1948, at the confluence of several stories, with the simultaneous arrival of the famous article by Shannon (Shannon, 1948) and the work by Wiener (Wiener, 1948). By introducing a sort of universal grammar of communication, both create a set of concepts and categories that apply to subjects as diverse as telecommunications, control or mechanical calculation (Triclot, 2014). Information theory therefore emerges in a universe of machines and plays an essential unifying role, building bridges between distant disciplines by providing them with a common vocabulary. This innovative work also makes it possible to quantify information: in Shannon’s vision, the backdrop is formed by the problem of performance limits for the compression of messages and their transmission and the decisive use of digital representation, which allows a true theory of code to be created. Shannon is aware of the limitations in this respect and does not believe that a single concept of information can take account of all possible applications*.

With Wiener, cybernetics turns information into a new dimension of the physical world: it is added to the traditional means of explanation, which are material and energy. It gives rise to a new class of problems in physics by introducing processes for processing information.

With the rise of the media and computerisation, the use of the word is becoming commonplace, but without tending towards a simple or shared definition. Nevertheless, some useful explanations can be given.

Generally speaking, (Buckland, 1991) identifies three meanings: information-object (data, information documents), information-process (the act of providing information) and finally information-knowledge (resulting from the information process).

(Floridi, 2010) describes it as a commodity with three properties:

- non-rivalry: several people may have the same information;

- non-exclusivity: it is a commodity that is easily shared... and restricting such sharing takes effort;

- zero marginal cost.

Finally, according to (Boydens, 2020), information:

- “results from the construction (shaping) of a representation (perception of reality);

- by means of a code or a language in the broadest sense, namely any system of expression, verbal or otherwise [...] likely to act as a means of communication between animate objects or beings and/or between machines;

- requires a physical substrate in order to be disseminated, whether it be vibrations of the air [...], a sheet of paper [...], an electronic medium [...];

- must be interpreted before it can be used”.

* “The word ‘information’ has been assigned different meanings by different authors in the general field of information theory. It is likely that some of these meanings will prove adequately useful in some applications to merit further studies and permanent recognition. It can hardly be expected that a single concept of information will satisfactorily capture the many possible applications of this generic field” (Shannon, 1953).

Moving Towards a Three-Dimensional Definition...

One of the difficulties posed by the concept of data is that it involves two very distinct subjects at the same time: on the one hand there is the value (number, code, character string) that can be found on any medium, together with a certain means of presentation and, on the other hand, there is the status of that value, its semantics, what it is supposed to represent. This means mixing operational concerns with more abstract considerations.

If we equate data with value, we immediately come up against a contradiction. When we find the number 324 in a file, what are we talking about? The height of the Eiffel Tower in metres? The area of a field in hectares? The melting point of a metal in degrees Celsius? The maximum speed of a vehicle in km/h? The number of inhabitants in a village?

Taken in isolation, the line “324; 3; 1889” is nothing but a string of characters, devoid of any meaning. At best it is a sequence of three numbers separated by semicolons, but it is absolutely not data. The simple fact of specifying that these are the characteristics of the Eiffel Tower, namely its height, number of levels and the year of its inauguration, transforms the status of this otherwise insignificant series of figures: intuitively, they are indeed data. The notion of data is therefore inseparable from the concept to which it refers. It also depends on other aspects, such as, in this case, the unit of measurement.

All this naturally leads us to a definition that is often cited, particularly in the literature on data quality (Olson, 2003; Loshin, 2010; Berti-Équille, 2012; Sadiq, 2013). In one of the first major works on the subject, Redman analyses several known definitions and searches for the most appropriate using the following criteria: three linguistic criteria (clarity, correspondence with common usage, absence of mention of the word “information”) and three usage criteria (applicability, possibility of introducing a quality dimension, consideration of the elements of concept and representation) (Redman, 1996).

This process led him to a definition that is well known among computer scientists: data is defined as a triplet (entity, attribute, value), the entity being a model of real world objects (physical or abstract), the attribute being characterised by a set of possible values, or domain. Due to the importance of this last point, we will make a slight change to the wording of the definition proposed by Redman, while remaining true to the initial logic, by suggesting that data be defined by means of the following triplet:

- the concept (for example, the height of a monument), which is itself characterised by a combination of an object (in this case the monument) and an attribute (its height);

- the domain, i.e. the range of possible values: in the case of a monument’s height, a positive integer with specification of the unit (metres);

- the value: in the case of the Eiffel Tower, 324.
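To fix ideas, the triplet can be sketched as a small data structure. This is only an illustration under our own assumptions: the class and field names (`Concept`, `Domain`, `Datum`, `entity`, `contains`, `is_conformant`) are hypothetical and come neither from Redman nor from any library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Concept:
    """What the value is supposed to represent: an attribute of an object."""
    obj: str        # e.g. "monument"
    attribute: str  # e.g. "height"


@dataclass(frozen=True)
class Domain:
    """Range of possible values; here an integer with a lower bound and a unit."""
    unit: Optional[str]
    minimum: int = 0

    def contains(self, value: object) -> bool:
        # bool is a subclass of int in Python, so it is excluded explicitly
        is_int = isinstance(value, int) and not isinstance(value, bool)
        return is_int and value >= self.minimum


@dataclass(frozen=True)
class Datum:
    concept: Concept
    domain: Domain
    value: int
    entity: str  # the specific instance the value refers to (hypothetical field)

    def is_conformant(self) -> bool:
        # Conformity to the domain only; says nothing about accuracy
        return self.domain.contains(self.value)


height = Datum(
    concept=Concept(obj="monument", attribute="height"),
    domain=Domain(unit="metre", minimum=1),  # positive integer, in metres
    value=324,
    entity="Eiffel Tower",
)
print(height.is_conformant())
```

Stripped of its `concept` and `domain`, the same `324` would be the bare number discussed above: the structure only makes explicit what the value needs around it.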

We will now try to follow the thread of each of these dimensions in order to better understand certain specificities of the concept of data, particularly when it comes to statistical use.

...The Associated Concept...

The concept is nothing more than the supposed meaning of data, what it is supposed to represent, how it can be represented by a definition. Here are a few examples: the area of a field, the turnover of a company, the profession of an employee, but also the size of a monument, the make of a vehicle, the value of a share on the stock market, the number of goals scored by a player, the risk rating of a client, a patient’s diagnosis, etc. At INSEE, definitions such as these are centralised within the RMéS repository (Bonnans, 2019).

These examples serve to verify that the concept is always defined as a specific attribute of an entity or object. In official statistics, in the case of a traditional survey approach or the processing of administrative reports, the entity in question is often an individual (or a household) or a company, but it could also be a dwelling, a construction site, a hospital stay or a school. And in the wording of the concept itself, there is often a tendency to remove the reference to the object, as it is self-evident.

We should add that, strictly speaking, data does not refer to an object in general, but to a given object or entity: what would make sense would be if it were the very precise area of a field, the profession of an identified person, the number of goals scored by a given player, the turnover of such-and-such a company.

The attribute also needs to be specified, particularly in terms of time: the profession on such-and-such a date, the number of goals scored in such-and-such a season, the turnover in such-and-such a year, etc. An object can have many attributes, but some of them play a specific role: identifying traits. These are the attributes that allow the object to be unambiguously identified, and therefore allow instances to be distinguished from one another: the things that naturally come to mind are surname, first name and date of birth in the case of an individual; the address in the case of an establishment; for a hospital stay, it could be the date of admission, the identifier of the medical establishment and the identifier of the individual. One of the features of identifying traits is that they are either irremovable (date of birth) or rarely changed (address).

But let’s return to the objects. Cases where individuals or companies are involved present undeniable advantages that we do not always consider:

- true stability over time;

- indisputable identifying traits, making it possible to locate them and distinguish between them without ambiguity;

- the existence of recognised frames of reference in which these traits are found, as well as identifiers recognised as common references (NIR, SIREN), with relatively transparent and shared registration principles.

Conversely, data likely to be obtained from other sources may refer to objects that are more difficult to understand, as they require inside information: the telephone line, the bank account, the electricity meter. They can even relate to volatile objects: data such as the amount of a transaction or the date of a traffic accident refer to objects (transaction, accident) that are associated with an event and that therefore do not have any temporal consistency.

As a result, there is no reference population for certain types of object that would provide a degree of stability and allow for macroscopic comparisons, consistency checks or margin calibration: for example, it is not easy to draw upon a repository of accidents or transactions to compare with a known total, or establish a framework or a limit. In the case of telephone lines and bank accounts, the operators have this information, but are under no obligation to share it. Furthermore, a statistician or data scientist must be able to work backwards to find entities that are of use for the analysis that they want to perform: for example, at the individual, household or company level. However, on the one hand, there is no bijection between the two (a person can have multiple bank accounts and multiple telephone lines) and, on the other hand, it is not immediately obvious that this link exists between the two (not to mention the legal issues that would arise).
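The absence of a bijection can be illustrated with a toy example. The account identifiers, person identifiers, balances and the `owner` link table below are all invented for the purpose of the sketch; in practice such a link table may simply not be available.

```python
from collections import defaultdict

# Values observed at the level of the source's own object: the bank account
balances = {"acc-1": 100.0, "acc-2": 250.0, "acc-3": 40.0, "acc-4": 10.0}

# Hypothetical link from account to person; it is many-to-one and incomplete
owner = {"acc-1": "person-A", "acc-2": "person-A", "acc-3": "person-B"}

per_person = defaultdict(float)
unlinked = []
for account, balance in balances.items():
    person = owner.get(account)
    if person is None:
        # No known link: the account cannot be attached to a statistical unit
        unlinked.append(account)
    else:
        per_person[person] += balance

print(dict(per_person))
print(unlinked)
```

Moving from four accounts to two persons changes the unit of analysis, and the unlinked account shows that part of the material may remain unusable at the level the statistician is interested in.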

...The Domain...

While the concept that we are discussing remains abstract, the domain requires us to address more operational considerations. (Olson, 2003) describes it as all of the “acceptable values for encoding a specific fact”. He specifies that the domain is generic and independent of the manner in which it will be physically implemented. In order to define the domain, the rules that the values must comply with must be clarified, independently of the applications that will use them. If they are properly defined via a metadata repository, the work of the developers of user applications becomes much easier.

The domain depends on the nature of the value and its type. If it is a quantity, for example, it will often be represented by an interval; negatives can be accepted for a temperature in degrees Celsius, but not for the height of a monument. It can refer to a unit of measurement (degree Celsius, metre), which will form an integral part of the definition of the domain, but this is not mandatory, particularly where whole numbers are involved (number of children).

In the case of a date, the expected formats should be specified, e.g. dd-mm-yy, or yy-mm-dd, and it is often the case that all of the possible entries are defined by an interval, which does not have the same meaning as a numerical interval, given the specific nature of dates (day ≤ 31, month ≤ 12).
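A date domain of this kind can be sketched as a format plus an interval. The function name `parse_in_domain` and the bounds are assumptions chosen for illustration, and a four-digit year (dd-mm-yyyy) is used here rather than the two-digit formats mentioned above, simply to avoid ambiguity about the century.

```python
from datetime import date, datetime
from typing import Optional


def parse_in_domain(text: str, fmt: str, earliest: date, latest: date) -> Optional[date]:
    """Return the date if `text` matches `fmt` and lies in [earliest, latest], else None."""
    try:
        parsed = datetime.strptime(text, fmt).date()
    except ValueError:
        # Wrong format, or a date that does not exist in the calendar
        return None
    return parsed if earliest <= parsed <= latest else None


FMT = "%d-%m-%Y"
EARLIEST, LATEST = date(1900, 1, 1), date(2020, 12, 31)

print(parse_in_domain("01-11-2020", FMT, EARLIEST, LATEST))  # inside the domain
print(parse_in_domain("31-11-2020", FMT, EARLIEST, LATEST))  # November has 30 days
print(parse_in_domain("01-11-2021", FMT, EARLIEST, LATEST))  # outside the interval
```

The second call shows why an interval of dates differs from a numerical interval: the calendar itself imposes rules that no pair of bounds can express.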

If it is an alphanumerical code, the domain is sometimes defined by intension, for example, in the case of identifiers with complex structures within large repositories (NIR or social security number for individuals, SIRET for business establishments), telephone numbers and even names (Elmasri and Navathe, 2015). Characterising the domain by extension, that is, by listing every admissible value, would actually present two disadvantages: not only would the list be too long to spell out (there are millions of values), but, more importantly, it is also constantly evolving. It is therefore better to specify the domain based on the rules restricting the set of possibilities: for example, the NIR has a very precise structure, with the first character representing the person’s sex, the two following characters representing the year of birth, followed by the month, etc.
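A definition by intension amounts to a rule rather than a list. The sketch below checks only the first five characters described above (sex, year of birth, month of birth). It is deliberately simplified: it assumes that sex is coded 1 or 2 and that the month lies between 01 and 12, and it ignores the remaining positions and the special cases of the real NIR. The sample codes are fictitious.

```python
import re

# Simplified rule for the prefix only: sex (1 char), year (2 chars), month (2 chars).
# Assumption: sex is coded 1 or 2 and month is 01-12; the real NIR has further rules.
NIR_PREFIX = re.compile(r"^(?P<sex>[12])(?P<year>\d{2})(?P<month>0[1-9]|1[0-2])")


def nir_prefix_ok(code: str) -> bool:
    """True if the first five characters follow the simplified rule."""
    return NIR_PREFIX.match(code) is not None


print(nir_prefix_ok("1850500000000"))  # fictitious code with a well-formed prefix
print(nir_prefix_ok("1851300000000"))  # month 13 breaks the rule
print(nir_prefix_ok("3850500000000"))  # first character outside the assumed codes
```

A rule of this kind remains valid as new identifiers are issued, which is precisely what a list of values could not offer.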

The more common approach is to define the set by extension: this is the approach taken in the case of a questionnaire by linking codes to responses (A – Strongly agree, B – Somewhat agree, C – Somewhat disagree, D – Strongly disagree) or by discretising a quantitative variable (e.g. age brackets).
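Both cases can be sketched in a few lines. The code list reproduces the questionnaire example above; the age brackets and their bounds are hypothetical, as are the names `label` and `age_bracket`.

```python
from bisect import bisect_right
from typing import Optional

# Domain by extension: every admissible code is listed with its meaning
AGREEMENT = {
    "A": "Strongly agree",
    "B": "Somewhat agree",
    "C": "Somewhat disagree",
    "D": "Strongly disagree",
}


def label(code: str) -> Optional[str]:
    """Return the meaning of a code, or None if it is outside the domain."""
    return AGREEMENT.get(code)


# Discretising a quantitative variable (hypothetical bounds)
AGE_BOUNDS = [25, 50, 65]
AGE_BRACKETS = ["0-24", "25-49", "50-64", "65+"]


def age_bracket(age: int) -> str:
    if age < 0:
        raise ValueError("age must be non-negative")
    # bisect_right counts how many bounds are <= age, which is the bracket index
    return AGE_BRACKETS[bisect_right(AGE_BOUNDS, age)]


print(label("B"), label("E"), age_bracket(24), age_bracket(25))
```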

This list of codes can also be derived from a classification... without actually being one itself. Indeed, classifications such as those for professions, economic activities and diseases are presented in the form of tree structures at different levels (Amossé, 2020); for example, in the French Classification of Activities (NAF), there are levels for section, division, group, class, sub-class, etc. Therefore, if the concept of data is economic activity, the domain cannot be the classification in its entirety: this would need to be a level within that classification or, more generally, any cut-off deemed relevant on the basis thereof, ultimately resulting in a “flat” list.
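The difference between a classification and a domain derived from it can be sketched with a toy tree. The codes below are invented and much simpler than those of the NAF; `level`, `flat_domain` and `roll_up` are hypothetical helper names.

```python
from typing import Dict, List, Optional

# Toy classification: each code points to its parent (None at the top level)
PARENT: Dict[str, Optional[str]] = {
    "A": None, "B": None,
    "A1": "A", "A2": "A", "B1": "B",
    "A1x": "A1", "A1y": "A1", "A2x": "A2", "B1x": "B1",
}


def level(code: str) -> int:
    """Depth of a code in the tree, the top level being 1."""
    depth = 1
    while PARENT[code] is not None:
        code = PARENT[code]
        depth += 1
    return depth


def flat_domain(depth: int) -> List[str]:
    """The 'flat' list of codes obtained by cutting the tree at one level."""
    return sorted(code for code in PARENT if level(code) == depth)


def roll_up(code: str, depth: int) -> str:
    """Map a detailed code to its ancestor at a coarser level."""
    if level(code) < depth:
        raise ValueError("code is coarser than the requested level")
    while level(code) > depth:
        code = PARENT[code]
    return code


print(flat_domain(2))
print(roll_up("A1y", 1))
```

A value recorded at a fine level can always be rolled up to a coarser one, whereas the reverse is impossible: this is one reason why the choice of level matters for subsequent use.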

We could go on and on with other types of data, but in all cases, specifying the domain means naming it and defining a certain number of properties that are to be respected: data typing, coupled with a list of acceptable values, elements such as length restrictions (or any other rules restricting the range of possibilities), rules relating to the presentation of missing values and even more technical points, such as the usable character set.

Basically, specifying a domain also requires choices to be made regarding granularity, which are decisive for subsequent use: the fineness of the description within a classification (cf. the example of the industry classification), the unit of measurement for a distance (m, km, light years), the precision of a date (year, day, minute, second, nanosecond). This also involves linking instructions to the admissible values, commentaries that are indispensable when it comes to creating the data (e.g. in the above example, if you want to say “somewhat agree”, you will enter B) and interpreting them (when someone reads B, they will understand what it means).

With the domain, we therefore establish a semantic and technical convention, shared by all designers and users of data, which, like the concept, is inseparable from the data. Armed with the concept and the domain, we have a kind of receptacle, at least in theory, into which we now need to feed a value (or not, for that matter).

...The Value

With the third element of the triptych, the value, we are entering a more tangible reality. This is the number, code, date or character string that will be linked to a domain and a concept. This time, the latter is instantiated: the value will relate to a well-defined entity and attribute, such as the socio-professional category of a particular employee of a particular establishment (in accordance with the PCS-ESE classification and on 1 November 2020), the mass (in megatons) of the sun, the altitude (in m) of Mont-Blanc, etc.

One thing needs to be clarified here: the value is linked to the instantiated concept, it refers to it… but that does not mean that it represents it faithfully, it provides no guarantee of accuracy at any given time: it is possible to find different values for the altitude of Mont-Blanc depending on where you look; a professional code submitted may be incorrect as a result of it no longer being up to date or because the person who has entered the code made a mistake, etc. This means that no guarantees are provided with regard to the reliability of data by the triptych of concept-domain-value. It makes it possible to provide a basis for the value, to give it a status other than a simple number or a meaningless code, but its reliability relates to other subjects, in particular the way in which the data was constructed.

In the absence of any certainty with regard to quality, the reference to the domain can provide us with a guarantee of conformity, reliability from a syntactic point of view (Batini and Scannapieco, 2016): as a minimum, the data should belong to it (for example, the fact that data representing dates does indeed have the properties that a date must have). Conformity of this nature is common... but not mandatory: this time, everything depends on the checks that would have been performed automatically on the value. The input tools will very often be designed so as to ensure that values can be selected from among the permissible ones displayed on the screen (prepared list of codes), or even to reject the value if it does not fall within the specified domain: this is the case, for example, when data is being collected by an interviewer. When dealing with digitalised and standardised data exchanges, the exchange protocol makes it possible to guarantee properties that go beyond even belonging to a domain: this is the case for social declarations (Renne, 2018). More generally, database management systems implicitly incorporate typing controls. In all of these situations, the structure ensures that the value is compliant, i.e. it falls within the set of admissible values. Nevertheless, in some cases, data may be input or calculated automatically without any checks being carried out and then saved in a file without any guarantee of conformity.

Finally, on a practical level, the value is found on a medium (physical or logical) that has no reason to be the same as the concept and the domain. And it refers to a certain number of coding standards that are, for the most part, completely unknown to the end users.

As was the case for the concept and the domain, the value therefore also brings its own rules, which in this case are of a more technical nature, and the technical choices will therefore already have been made: this applies to the data type, as well as to the way in which missing values are to be taken into account, for example. Therefore, as with the other two elements of the triptych, the value requires conventions. However, with this third and final dimension, we can see that individual reasoning, datum by datum, is largely artificial.

Datum, Data...

In fact, “data” as a material is peculiar in that it cannot be considered alone, as a grain of data separated from the rest. (Borgman, 2015) explains that “[...] data have no value or meaning in isolation; they exist within a knowledge infrastructure – an ecology of people, practices, technologies, institutions, material objects, and relationships”. In order to give them meaning, to extract information from them, they must be compared with their close equals in order to make comparisons and access the necessary environment in which to interpret them.

In addition, data are always presented together as a group, they work in a pack, as it were. Most of the time, this involves several instances of the same concept, for a shared attribute (for example, n individuals and, for each individual, the professional code), or several attributes of the same instance of the object (all of the data included in the tax return submitted by a certain individual), or several temporal specifications for the same instance and the same attribute (for example, the number of employees at a particular company, year by year).

This method of recording data as a group rather than individually requires documented rules for retrieving a specific datum from among a vast data set, and this depends on the way in which the data have been stored. They need to be placed somewhere, but at the same time, consideration must be given to the ways in which they can be retrieved.

There are numerous possibilities for this. Historically, data were presented in a structured file and a traditional technique consisted in characterising each datum using a range of columns within the file as “fixed positional”, or using the number of the order in which the datum appears in a list as “fixed delimited”. Approaches such as these require a large amount of documentation, which is generally quite cumbersome and complex to update.
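The two techniques can be sketched using the Eiffel Tower line from earlier. The field names, the column ranges and the padded positional line are assumptions made for the example; the point is that both layouts live in documentation outside the file.

```python
from typing import Dict

# Documentation the reader must have; it is not part of the file itself
DELIMITED_LAYOUT = ["height_m", "levels", "inauguration_year"]  # by order
POSITIONAL_LAYOUT = {                                           # by column range
    "height_m": (0, 4),
    "levels": (4, 6),
    "inauguration_year": (6, 10),
}


def read_delimited(line: str, sep: str = ";") -> Dict[str, int]:
    """'Fixed delimited': a datum is identified by its rank in the list."""
    fields = [field.strip() for field in line.split(sep)]
    if len(fields) != len(DELIMITED_LAYOUT):
        raise ValueError("unexpected number of fields")
    return {name: int(value) for name, value in zip(DELIMITED_LAYOUT, fields)}


def read_positional(line: str) -> Dict[str, int]:
    """'Fixed positional': a datum is identified by a range of columns."""
    return {name: int(line[start:end]) for name, (start, end) in POSITIONAL_LAYOUT.items()}


print(read_delimited("324; 3; 1889"))
print(read_positional(" 324 31889"))
```

Both calls return the same record, but only because the layouts are known: if the documentation is lost or out of date, neither line can be interpreted.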

There are other ways of doing this, such as adopting an XML-type markup language, where we once again come across the idea, at least in part, that the value must be enveloped by a concept and a domain: each value appears between two tags, which are themselves linked to the formal verification rules within the XML schemas, together with a name describing the concept. The characterisation of the data is therefore self-sufficient and no longer dependent on documentation. Another example is the JSON format, which is lighter than XML, less verbose, efficient, but at the same time less rich.
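By way of illustration, here is the same Eiffel Tower record in both formats, read with the Python standard library. The tag and key names are invented for the example. Note that `xml.etree.ElementTree` only parses the document: it does not validate it against an XML schema, so the formal verification rules mentioned above would require a separate validator.

```python
import json
import xml.etree.ElementTree as ET

# Each value is wrapped in a tag naming the concept; the unit travels with it
xml_text = (
    '<monument name="Eiffel Tower">'
    '<height unit="m">324</height>'
    "<levels>3</levels>"
    "<inauguration_year>1889</inauguration_year>"
    "</monument>"
)
root = ET.fromstring(xml_text)
height_element = root.find("height")
from_xml = {
    "name": root.get("name"),
    "height": int(height_element.text),
    "unit": height_element.get("unit"),
}

# The JSON version is lighter: keys name the concepts, values keep a basic type
json_text = (
    '{"name": "Eiffel Tower", "height": {"value": 324, "unit": "m"}, '
    '"levels": 3, "inauguration_year": 1889}'
)
from_json = json.loads(json_text)

print(from_xml)
print(from_json["height"]["value"], from_json["height"]["unit"])
```

In both cases the name of the concept accompanies the value, which is what makes the record readable without a separate layout description.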

...In Databases, Data Warehouses, Data Lakes, Data Streams

However, the most widespread option is of course to store the data in a database managed by a DBMS (database management system): this brings about excellent standardisation and provides guarantees with regard to the integrity of the data and the language with which they can be accessed (SQL); relational databases offer the possibility of making many changes on a frequent basis and are therefore highly suitable for use in business processes (Codd, 1970). Data warehouses, by contrast, are designed to facilitate analysis work, which makes them useful as aids to decision-making: they hold fixed data, which must comply with a binding framework with common axes of analysis and therefore common classifications in particular, even though the information comes from many different sources. Data lakes also hold fixed data; however, they are designed to be much less restrictive than data warehouses, at the price of deferring all of the standardisation work to the time at which the data are accessed.

There are even data structuring techniques that are designed for situations in which there is no time to store data (real-time systems in particular): these are known as Data Stream Management Systems, or DSMS (Garofalakis, Gehrke and Rastogi, 2016).

All in all, there are numerous methods for recording data in an organised manner; the differences in the methods should not be perceived as being of a technical nature, but rather as a function of the intended use of the data (management, decision-making, real time) and any possible constraints on them (in terms of volume, in particular). In each case, we come across the concept and the domain in some form, be it as (meta)data or in the associated documentation. Finally, it should be noted that reasoning on a dataset rather than on a single datum gives rise to other concepts: first of all, there is a need for overall coherence, from both a structural and a semantic point of view. The scope that this dataset represents becomes a subject of interest that is to be analysed as such and that fully involves statisticians. Finally, knowledge of the information source associated with this dataset represents a quality criterion (Loshin, 2010). This means that data cannot be approached without questioning the ecosystem in which it is found.

The Data Environment

The triplet (concept, domain, value) provides a useful framework, but is not enough to exhaust everything that the material of “data” carries with it.

Data are not a given, they are not inherent in nature: their existence results from a need; they are embedded in an environment. The fact that they are very strictly defined does not make them pure and perfect, because they are intrinsically linked to a use. They are only there to contribute to an objective and not to make reference information available to the public.

Denis (2018) therefore seeks to “unravel the data threads”. He gives the simple example of a death: here, the concept and domain are easy to define. However, he highlights the large number of players who are concerned by this information, and the way in which the uses vary depending on their activities: insurance companies, administration, etc. For example, insurers require proof: the fact that a person is considered dead in their computer system means that there is proof. However, a dead person may be registered as living within the same system, simply because the proof has not been submitted: as a result, the data do not reflect the true information; they prepare for a use within the scope of the business process. More generally, the desired data may result from events for which there is no guarantee that they will occur and be declared at the same time (for example, the cessation of a company’s activities).

Another example: when a person retires, the amount of the pension they receive depends on their career data. The majority of these data are submitted automatically, but there are some that are not and in some cases, the future retiree needs to submit proof of their career (pay slips in particular) at the last minute. Therefore, the career data of an individual who is not close to retirement may still contain “gaps” as a result of the fact that certain periods of employment have not yet been declared: the career as it appears in the operational system will therefore not correspond to the actual career.

The available data are therefore not necessarily as close to the evaluation of the concept, to the “true data”, as one would imagine. The business processes do not aim to produce “the truth”, but this does not mean that the data are “bad”. To understand the data is to understand the objective, the rules of the processes that gave rise to them.

More generally, where data are derived from a legal framework (e.g. social security), it is inevitable that there will be discrepancies between the data as they should appear in accordance with the law, i.e. the data as they would be if one had a perfect view of reality, and the data as they are found in the databases (Boydens, 2000). This means that there is a discrepancy between the theoretical data and the data actually recovered, which is linked to reactivity with regard to events. This allows for a different approach to the “quality” of data: quality relates to the data’s fitness for use rather than to their accuracy.

It is therefore necessary to understand where data come from, how they come about (sensor, input interface, automatic calculation, etc.) and to have knowledge of the event triggering the creation of data, as well as the subsequent processes that make use of those data (e.g. triggering of a benefit or a refund). We then see data less as an absolute truth and more as an essential link in a complex chain. This can help us to identify the reasons why data are missing (see the example of career data for a pension scheme) or inaccurate without the business process being deficient (see the example of a death as seen by an insurance company).

Compared with the previous examples, the traditional methods of obtaining data in statistics, namely surveys, appear to be an oddity: the data are not directly linked to a use by a downstream business process, they are simply used to feed into the calculation of aggregated data, statistics, which are a goal in themselves.

The Statistician Versus External Data

In a digitised world where data are a constant feature in our lives and those of our organisations, people tend to think that the increase in the number of sources of data on all subjects would make a statistician’s job easier. To coin a phrase, they’re practically tripping over them.

This view is largely incorrect, as it is based on a significant misunderstanding with regard to the meaning of the word “data”: what statisticians can actually access, more or less easily, are considerable quantities of values (numbers, codes, descriptions, dates, etc.), but not the equivalent in data. This is because these values remain a dead letter unless you have a robust key that allows them to be interpreted: the concept, the domain and also the scope need to be adequately explained. These values cannot be used for analytical purposes on the basis of weak or non-existent conventions or vague, insecure and implicit characterisations.
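The difference between a bare value and a datum can be sketched in a few lines. The class `Datum` and the two domain checks below are hypothetical; they simply encode the (concept, domain, value) triple, with the domain expressed as a membership test.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Datum:
    """A value together with the key needed to interpret it."""
    concept: str                       # what the value is supposed to measure
    domain: Callable[[object], bool]   # membership test for admissible values
    value: object

    def is_valid(self) -> bool:
        return self.domain(self.value)

def is_two_digit_code(v) -> bool:
    """Domain of a code made up of exactly two digits."""
    return isinstance(v, str) and len(v) == 2 and v.isdigit()

def is_headcount(v) -> bool:
    """Domain of a non-negative whole number of employees."""
    return isinstance(v, int) and not isinstance(v, bool) and v >= 0

# The same characters "21" mean nothing until a concept and a domain are attached.
category = Datum("socio-professional category", is_two_digit_code, "21")
headcount = Datum("number of employees", is_headcount, 21)
stray = Datum("socio-professional category", is_two_digit_code, "2019-12-31")

checks = [category.is_valid(), headcount.is_valid(), stray.is_valid()]
# checks == [True, True, False]
```

A value such as "2019-12-31" is perfectly legitimate in itself; it only becomes an error once the concept and domain it is supposed to belong to are made explicit.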

The primary responsibility of a statistician when confronted with external datasets is to reveal and unravel a web of conventions underlying those datasets (Martin, 2020): conventions concerning the definition, objects, time scales, nomenclatures, formats, missing values, etc., all of which will allow them to reconstitute the concept-domain-value triptych and to characterise the population covered. The existing conventions must be actively sought and used, as they are important allies: accounting standards, for example, are of great use to business statisticians (they offer guarantees of quality), as well as the legal bases of concepts, which can lead to the quality of the data being established or, on the contrary, a need to remedy any deviations.

But this is not enough: the living, evolving data linked to business processes must be replaced with observations. These should be fixed in time at the same moment t (for example, the situation of companies as at 31/12/2019) or over the same period (for example, company activities during the 2019 calendar year), and must refer to objects that are separate from and homogeneous with one another, as well as their attributes. This homogenisation work is a key element of the work of a statistician, but it actually began long before official statistics with the metric system and the unification of weights and measures (Desrosières, 1993).
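As a minimal sketch of this freezing step, consider a living register in which each unit carries a start date and, where applicable, an end date. The records and the functions `active_on` and `active_during` are hypothetical; the convention assumed here is that `end` is the last day of activity.

```python
from datetime import date

# Hypothetical "living" register: it keeps changing as events are declared.
register = [
    {"id": "A", "start": date(2015, 3, 1), "end": None},
    {"id": "B", "start": date(2018, 6, 15), "end": date(2019, 9, 30)},
    {"id": "C", "start": date(2020, 2, 1), "end": None},
]

def active_on(units, t):
    """Observation at a moment t: units in activity on that day."""
    return [
        u["id"]
        for u in units
        if u["start"] <= t and (u["end"] is None or u["end"] >= t)
    ]

def active_during(units, first, last):
    """Observation over a period: units active on at least one day of it."""
    return [
        u["id"]
        for u in units
        if u["start"] <= last and (u["end"] is None or u["end"] >= first)
    ]

at_year_end = active_on(register, date(2019, 12, 31))
# at_year_end == ["A"]
during_2019 = active_during(register, date(2019, 1, 1), date(2019, 12, 31))
# during_2019 == ["A", "B"]
```

The two observations differ for unit B, which was active during 2019 but had ceased by 31/12/2019: the choice between a date and a period is itself a convention that has to be made explicit.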

In other words, it is a case of reconstructing a posteriori a pseudo-observational device using data that were not designed for this purpose. In order to do so, public statisticians use a powerful set of conventions, which is widely shared: registers, classifications, definitions of concepts, statistical units, notation conventions, which translate into a vast set of metadata. The classifications are widely coordinated (those belonging to INSEE or the ATIH, for example), and the conventions relating to the reference populations allow for the calibration, monitoring and comparison of the statistics obtained.

With uncontrolled external data, the statistician will also be faced with a multitude of shortcomings within those data, which can cast doubt on them and their quality. And this is still true even if they are perfect for their intended purpose. In order to avoid this classic pitfall, the statistician will need to understand the operational environment of the original data to determine the way in which they can be used. This will have an impact on the steps for the automatic and manual check and for the imputation of dubious or missing values. Matching and therefore the identification of objects forces the statistician to question their meaning and stability, as well as their links to the statistical units under consideration (Christen, 2012). The final validation of the aggregates and their interpretation will refer to the meaning of the reference population covered.

These external data are just one of the inputs to a statistician’s work and they are used to develop new data, in this case aggregated data known as “statistics”. As data, they will have their own characterisations with a particularly stringent requirement with regard to consistency, which highlights the essential role of metadata.

By Way of a Conclusion

There is no data in nature. None whatsoever. In other words, data are not given, they have to be constructed, taken (captum vs datum). They require upstream modelling work, abstraction, specification of concepts and domains, before you can even think about producing values. They are themselves dependent on choices linked to uses. However, they do exist in the vast digital world, and it seems logical that official statistics is looking into ways in which these can be used effectively for its own needs, even if it means inventing new ones suitable for fuelling the public debate.

This represents a profound change for public statisticians, even though they have long-standing experience of using administrative data. Up until the 19th century and even at the start of the 20th century, the census was the main format used by official statistics. During the second half of the 20th century, surveys took on a leading role, with an end-to-end process that made use of a veritable arsenal of mathematical and technical resources. Without taking away from the other formats, the 21st century would belong to data: statisticians are also computer scientists, explorers of the digital realms and are, in fact, data scientists. However, if we reread Volle (1980), we can see that the foundations remain the same: registers, classifications, codes, definitions, statistical units, everything that we now group together under the heading of metadata. In addition to the key role taken by IT, the other major change in the business lies in the need to immerse oneself in the original functions of the data in order to make better use of them. It is this need for openness to external processes (and not just to user needs), this versatility, this agility, this curiosity tinged with great rigour, that will be the hallmark of a new generation of statisticians.

Published on: 15/09/2022

See Wikipedia, Statistics.

Annual Declaration of Social Data.

We also find other characterisations, which are those of information technology, but we will discuss these in the next section.

See the chapter entitled “Conceptualising data”, pp. 2-26.

... although information does not systematically rely on a data layer (cf. the alarm call of an animal when a predator is present).

The definition is equivalent, we are not adding anything, nor are we taking anything away, this simply serves to facilitate subsequent developments by clearly separating the semantic (concept) dimension from the more technical dimension (the range of values).

This term is often used in computer science: we will talk about class (referring to the entity in general) and the instance of a class. For example, the monument is the abstract entity, the class, and the Eiffel Tower or the Taj Mahal are instances of that class.

For example, (Loshin, 2010) puts forward several data quality criteria, including the identifiability of an object (p. 142 and 144).

Registration index number (for natural persons) and company identification number in the Sirene register.

Like the RMéS repository, described in (Bonnans, 2019).

Olson highlights (p. 145) that, in practice, few companies actually implement this. By way of examples, he points to dates, postal codes and units of measurement.

Strictly speaking, nature and type are two distinct properties: for example, the CSP is a code made up of two figures; that is its nature. Thus, 21 → artisans. This code can be represented by the number 21 or by the character string “21”: these are two different data types.

A symbol made up of a succession of characters that can be either numbers or letters.

In other words, it is better to define it by its properties rather than by the list of its elements.

Lexical structures of this type can be defined mathematically using a dedicated language, that of regular expressions: see the example of the technical specification for the Nominal Social Declaration (DSN) (CNAV, 2020), pp. 71-73.

See (Olson, 2003), p. 149, and also the technical specification for the DSN (CNAV, 2020), referred to above.

The concept also includes a choice with regard to granularity, for example, the use of a geographical level that is more or less fine.

Classification of professions and socio-professional categories of salaried employment of private and public employers.

The authors distinguish between syntactic accuracy and semantic accuracy. The first relates to the adequacy of the domain and is independent of its reliability; the second is the proximity to the assumed correct value. (Volle, 1980) makes the same distinction, see p. 60.

In the case of characters, the ASCII and EBCDIC standards, for example.

Fixed positional: the position of a datum is indicated by the start and end columns between which it is located, for example, a first name between columns 31 and 50.

Fixed delimited: the values are separated by delimiters, and we will know, for example, that the datum we are looking for is the third in the sequence.

Extensible Markup Language.

JavaScript Object Notation.

This is known as On-Line Analytical Processing (OLAP). The first papers on this subject date back to 1993 and once again include Codd, who designed the relational databases.

See the structural and semantic coherence (pp. 137-139) and the source (lineage), as a quality criterion (pp. 135-136).

With the exception of some specific cases... most notably statistical data.

See pp. 18-19.

The most recent version of the RGCU (Single Career Management Directory) is expected to bring about significant improvements.

“Quality is fitness for use” (Juran, 1951).

See pp. 186-191 with regard to the importance of conventions.

Agency for Information on Hospital Care.

Example: in the case of the afore-cited vital status (Denis, 2018), it would be better to leave the data as is in the event of death and perhaps perform imputation for a person assumed to be alive beyond a certain age.

Take, for example, the mobility statistics during the 2020 health crisis, which would have been extremely difficult and costly to build using traditional surveys (see the article by Jean-Luc Tavernier in this issue).

Further Reading

AMOSSÉ, Thomas, 2020. La nomenclature socioprofessionnelle 2020 – Continuité et innovation, pour des usages renforcés. In: Courrier des statistiques. [online]. 27 June 2019. N° N4, pp. 62-80. [Accessed 1 December 2020].

BATINI, Carlo and SCANNAPIECO, Monica, 2016. Data and Information Quality – Dimensions, Principles and Techniques. Springer. ISBN 978-3-319-24104-3.

BECKER, Howard, 1952. Science, Culture, and Society. In: Philosophy of Science. October 1952. The Williams & Wilkins Co. Volume 19, n° 4, pp. 273–287.

BERTI-ÉQUILLE, Laure, 2012. La qualité et la gouvernance des données au service de la performance des entreprises. Lavoisier-Hermes Science, Cachan. ISBN 978-2-7462-2510-7.

BONNANS, Dominique, 2019. RMéS, le référentiel de métadonnées statistiques de l’Insee. In: Courrier des statistiques. [online]. 27 June 2019. N° N2. [Accessed 1 December 2020].

BORGMAN, Christine L., 2015. Big Data, Little Data, No Data – Scholarship in the Networked World. The MIT Press. ISBN 978-0-262-02856-1.

BOYDENS, Isabelle, 1999. Informatique, normes et temps – Évaluer et améliorer la qualité de l’information : les enseignements d’une approche herméneutique appliquée à la base de données « LATG » de l’O.N.S.S. Éditions E. Bruylant. ISBN 2-8027-1268-3.

BOYDENS, Isabelle, 2020. Documentologie. Presses Universitaires de Bruxelles, Syllabus de cours. ISBN 978-2-500009967.

BUCKLAND, Michael K., 1991. Information as Thing. In: Journal of the American Society for Information Science. [online]. June 1991. 42:5. pp. 351-360. [Accessed 1 December 2020].

CHRISTEN, Peter, 2012. Data Matching – Concepts and Techniques for Record Linkage, Entity Resolution, and Duplicate Detection. Springer. ISBN 978-3-642-31164-2.

CNAV, 2020. Cahier technique de la DSN 2021-1. [online]. 14 January 2020. [Accessed 1 December 2020].

CODD, Edgar Franck, 1970. A Relational Model of Data for Large Shared Data Banks. In: Communications of the ACM. [online]. June 1970. Volume 13, n° 6, pp. 377-387. [Accessed 1 December 2020].

DENIS, Jérôme, 2018. Le travail invisible des données – Éléments pour une sociologie des infrastructures scripturales. [online]. August 2018. Presses des Mines, Collection Sciences Sociales. [Accessed 1 December 2020].

DESROSIÈRES, Alain, 1993. La politique des grands nombres – Histoire de la raison statistique. Reissued 19 August 2010. Éditions La Découverte, collection Poche / Sciences humaines et sociales n°99. ISBN 978-2-707-16504-6.

ELMASRI, Ramez and NAVATHE, Shamkant B., 2015. Fundamentals of Database Systems. 8 June 2015. Pearson, 7th edition. ISBN 978-0-13397077-7.

ESCARPIT, Robert, 1991. L’information et la communication – Théorie générale. 23 January 1991. Hachette Université Communication. ISBN 978-2-010168192.

FLORIDI, Luciano, 2010. Information – A very short introduction. February 2010. Oxford University Press. ISBN 978-0-199551378.

GAROFALAKIS, Minos, GEHRKE, Johannes and RASTOGI, Rajeev, 2016. Data Stream Management – Processing High-Speed Data Streams. Springer. ISBN 978-3-540-28607-3.

JURAN, Joseph M., 1951. Quality-control handbook. McGraw-Hill industrial organization and management series.

KITCHIN, Rob, 2014. The Data Revolution – Big Data, Open Data, Data Infrastructures and Their Consequences. SAGE Publications. ISBN 978-1-4462-8747-7.

LOSHIN, David, 2010. The Practitioner’s Guide to Data Quality Improvement. 15 October 2010. Morgan Kaufmann. ISBN 978-0-080920344.

MARTIN, Olivier, 2020. L’empire des chiffres. 16 September 2020. Éditions Armand Colin. ISBN 978-2-20062571-9.

OLSON, Jack E., 2003. Data Quality – The Accuracy Dimension. [online]. January 2003. Morgan Kaufmann. ISBN 1-55860-891-5.

REDMAN, Thomas C., 1997. Data Quality for the Information Age. January 1997. Artech House Computer Science Library. Pp. 227-232. ISBN 978-0-89006-883-0.

RENNE, Catherine, 2018. Bien comprendre la déclaration sociale nominative pour mieux mesurer. In: Courrier des statistiques. [online]. 6 December 2018. N° N1, pp. 35-44. [Accessed 1 December 2020].

RIVIÈRE, Pascal, 2018. Utiliser les déclarations administratives à des fins statistiques. In: Courrier des statistiques. [online]. 6 December 2018. N° N1, pp. 14-24. [Accessed 1 December 2020].

SADIQ, Shazia, 2013. Handbook of Data Quality : Research and Practice. Springer. ISBN 978-3-642-36257-6.

SHANNON, Claude Elwood, 1948. A Mathematical Theory of Communication. In: The Bell System Technical Journal. July 1948. Volume 27, N° 3, pp. 379-423.

SHANNON, Claude Elwood, 1953. The lattice theory of information. In: Transactions of the IRE Professional Group on Information Theory. February 1953. Volume 1, n° 1, pp.105-107.

TRICLOT, Mathieu, 2014. Le moment cybernétique – La constitution de la notion d’information. Champ Vallon. ISBN 978-2-876736955.

VOLLE, Michel, 1980. Le métier de statisticien. [online]. Éditions Hachette Littérature. ISBN 978-2-010045295. [Accessed 1 December 2020].

WEINBERGER, David, 2012. Too Big to Know. 1 January 2012. Basic Books, New York, p. 2. EAN 978-0-465021420.

WIENER, Norbert, 1948. Cybernetics – Or Control and Communication in the Animal and the Machine. 1961, 2nd edition. The MIT Press, Cambridge, Massachusetts. ISBN 978-0-262-73009-9.